Popcorn.js

The Hyperaudio Pad – Next Steps and Media Literacy

By Mark Boas

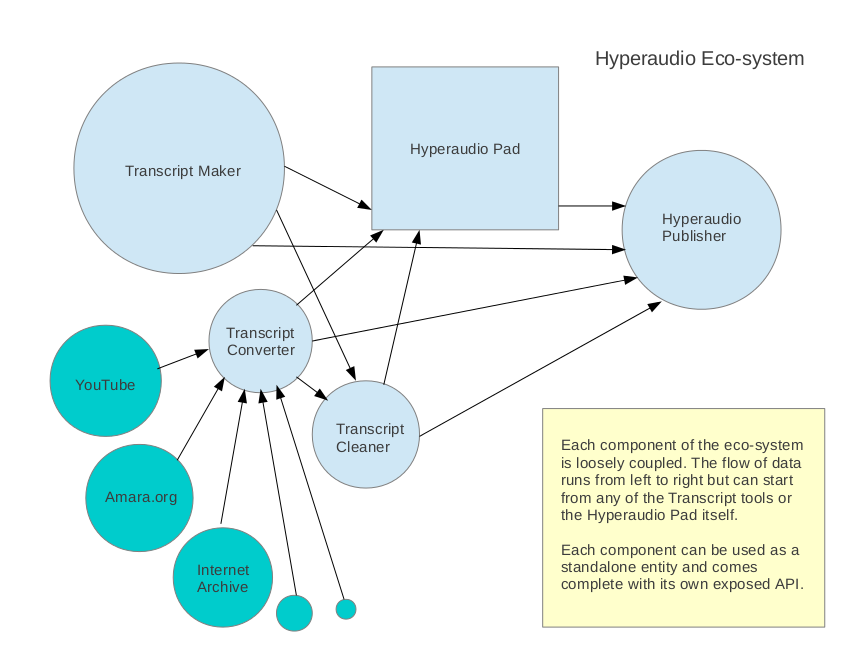

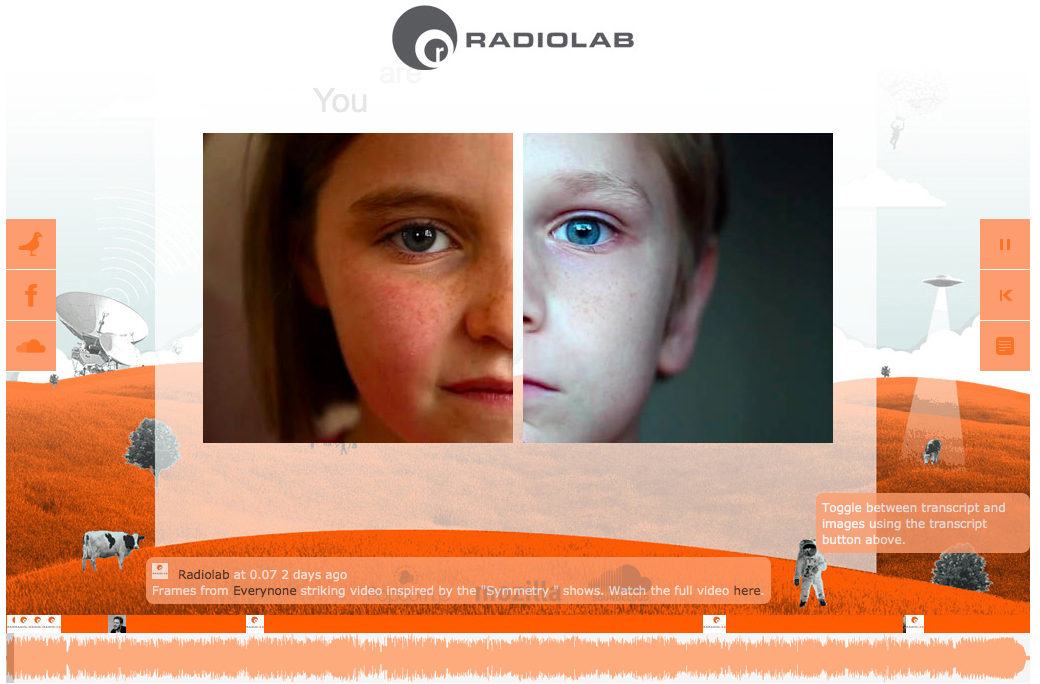

Hyperaudio is a term coined by Henrik Moltke. After discovering we had similar interests we started working on the concept a while back, resulting in a few demos such as the Radiolab demo for WNYC. That was a couple of years ago now. Since then I have been working with my colleague Mark Panaghiston on various Hyperaudio demos and thinking about how we can create a suite of tools I imaginatively call the Hyperaudio Ecosystem. At the center of that ecosystem sits the Hyperaudio Pad.

What is Hyperaudio?

But hey, let’s back up a bit. What is this Hyperaudio of which I speak? Simply put, Hyperaudio aims to do for audio what hypertext did for text – that is to integrate audio fully into the web experience. I wrote more about that here.

The Hyperaudio Pad is a tool that enables people to build up or remix audio and video using the underlying timed-transcripts. At the moment we’re concentrating on transcribing the spoken word.

Over the last seven days or so the other Mark and I have been working fairly solidly on taking the Hyperaudio Pad to the next level. It’s great to work with Mark P for several reasons. Obviously, as key author of jPlayer, he knows pretty much all there is to know about web-based media, but also, although we are both developers, we are in many ways diametrically opposed. Mark is keen on code quality and doing things properly and I just want to get things out there. Happily the compromise we arrive at when we work together is usually a good one. Mark is coming around to minimal-viable solutions and I have to admit that doing things right can save time in the long run.

In fact there is a world of difference between the earlier versions of the Hyperaudio Pad that I largely developed and the version we have now after a week of collaboration.

Putting our weird Renée and Renato relationship aside for a bit, let’s talk a little about what it is that the Hyperaudio Pad is actually meant to do.

The Power of the Remix

OK, so the key aims here are really to encourage the remixing of media in a new and refreshingly easy way and, in doing so, promote media literacy. On the subject of remixing and its importance in counter-culture I’d encourage you to check out RIP! : A Remix Manifesto and the excellent Everything is a Remix series.

Actually Brett Gaylor of Remix Manifesto fame is doing similar work by leading the effort building sequencing into Popcorn Maker. We’re approaching the same objective from completely different angles.

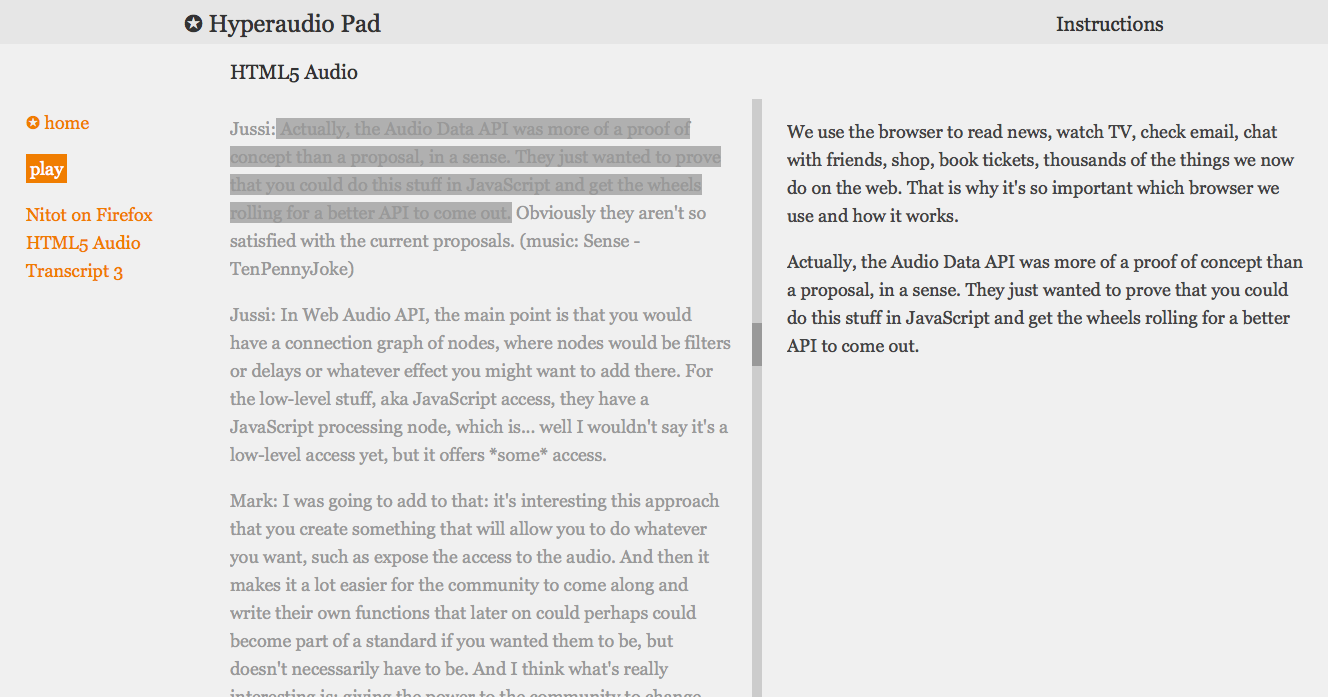

Although we use the Popcorn.js library at the heart of the Hyperaudio Pad, we use text to describe and represent media content. This may seem counterintuitive, but transcripts can actually be a great way to navigate media content. Transcripts help break media out of its black box, and we can scan and search through it relatively quickly. The Hyperaudio Pad borrows from the word-processor paradigm, allowing people to copy blocks of transcript and associated media and, further, allowing them to describe transitions and effects and adjust volumes using natural language that resembles editing directions in a script.

Media Literacy

As an example of some transitions and effects you can currently insert between clips:

[fade through black over 2 seconds, apply effects nightvision, scanlines, tvglitch] currently works.

which is equivalent to:

[fade black 2 apply nightvision scanlines tvglitch]

but we want to encourage the user to be descriptive as these directions are both human and computer readable.

Other effects include : ascii, colorcube, emboss, invert, noise, ripple, scanlines, sepia, sketch, vignette
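To make the equivalence of the descriptive and terse forms concrete, here is a minimal sketch of how such a direction might be reduced to a small command object. This is not the Hyperaudio Pad's actual parser: the function name `parseDirection`, the list of filler words and the shape of the returned object are all assumptions made for illustration.

```javascript
// Hypothetical sketch: reduce a bracketed direction to a small command object.
// Filler words are dropped so that the descriptive and terse forms match.
const FILLER = new Set(["through", "over", "seconds", "second", "effects", "effect"]);

function parseDirection(direction) {
  // Strip the surrounding brackets, lowercase, and split on spaces and commas.
  const tokens = direction
    .replace(/^\s*\[|\]\s*$/g, "")
    .toLowerCase()
    .split(/[\s,]+/)
    .filter((t) => t.length > 0 && !FILLER.has(t));

  const result = { transition: null, colour: null, duration: null, effects: [] };
  let i = 0;

  if (tokens[i] === "fade") {
    result.transition = "fade";
    i += 1;
    // An optional colour word, then an optional duration in seconds.
    if (i < tokens.length && Number.isNaN(Number(tokens[i])) && tokens[i] !== "apply") {
      result.colour = tokens[i];
      i += 1;
    }
    if (i < tokens.length && !Number.isNaN(Number(tokens[i]))) {
      result.duration = Number(tokens[i]);
      i += 1;
    }
  }

  // Everything after "apply" is treated as a list of effect names.
  if (tokens[i] === "apply") {
    result.effects = tokens.slice(i + 1);
  }
  return result;
}

const verbose = parseDirection(
  "[fade through black over 2 seconds, apply effects nightvision, scanlines, tvglitch]"
);
const terse = parseDirection("[fade black 2 apply nightvision scanlines tvglitch]");
console.log(JSON.stringify(verbose) === JSON.stringify(terse)); // true
```

Treating the extra words as optional filler is what lets a direction stay readable to a person while still being unambiguous to the machine.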

This system will evolve of course, and we hope to allow stuff like:

[change volume to 50% over 3 seconds, change brightness to 20% over 2 seconds, play track 'love me do' from 30 seconds to 35 seconds, change track volume from 0 to 80% over 2 seconds]

(stuff like that)

Transcript snippets, combined with text-described transitions and effects, act as a kind of source code for the media.

Weak References

Cuts are nothing more than weak references to media files plus start and end times, and effects are simply layered over the top in real time. This means we can make additive remixes as easily as subtractive ones. That is to say, somebody could take a remix and enhance it by peeling back a specific snippet to reveal more, thereby adding to the piece.

In fact a key goal here is to allow remixing of remixes.
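As a rough illustration of why remixing a remix is cheap, a remix can be modelled as a list of cuts that only point at media. The sketch below is an assumption about the data shape, not the Pad's real format: `makeCut`, `peelBack`, `duration` and the file names are hypothetical.

```javascript
// Hypothetical data shape: a cut only points at media, it never contains it.
function makeCut(src, start, end, effects = []) {
  return { src, start, end, effects };
}

// Widen a cut by a number of seconds on either side ("peeling back" a snippet).
// Returns a new remix; the original remix and the media are left untouched.
function peelBack(remix, index, secondsBefore, secondsAfter) {
  return remix.map((cut, i) =>
    i === index
      ? { ...cut, start: Math.max(0, cut.start - secondsBefore), end: cut.end + secondsAfter }
      : cut
  );
}

// Total running time of a remix in seconds.
function duration(remix) {
  return remix.reduce((total, cut) => total + (cut.end - cut.start), 0);
}

const remix = [
  makeCut("interview.webm", 12, 20, ["sepia"]),
  makeCut("speech.webm", 95, 101),
];
const extended = peelBack(remix, 1, 5, 3);

console.log(duration(remix));    // 14
console.log(duration(extended)); // 22
```

Because nothing is rendered into a new file, the extended remix is just another small list of references that somebody else can pick up and change again.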

So a bit more about the technology powering this. Effects are handled by Brian Chirls’ seriously cool Seriously.js – a comprehensive video compositing library. The great thing about Seriously.js is that it is a modular system. We can add effects (and load their code dynamically) as we go. I also look forward to using some of our experience gained with the Web Audio API to apply realtime audio effects. The wider vision is to make a system that will take raw media, transcribe it and allow you to do all the things you could do with a professional video editing package.

We’ve got plenty more to do, certainly some bugs to iron out, but we’re steadily approaching beta. A lot has been restructured behind the scenes, giving us a more stable platform to build on and more quickly enhance. A question I posed and answered on Twitter: what do you call something between alpha and beta? Alpha.

Going Forward

We still plan to add the following features in the near future :

- alter brightness, contrast, saturation etc

- control and fade volume

- add audio track that can be played simultaneously or sequentially

- add effects/transitions in paragraph etc

- allow editing right down to the word, delete words

- allow fine tuning of start and end points

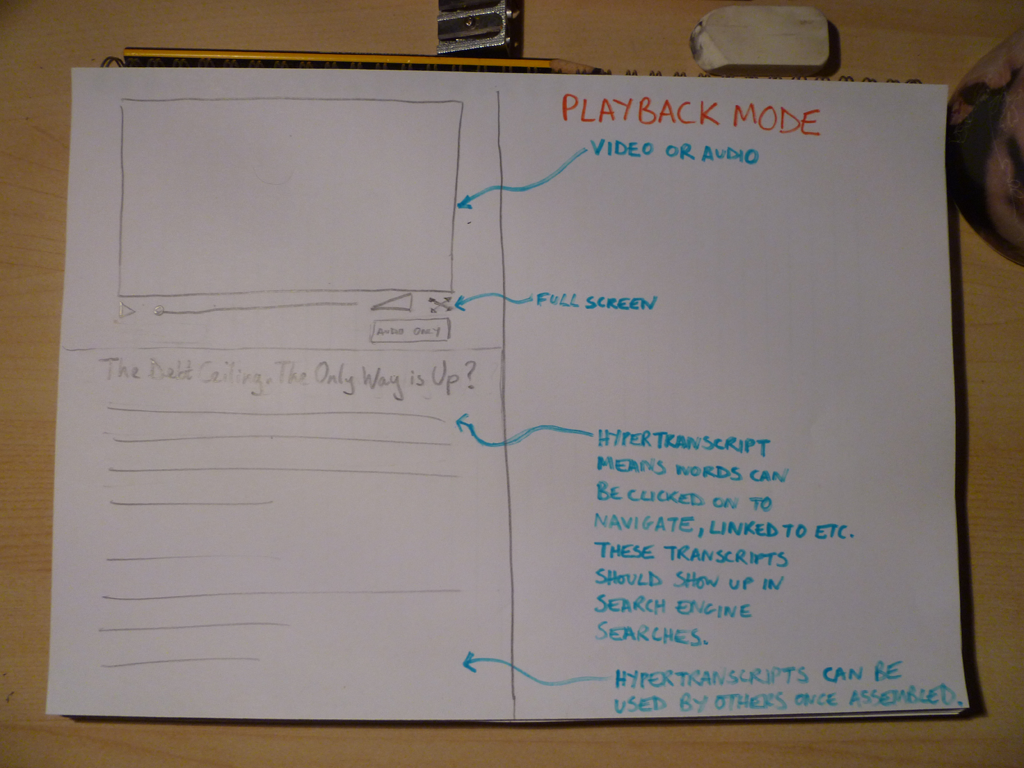

- allow the playback and sharing of fullscreen video remixes with an option to see the underlying ‘source code’.

If you want to play around yourself you can find the latest demo here.

Source code is on GitHub (I should get around to adding an MIT license or something).

Or you can watch a screen recording of where we were at as of yesterday. Apologies for the choppy quality, oh and the background music, it seemed a good idea at the time.

Hyperaudio at the Mozilla Festival

This year has run contrary to previous years of my life in the sense that it seems to have lasted much longer than the year before. This may have something to do with a small addition to the family that arrived in March and the consequent ramping up of waking hours, but I prefer to think it’s because things are moving so quickly in that wonderful place we call the worldwide web, that it seems that too much has happened to compress into a year.

It was only a year ago for example, when I started tinkering with jPlayer to couple text and audio and was asked to show off a very small demo at the first Mozilla Festival in Barcelona last year.

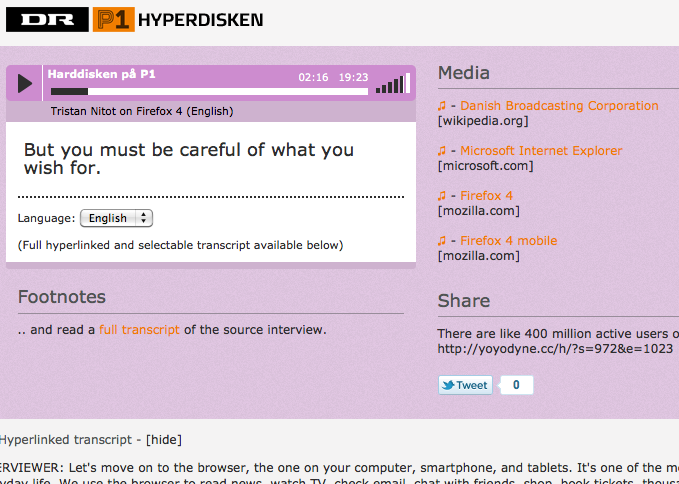

A few months later I was introduced to and became involved with something known as Hyperaudio and was given the opportunity to create a couple of proof-of-concept demos : Denmark Radio’s Hyperdisken Demo and the Radiolab/Soundcloud collaboration (a shout-out to Henrik Moltke for doing much of the groundwork for these demos and my colleagues at Happyworm, without whom they just wouldn’t have been possible).

Further on through the arc of this epic year, I took part in the Mozilla Knight Journalism challenge where I was encouraged to research and blog some ideas on a tool I called the Hyperaudio Pad. Happily I was flown over to Berlin with other like-minded MoJo peeps for a week of intense discussion, collaboration and hacking, and managed to get the first semblance of an actual product out of the door.

Which brings me in a round-about way to this year’s Mozilla Festival, where I will again be demoing and chatting about the coupling of text and media and the other things that Hyperaudio is (or could be) about. This all takes place at the Science Fair on Friday, 4th of November, and at a Hyperaudio Workshop / Design Challenge on Saturday the 5th.

The Science Fair will be all about demos, chatting to people interested in the general Hyperaudio concept and making sure I don’t spill my drink over my laptop. Meanwhile in the workshop we hope to put the Hyperaudio Pad through its paces and actually make something with it, while gathering ideas and maybe hacking on some of them into the bargain. It should be a fun mix of hacks, hackers, designers, audio buffs and basically anybody curious enough to be involved. The session will be fairly free-flowing but the plan so far is to:

- Briefly chat about and demo the Hyperaudio concept, what it can do and with whom we can collaborate.

- Break out into groups to come up with new ideas, applications and designs.

- Reconvene and talk about the groups’ ideas.

- Break out into groups to work on these ideas, whether it is creating a programme, UI mockups, story-boarding functionality or even developing small demos.

- Create something we can show other people – hopefully a program created with the Hyperaudio Pad but also some new ideas.

Hopefully we can cram this all into 3 hours. Folk from the BBC, Universal Subtitles, Sourcefabric and other cool people are planning to be in attendance, which should make for some interesting crossovers and fantastic opportunities to consult and maybe even influence organisations in a position to use the stuff we’re making. Additionally the BBC are providing us with some fantastic media to work with.

All will be revealed over the weekend. Book your place and get your eyes and ears ready to be part of the Hyperaudio experience.

The Hyperaudio Pad – a Software Product Proposal

Imagine

Imagine that making your own video or audio news programs was really easy.

Imagine if you could pull together news stories from various sources, combine them and publish the results in minutes.

Imagine that the story you made came complete with a transcript, was fully accessible and could be picked up by search engines.

Imagine that you could easily embed your story into a web page and that others could incorporate your story into new stories.

Imagine that viewers of your story could share any part of it in seconds.

Imagine all this and you are imagining the functionality of the Hyperaudio Pad.

Listen to a use case.

Watch the screencast.

Hypertranscripts

Currently, putting together a media program will probably involve some fairly complex audio/video editing software. The result – a fairly ‘static’ representation. The whole process is time-consuming, wasteful and inflexible.

By changing the way we think about media, we can create a new way of manipulating it. The Hyperaudio Pad allows quick manipulation of media and takes advantage of a form of media representation referred to as a hypertranscript.

When we tell a story we invariably use the spoken word. The spoken word can be transcribed and, once transcribed, can be used as an accurate reference to the media it is associated with.

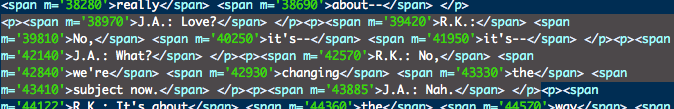

Hypertranscripts are part of a broader concept dubbed Hyperaudio by Henrik Moltke. They are transcripts hyperlinked to the media they represent, a type of fine-grained subtitle. They are separate entities from the media they describe, existing as word-level aligned references expressed in HTML.

Tools and services exist to create Hypertranscripts; word-level timing can even be approximated from subtitles. In the past I have used libraries such as Popcorn.js, jPlayer and data from the Universal Subtitles project.

The good news is that Hypertranscripts already exist. Here are a few examples:

Screencast [youtube] of the Danish Radio Demo

Screencast [youtube] of the Radiolab Demo.

Quick and simple demo I put together.

Demos by others include: Minnesota Public Radio Demo, Voxallead News and Liris Interactive Transcript

Using Hypertranscripts we can:

- Navigate through the media via the text.

- Select and play parts of the media by highlighting the relevant parts of the text.

- Share parts of the media creating URLs that contain start and end points.

- Make our media more discoverable (via search engines)

- Make our media more accessible.

- More easily visualize the media.

- Combine, embed and easily integrate media (using simple tools)
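One way to carry start and end points in a URL is a temporal fragment of the form `#t=start,end`, the notation used by the W3C Media Fragments URI recommendation. The demos mentioned here may well use a different scheme, so treat the following as a sketch under that assumption; `buildShareUrl`, `parseShareUrl` and the example address are hypothetical.

```javascript
// Hypothetical helpers: encode and decode a clip as a "#t=start,end" fragment.
function buildShareUrl(pageUrl, startSeconds, endSeconds) {
  const url = new URL(pageUrl);
  url.hash = `t=${startSeconds},${endSeconds}`;
  return url.href;
}

function parseShareUrl(href) {
  const match = /^#t=(\d+(?:\.\d+)?),(\d+(?:\.\d+)?)$/.exec(new URL(href).hash);
  if (!match) return null; // No clip in the URL: play from the beginning.
  return { start: Number(match[1]), end: Number(match[2]) };
}

const shared = buildShareUrl("https://example.org/demo.html", 12.5, 18);
console.log(shared); // https://example.org/demo.html#t=12.5,18
console.log(parseShareUrl(shared)); // { start: 12.5, end: 18 }
```

Keeping the clip in the fragment means the page itself can read it on load and seek the player, without any server-side support.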

The Hyperaudio Pad

The Hyperaudio Pad is a tool to allow users to easily assemble spoken word based audio or video from Hypertranscripts.

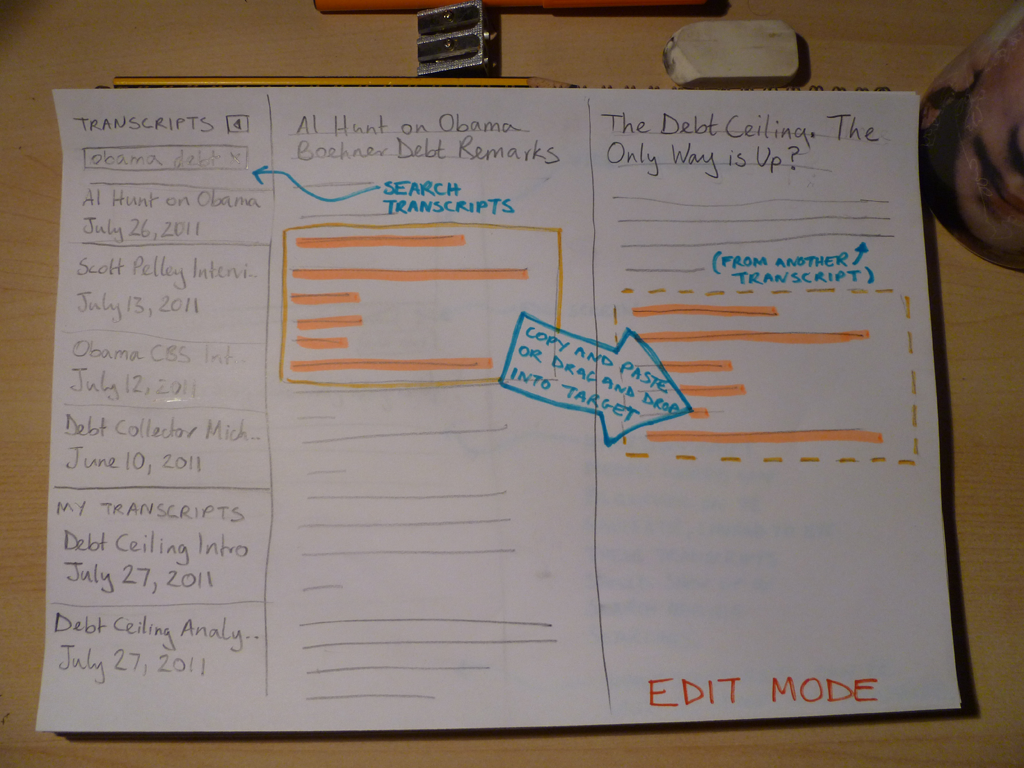

It will allow users to create their media by simply copying and pasting text from existing transcripts into a new transcript. These Hypertranscripts link to the actual media that they transcribe, so when parts of the transcripts are copied the references to their associated media are also copied.

Note that the media itself is not copied, just references to parts of it. The result is a mash-up of video or audio and an associated representative transcript.

The tool will be :

- Web based, cross-browser, cross-platform (work on mobile devices) and open source.

- Simply presented, taking cues from minimalist distraction-free tools such as iA Writer.

- Intuitive and extremely easy to use, taking advantage of the text editing paradigm.

- Extensible – written in such a way that it can easily be built upon.

The interface should be clear and minimal. The user can search for and choose from existing hypertranscripts, open them, play them and copy parts of them to their ‘document’, all from within the same page.

Edit Mode

Playback Mode

Who is this tool for?

- Newsroom journalists who need to very quickly assemble media from different existing sources and perhaps adapt the result as news unfurls.

- Podcasters, citizen journalists and mediabloggers who want a free and easy way of putting together media programs or newscasts.

- Anyone who wants their resulting media programs to include a transcript for increased accessibility and visibility.

- Anybody who is happy for their results to be taken apart, re-combined or referenced by others.

How does it work ?

Hypertranscripts are essentially defined in HTML. The simplest possible example for the use by a tool such as the Hyperaudio Pad could be:

WORD-FROM-THE-TRANSCRIPT

Example:

burgeoning

Note, we can add to this format should we wish to include meta and other data, for example :

WORD-FROM-THE-TRANSCRIPT

(from Julien Dorra’s MetaFragment proposal document)

Transcripts will contain a series of these marked-up words, each individual word containing enough data to describe its associated media and the time in the media at which it occurs.

Note that copying the Hypertranscript into any text editor will result in valid HTML and so can be used in other tools and applications external to the Hyperaudio Pad.

It is likely that the user’s resulting transcript will reference several different pieces of media, and so it may be beneficial for the application to ‘load’ and possibly cache the various media sources in advance for the smoothest possible playback. We can achieve this by parsing the transcript after every save.
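The parse-after-save step could look something like the sketch below. It assumes the transcript has already been read into an array of word objects; the field names `text`, `src` and `startMs`, the function name `mediaToPreload` and the sample data are all hypothetical, and the real markup is not reproduced here.

```javascript
// Hypothetical word shape: { text, src, startMs }.
// Returns one entry per media source, in order of first use, with the
// earliest and latest word times that the transcript references in it.
function mediaToPreload(words) {
  const bySource = new Map();
  for (const word of words) {
    const entry = bySource.get(word.src);
    if (entry === undefined) {
      bySource.set(word.src, { src: word.src, firstMs: word.startMs, lastMs: word.startMs });
    } else {
      entry.firstMs = Math.min(entry.firstMs, word.startMs);
      entry.lastMs = Math.max(entry.lastMs, word.startMs);
    }
  }
  return [...bySource.values()];
}

// Invented sample data for illustration only.
const transcriptWords = [
  { text: "burgeoning", src: "a.ogg", startMs: 4350 },
  { text: "industry", src: "a.ogg", startMs: 4900 },
  { text: "meanwhile", src: "b.ogg", startMs: 120 },
  { text: "growth", src: "a.ogg", startMs: 2100 },
];
console.log(mediaToPreload(transcriptWords).map((m) => m.src)); // [ 'a.ogg', 'b.ogg' ]
```

Knowing the earliest and latest referenced times per source would let a player buffer only the region it needs rather than the whole file.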

Future developments could include a simple scripting language that users can insert between transcripts to make transitions smoother and even the possibility to add background music or sound effects for example:

[fade out over 5 seconds]

or

[background fade in 'threatening music' over 3 seconds]

and then at the bottom

[reference 'threatening music' http://soundcloud.com/some.mp3]

You may say I’m a Dreamer

In order to start using the Hyperaudio Pad to its full potential we first need our media to be transcribed, and to do this we need to make tools that make transcription easier and, if possible, free. However, I believe that hypertranscripts deliver so many benefits that the incentive to transcribe media is high.

The Hyperaudio Pad is just one of many tools that could be built on the underlying Hypertranscript platform. To build this platform we should collaborate with others to :

- establish a standard markup for transcripts

- make the platform easy to build upon and enhance as new technologies become viable

- create a community with a pioneering spirit

- develop an ecosystem to facilitate the creation of a toolkit

During the course of this project it’s been a pleasure to find many other participants interested in the general theme of describing/transcribing media and utilizing the result. Over the last couple of weeks I have been collaborating with Julien Dorra, Samuel Huron, Nicholas Doiron and Shaminder Dulai. The excitement has been palpable and although only communicating virtually it felt like we were sparking off each other.

So I guess at least as far as this dream is concerned, I’m not the only one.

Further Experimentation with Hyper Audio

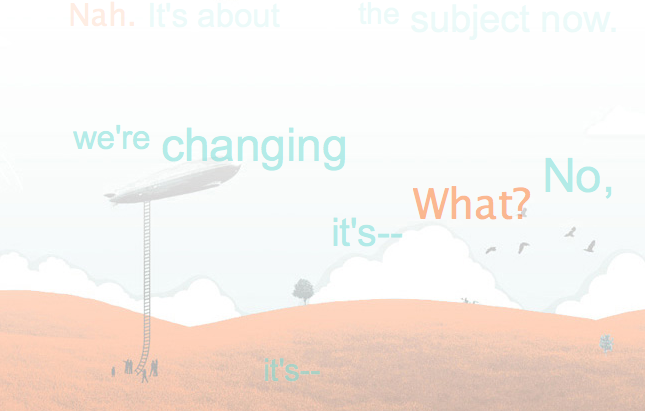

Following the fun we had making the Hyperdisken demo, I was happy to be asked by Mozilla, in collaboration with Radiolab and SoundCloud, to help create another demo to show off the possibilities of hyper audio. This time we had an excellent Radiolab program as audio material and we wanted to get a little more ‘involved’. What was required was an application that would consist of many of the features of the Hyperdisken demo but also integrate deeply with the SoundCloud API, and on top of this something extra, something to catch the eye.

I was fortunate again to work with the ideas-forge known as Henrik Moltke, who collaborated early on with Paul Rouget to produce something he dubbed the ‘Word River’ – a CSS3 manipulated flowing river of words that dynamically picked up content from an HTML transcript. We were also keen to make a pure HTML5 based solution and Paul helped figure out the hooks into the SoundCloud API that would allow us to achieve that. We were also very lucky to be given a great design by the multi-talented Lee Martin, SoundCloud’s experimenter extraordinaire.

So with proof of concept and some visual bling firmly in hand I was tasked with making this baby fly. Luckily I had help. SoundCloud engineers were on hand to answer any questions and crucially we had great support and code contributions from the popcorn.js group. I also managed to talk jPlayer author, all-round JS media guru and, of course, colleague Mark Panaghiston into giving me a hand. So despite the tight deadlines we were pretty much set.

Henrik has already blogged about the ideas and functionality that make up the demo. I want to write a little about the technology used.

Although, as I found out in retrospect, it was not strictly essential, we once again used jPlayer as our audio base: we’re familiar with it and we can move fast using it. It also meant that we could take much of the functionality developed in previous demos and plug it right in. Again, the excellent Popcorn.js was the engine that drove all the time-based display of text and images and dealt with the parsing of data. Steven Weerdenberg, active Popcornista from Seneca College, very kindly wrote a plugin that grabbed, parsed and presented comments (amongst other things) from the track we used hosted on SoundCloud. This is where the Popcorn framework comes into its own as a plugin-oriented architecture, something we took advantage of when we converted both the transcript and the word river functionality into plug-ins.

So where did the data come from? Well again the transcript HTML doubled as the source for richer interaction when used by the word river plugin. I like this approach I have to say. It means you don’t have to be a programmer to come in and immediately understand the content and change it accordingly. I also like the fact that the transcript is a separate HTML file, it pleases the separatist in me and means that it works as a standalone resource. We also used the standard speaker notations as a type of meta-data, the word river plugin hiding these parts for the purpose of display but using them to colour code each speaker’s text.

This part of the transcript:

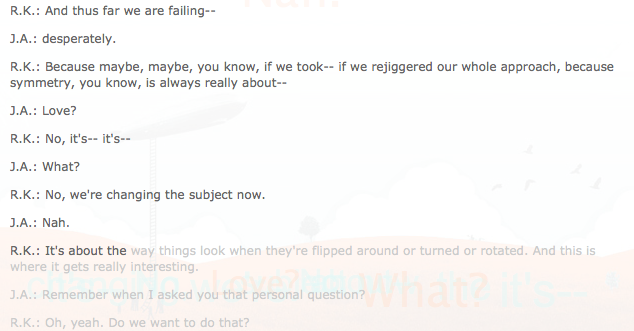

is used to create this ‘word river’:

and this interactive transcript :

Data-wise, everything else came via the SoundCloud API. This included their trademark wave-form, both ogg and mp3 audio sources and all of the comments. We also hijacked the comments to make a crude content management system. The idea was that any comments posted by the Radiolab account with references to images in them were picked up and displayed as images in the main content area, and did not show up as comments on the timeline. If two images were present it meant they were square; if only one, it was ‘widescreen’. A blank image was used to remove images when they were no longer needed.

The last piece of the puzzle, and one we’ve still some polish to apply to (if polishing puzzles makes any sense to you), was getting it all working on the majority of tablets and mobile devices. Since this demo didn’t use Flash this was actually a possibility and we got our web designer Silvia Benvenuti to come out of maternity leave and sort this out for us at the 9th hour, leaving me quite literally holding the baby.

This was a tough gig but all in all I’m happy with what we achieved. Everyone seemed to really enjoy taking part in the process, and I certainly enjoyed bringing it all together. Hopefully it will inspire program makers, designers and developers to come together and explore the limits of what hyper-audio can do. As Inspiral Carpets would say, moo!

Source code for this project and other demos can be found on GitHub.

Follow me on Twitter if you want to hear more about this sort of thing.

Hyper Audio – A New Way to Interact

Recently I had the privilege of working on a very interesting project with a few folk from Mozilla – it’s the type of project I love to work on, as it involves web audio and its deep integration into the general web experience.

Web audio is no longer consigned to being the passive play and pause experience of yesteryear. It has the potential to be much more: it can be a driver of much richer interactions, something Henrik Moltke explores with something he dubs Hyper Audio. The remit of the project was to take various media elements of a radio interview broadcast by Danish Radio station DR (audio, subtitles, transcripts, footnotes etc.) and link these in an intuitive and useful manner.

To say this project was right up my street would be an understatement – this project was in my flat, raiding my fridge and drinking my beerz. I was already fascinated by the concept. I’d been playing about, creating audio-related demos for a couple of years, and in November last year I decided to attend the Mozilla Drumbeat festival and created a demo for the event. The demo was accepted to be exhibited at the science fair on the opening evening and garnered some interesting feedback both on and offline. What it effectively demonstrated was the synchronization and bi-directional control of text and audio.

When Henrik asked me to work on this project, I naturally jumped at the opportunity. Due to time differences, pressing deadlines and the luxury of having a nice quiet office, I stayed up late most nights for a week, happily hacking away, helped out and supported by various Mozillians and the popcorn.js community.

So that’s the back-story, here’s the demo.

Some things to try :

- Switch the audio from English to Danish – it should continue from the same point in Danish, subtitles and the transcript should also change appropriately.

- Try clicking on words in the transcript – the audio should start playing from the corresponding point.

- Highlight a passage of transcript text – this should add a tweetable excerpt to the ‘share’ box. The URL included should just play that part of the audio.

- Clicking the music note icons in the ‘media’ box should take you to the point of the audio where that resource was mentioned.

How did we achieve this? We used popcorn.js to display subtitles, footnotes and other time-related resources. In fact a lot of this was already in place when I picked up the project. I then integrated jPlayer for the audio playback and deeper interaction. Popcorn allows us to associate timings with actions and have these actions triggered by media when they hit said timings. So pretty much perfect for our needs. jPlayer provided a solid abstraction above the native audio API; it allowed me to easily synchronize and switch audio tracks and jump to specific points or sections in the audio, with very few lines of code. Importantly it also protected us from any cross-browser issues and allowed our designers to effortlessly create a custom skin for the player.
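The idea of tying actions to timings can be shown with a tiny stand-in. To be clear, this is not Popcorn.js and does not use its API; `createCueList`, `cue` and `update` are hypothetical names for a sketch of the general technique, where a player reports its current time and any actions whose time has just been crossed are run.

```javascript
// Hypothetical stand-in for a timed-event library: not Popcorn.js's API.
function createCueList() {
  const cues = [];
  let lastTime = -Infinity;
  return {
    // Register an action to run when playback crosses `time` (in seconds).
    cue(time, action) {
      cues.push({ time, action });
    },
    // Call on every time update from the player.
    // Runs, in time order, every cue in the interval (lastTime, now].
    update(now) {
      cues
        .filter((c) => c.time > lastTime && c.time <= now)
        .sort((a, b) => a.time - b.time)
        .forEach((c) => c.action(c.time));
      lastTime = now;
    },
  };
}

const fired = [];
const cueList = createCueList();
cueList.cue(5, () => fired.push("footnote"));
cueList.cue(2, () => fired.push("subtitle"));

cueList.update(1); // nothing is due yet
cueList.update(3); // crosses the 2 second cue
cueList.update(6); // crosses the 5 second cue
console.log(fired); // [ 'subtitle', 'footnote' ]
```

In a browser the `update` call would typically be driven by the media element's time updates, which is the part a library handles for you.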

So this was the control, but what about the media? Well this part was a massive team effort. Henrik managed to provide a very accurately timed transcript. We had hoped to use the subtitles in SRT format but for convenience we parsed them, or rather Scott Downe parsed them, into JSON format.

One of the bigger issues we encountered was that we only had the transcript in English and the timings for the Danish transcript were naturally different. Luckily we had accurately timed Danish subtitles and legendary Bobby Richter on hand to convert the subtitles to individual words complete with their timings, which he did by cunningly interpolating the timing of words based on word length and in-subtitle position. All knocked out in about 10 minutes and in 20 lines of code. It worked surprisingly well, though of course you need to be able to understand Danish to truly tell. We could have probably parsed the subtitles into the transcript on the fly but due to time limitations we made them static.
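The interpolation described above can be approximated as follows. This is a reconstruction of the general idea, not Bobby's code: the function name `interpolateWordTimings`, the subtitle shape `{ start, end, text }` and the sample subtitle are assumptions. Each word gets a share of the subtitle's duration proportional to its length, and a word's start time depends on how many characters come before it.

```javascript
// Hypothetical sketch: spread a subtitle's time span across its words,
// giving each word a share of the duration proportional to its length.
function interpolateWordTimings(subtitle) {
  const words = subtitle.text.split(/\s+/).filter((w) => w.length > 0);
  const totalChars = words.reduce((sum, w) => sum + w.length, 0);
  const span = subtitle.end - subtitle.start;

  let charsSoFar = 0;
  return words.map((word) => {
    // Start time is offset by the fraction of characters already spoken.
    const start = subtitle.start + (span * charsSoFar) / totalChars;
    charsSoFar += word.length;
    return { word, start };
  });
}

// Invented sample subtitle: 12 characters of words over 6 seconds.
const timed = interpolateWordTimings({ start: 10, end: 16, text: "one three four" });
console.log(timed.map((t) => t.start)); // [ 10, 11.5, 14 ]
```

Word length is only a rough proxy for spoken duration, which is why the result is an approximation rather than a true alignment.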

As an aside not directly related to audio, I managed to hack together some code that placed highlighted transcript text in the ‘share’ box and grabbed the timings of the first and last words. From there it was pretty straightforward to make this excerpt tweetable.
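A sketch of the selection step, under the assumption that the transcript is available as an array of timed words: the word shape `{ text, start }`, the function name `excerptFromSelection` and the sample words are hypothetical, and the real demo works on the page's highlighted text rather than on array indices.

```javascript
// Hypothetical word shape: { text, start } with start in seconds.
// Given the indices of the first and last highlighted words, return the
// excerpt text plus the start and end times of the matching audio.
function excerptFromSelection(words, firstIndex, lastIndex, mediaDuration) {
  const selected = words.slice(firstIndex, lastIndex + 1);
  // The excerpt ends where the next word begins, or at the end of the media.
  const end = lastIndex + 1 < words.length ? words[lastIndex + 1].start : mediaDuration;
  return {
    text: selected.map((w) => w.text).join(" "),
    start: selected[0].start,
    end,
  };
}

// Invented sample data for illustration only.
const sampleWords = [
  { text: "web", start: 0 },
  { text: "audio", start: 0.4 },
  { text: "can", start: 1.1 },
  { text: "be", start: 1.3 },
  { text: "more", start: 1.5 },
];
console.log(excerptFromSelection(sampleWords, 1, 3, 2));
// { text: 'audio can be', start: 0.4, end: 1.5 }
```

Ending the excerpt at the start of the following word avoids cutting off the last highlighted word mid-syllable.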

This whole endeavor was very much a group effort. A huge thanks to the popcorn.js team, who made joining their IRC feel like walking into a pub full of friends.

Special credit and thanks then should go to Scott Downe, Bobby Richter, Barry Threw, David Humphrey, Brett Gaylor, Ben Moskowitz, Christian Valentiner, Silvia Benvenuti and of course Henrik ‘Tank’ Moltke whose baby all this was. It was great being part of such a talented team. Awesomesauce indeed.

Mark B
