
Introducing the Hyperaudio Pad (working title)

Last week as part of the Mozilla News Lab, I took part in webinars with Shazna Nessa – Director of Interactive at the Associated Press in New York, Mohamed Nanabhay – an internet entrepreneur and Head of Online at Al Jazeera English and Oliver Reichenstein – CEO of iA (Information Architects, Inc.).

I have a few ideas on how we can create tools to help journalists. I mean journalists in the broadest sense – casual bloggers as well as hacks working for large news organizations. In previous weeks I have been in deep absorption of all the fantastic and varied information coming my way. Last week things started to fall into place. A seed of an idea that I’ve had at the back of my mind for some time pushed its way to the front and started to evolve.

Something that cropped up time and again was that if you are going to create tools for journalists, you should try to make them as easy to use as possible. The idea I hope to run with is a simple tool that allows users to assemble audio or video programs from different sources by using a paradigm that most people are already familiar with. I hope to build on my work on something I’ve called hypertranscripts, which strongly couple text and the spoken word in a way that is easily navigable and shareable.

The Problem

Editing, compiling and assembling audio or video usually requires fairly complex tools. This is compounded by the fact that it’s very difficult to ascertain the content of the media without actually playing through it.

The Solution?

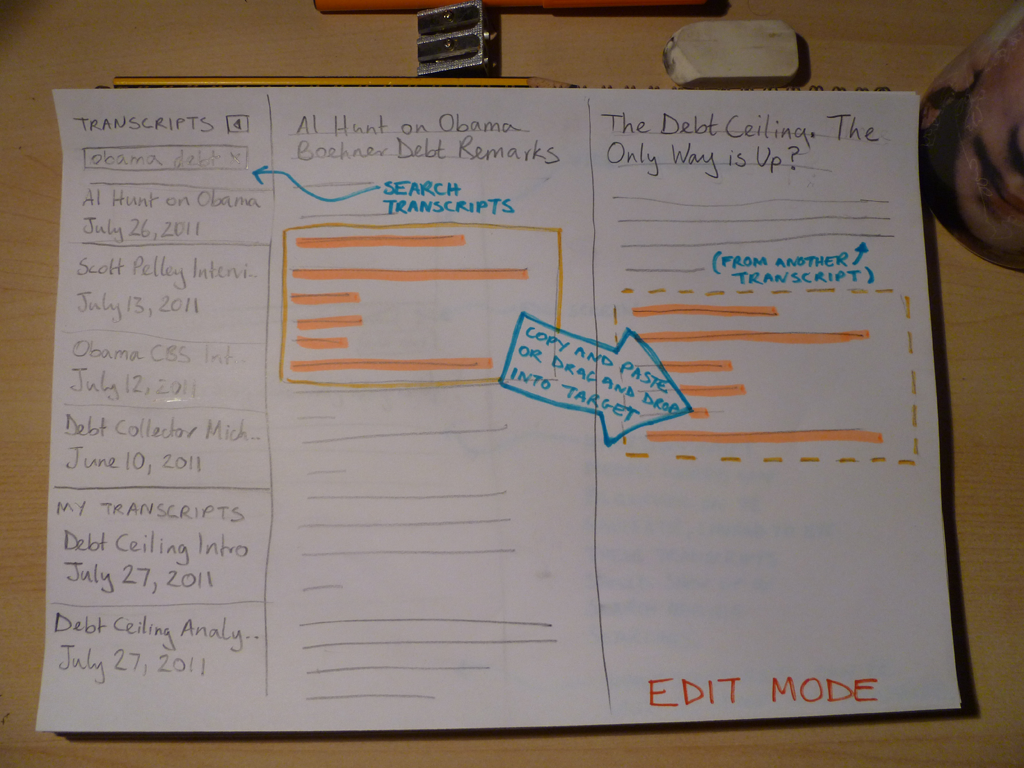

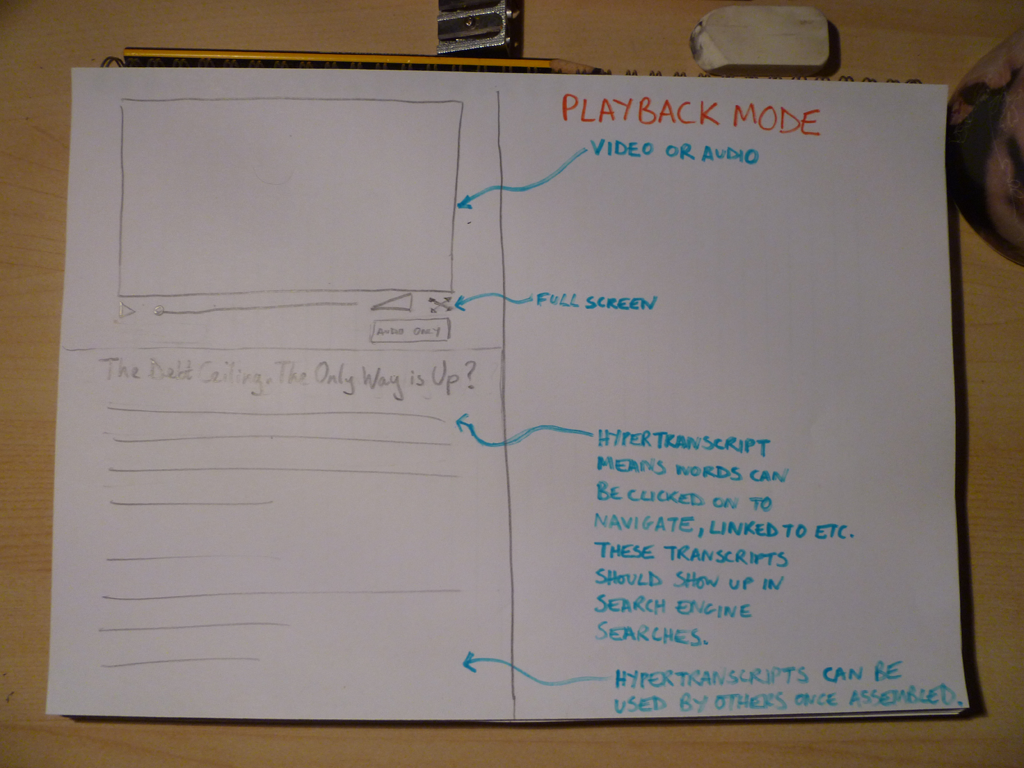

I propose that we step back and consider other ways of representing this media content. In the case of journalistic pieces, this content usually includes the spoken word which we can represent using text by transcribing it. My idea is to use the text to represent the content and allow that text to be copied, pasted, dragged and dropped from document to document with associated media intact. The documents will take the form of hypertranscripts and this assemblage will all work within the context of my proposed application, going under the working title of the Hyperaudio Pad. (Suggestions welcome!) Note that the pasting of any content into a standard editor will result in hypertranscripted content that could exist largely independently of the application itself.
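To make the idea a little more concrete, here is a rough sketch of how pasted words might carry their media with them. Everything in it is an assumption for illustration: the word shape (`text`, `src`, `start`, `end` in seconds), the `toSegments` name and the one-second `maxGap` are hypothetical and not part of any existing hypertranscript format.

```javascript
// Hypothetical word shape: { text, src, start, end } with times in seconds.
// Collapses a pasted run of words into playable segments, one per
// contiguous stretch of the same media file.
function toSegments(words, maxGap) {
  if (maxGap === undefined) maxGap = 1; // assumed: gaps over 1s start a new segment
  const segments = [];
  for (const w of words) {
    const last = segments[segments.length - 1];
    const continues =
      last !== undefined &&
      last.src === w.src &&
      w.start >= last.end &&
      w.start - last.end <= maxGap;
    if (continues) {
      // The word follows on in the same file, so extend the segment.
      last.end = w.end;
      last.text += " " + w.text;
    } else {
      segments.push({ src: w.src, start: w.start, end: w.end, text: w.text });
    }
  }
  return segments;
}
```

A player would then only need to walk the segment list, playing each `src` from `start` to `end` in turn.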

Some examples of hypertranscripts can be found in a couple of demos I worked on earlier this year:

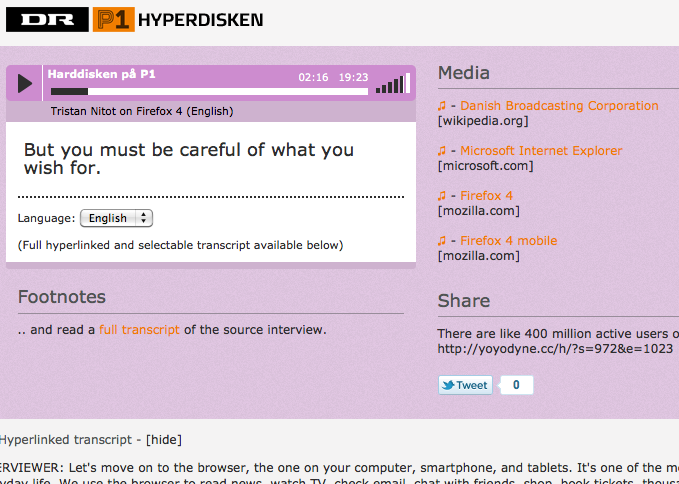

Danish Radio Demo

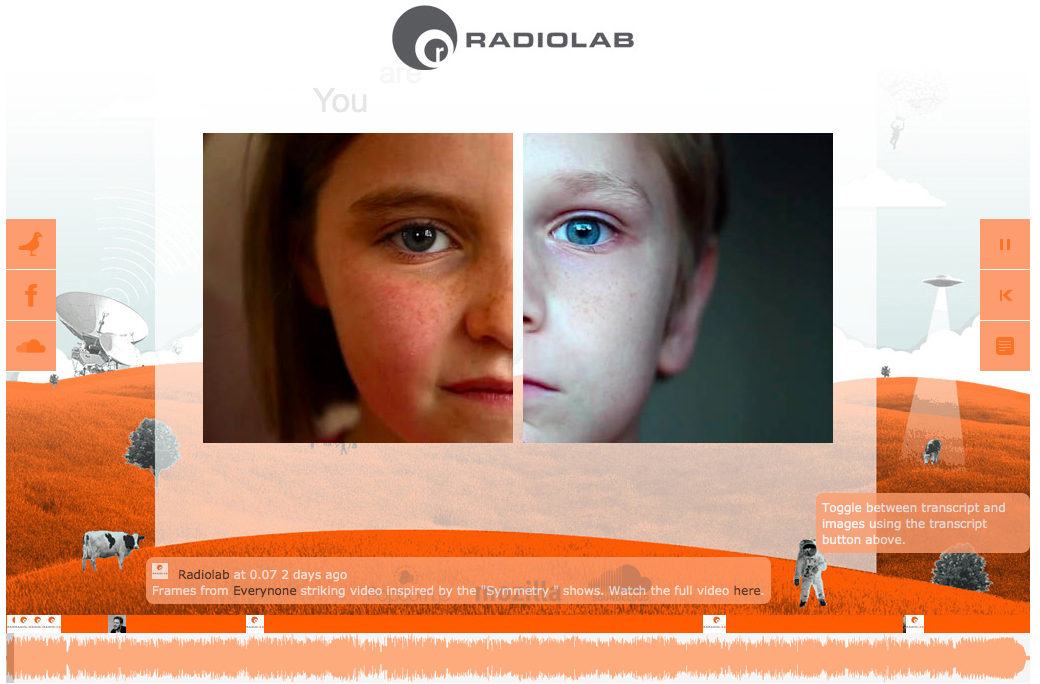

Radiolab Demo

As the interface is largely text based I’m taking a great deal of inspiration from the elegance and simplicity of Oliver’s iA Writer. Here are a couple of rough sketches:

Edit Mode

Playback Mode

Working Together

Last week I’m happy to say that I found myself collaborating with other members of the News Lab, namely Julien Dorra and Samuel Huron, both of whom are working on related projects. These guys have some excellent ideas that relate to meta-data and mixed media that tie in with my own and I look forward to working with them in the future. Exciting stuff!

Further Experimentation with Hyper Audio

Following the fun we had making the Hyperdisken demo, I was happy to be asked by Mozilla, in collaboration with Radiolab and SoundCloud to help create another demo to show off the possibilities of hyper audio. This time we had an excellent Radiolab program as audio material and we wanted to get a little more ‘involved’. What was required was an application that would consist of many of the features of the Hyperdisken demo but also integrate deeply with SoundCloud API, and on top of this something extra, something to catch the eye.

I was fortunate again to work with the ideas-forge known as Henrik Moltke, who collaborated early on with Paul Rouget to produce something he dubbed the ‘Word River’ – a CSS3 manipulated flowing river of words that dynamically picked up content from an HTML transcript. We were also keen to make a pure HTML5 based solution and Paul helped figure out the hooks into the SoundCloud API that would allow us to achieve that. We were also very lucky to be given a great design by the multi-talented Lee Martin, SoundCloud’s experimenter extraordinaire.

So with proof of concept and some visual bling firmly in hand I was tasked with making this baby fly. Luckily I had help. SoundCloud engineers were at hand to answer any questions and crucially we had great support and code contributions from the popcorn.js group. I also managed to talk jPlayer author and all round JS media guru and of course, colleague Mark Panaghiston into giving me a hand. So despite the tight deadlines we were pretty much set.

Henrik has already blogged about the ideas and functionality that make up the demo. I want to write a little about the technology used.

As I found out in retrospect it was not strictly essential, but we once again used jPlayer as our audio base: we’re familiar with it and we can move fast using it. It also meant that we could take much of the functionality developed in previous demos and plug it right in. Again, the excellent Popcorn.js was the engine that drove all the time-based display of text and images and dealt with the parsing of data. Steven Weerdenberg, active Popcornista from Seneca College, very kindly wrote a plugin that grabbed, parsed and presented comments (amongst other things) from the track we used hosted on SoundCloud. This is where the Popcorn framework comes into its own as a plugin-oriented architecture, something we took advantage of when we converted both the transcript and the word river functionality into plugins.

So where did the data come from? Well again the transcript HTML doubled as the source for richer interaction when used by the word river plugin. I like this approach I have to say. It means you don’t have to be a programmer to come in and immediately understand the content and change it accordingly. I also like the fact that the transcript is a separate HTML file, it pleases the separatist in me and means that it works as a standalone resource. We also used the standard speaker notations as a type of meta-data, the word river plugin hiding these parts for the purpose of display but using them to colour code each speaker’s text.
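As a rough illustration of using speaker notations as meta-data, the sketch below strips a leading speaker label from each transcript line and assigns each speaker a colour. It assumes labels look like `NAME:` in capitals at the start of a line; that format, the `colourBySpeaker` name and the palette are my assumptions here, not the actual word river plugin code.

```javascript
// Assumed palette; any list of CSS colours would do.
const PALETTE = ["#1f77b4", "#d62728", "#2ca02c", "#9467bd"];

// Takes transcript lines such as "JAD: Hello." and returns the text with the
// speaker label removed, plus a colour per speaker. Lines without a label
// are attributed to whoever spoke last.
function colourBySpeaker(lines) {
  const colours = new Map();
  let current = null;
  return lines.map(function (line) {
    const match = /^([A-Z][A-Z .'-]*):\s*(.*)$/.exec(line);
    let text = line;
    if (match) {
      current = match[1].trim();
      text = match[2];
    }
    if (current !== null && !colours.has(current)) {
      colours.set(current, PALETTE[colours.size % PALETTE.length]);
    }
    return {
      speaker: current,
      colour: current === null ? null : colours.get(current),
      text: text
    };
  });
}
```

The label never reaches the display, but it still decides the colour, which is the trick described above.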

This part of the transcript:

is used to create this ‘word river’:

and this interactive transcript :

Data-wise, everything else came via the SoundCloud API. This included their trademark waveform, both ogg and mp3 audio sources and all of the comments. We also hijacked the comments to make a crude content management system, the idea being that any comments posted by the Radiolab account with references to images in them were picked up and displayed as images in the main content area, and did not show up as comments on the timeline. If two images were present it meant they were square; if only one, it was ‘widescreen’. A blank image was used to remove images when they were no longer needed.
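The comment hijacking can be sketched roughly as follows. The comment shape (`user`, `body`, `timestamp`) and the function name are hypothetical and are not the SoundCloud API's actual schema; treating anything other than exactly two images as ‘widescreen’ is also my assumption.

```javascript
// Matches image URLs embedded in a comment body (assumed extensions).
const IMAGE_URL = /https?:\/\/\S+\.(?:png|jpe?g|gif)/gi;

// Splits comments into ordinary timeline comments and image cues.
// Only comments from the designated account (cmsUser) can become images.
function splitComments(comments, cmsUser) {
  const timeline = [];
  const images = [];
  for (const c of comments) {
    const urls = c.user === cmsUser ? (c.body.match(IMAGE_URL) || []) : [];
    if (urls.length === 0) {
      timeline.push(c); // a normal comment, shown on the timeline
      continue;
    }
    images.push({
      at: c.timestamp,
      layout: urls.length === 2 ? "square" : "widescreen",
      urls: urls
    });
  }
  return { timeline: timeline, images: images };
}
```

Clearing the content area then needs no special case: a comment pointing at a blank image is just another image cue.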

The last piece of the puzzle, and one we’ve still some polish to apply to (if polishing puzzles makes any sense to you), was getting it all working on the majority of tablets and mobile devices. Since this demo didn’t use Flash this was actually a possibility, and we got our web designer Silvia Benvenuti to come out of maternity leave and sort this out for us at the 9th hour, leaving me quite literally holding the baby.

This was a tough gig but all in all I’m happy with what we achieved. Everyone seemed to really enjoy taking part in the process, and I certainly enjoyed bringing it all together. Hopefully it will inspire program makers, designers and developers alike to come together and explore the limits of what hyper-audio can do. As Inspiral Carpets would say, moo!

Source code for this project and other demos can be found on github

Follow me on Twitter if you want to hear more about this sort of thing.

Hyper Audio – A New Way to Interact

Recently I had the privilege of working on a very interesting project with a few folk from Mozilla – it’s the type of project I love to work on, as it involves web audio and its deep integration into the general web experience.

Web audio is no longer consigned to being the passive play and pause experience of yesteryear. It has the potential to be much more: it can be a driver of much richer interactions, something Henrik Moltke explores with something he dubs Hyper Audio. The remit of the project was to take various media elements of a radio interview broadcast by Danish Radio station DR (audio, subtitles, transcripts, footnotes etc.) and link these in an intuitive and useful manner.

To say this project was right up my street would be an understatement – this project was in my flat, raiding my fridge and drinking my beerz. I was already fascinated by the concept. I’d been playing about, creating audio related demos for a couple of years and in November last year I decided to attend the Mozilla Drumbeat festival and created a demo for the event. The demo was accepted to be exhibited at the science fair on the opening evening and garnered some interesting feedback both on and offline. What it effectively demonstrated was the synchronization and bi-directional control of text and audio.

When Henrik asked me to work on this project, I naturally jumped at the opportunity. Due to time differences, pressing deadlines and the luxury of having a nice quiet office, I stayed up late most nights for a week, happily hacking away and helped out and supported by various Mozillians and the popcorn.js community.

So that’s the back-story, here’s the demo.

Some things to try :

- Switch the audio from English to Danish – it should continue from the same point in Danish, subtitles and the transcript should also change appropriately.

- Try clicking on words in the transcript – the audio should start playing from the corresponding point.

- Highlight a passage of transcript text – this should add a tweetable excerpt to the ‘share’ box. The URL included should just play that part of the audio.

- Clicking the music note icons in the ‘media’ box should take you to the point of the audio where that resource was mentioned.
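The first item in the list, switching language while keeping your place, boils down to remembering the current time, swapping the source and seeking once the new track is ready. The sketch below uses the standard HTML5 media element properties (`currentTime`, `paused`, `src`, `play()` and the `loadedmetadata` event) rather than jPlayer, which is what the demo actually used; `switchTrack` is a hypothetical name.

```javascript
// Swap the audio source but resume from the same position.
// `media` is assumed to behave like an HTML5 <audio> element.
function switchTrack(media, newSrc) {
  const resumeAt = media.currentTime;
  const wasPlaying = !media.paused;

  function onMetadata() {
    media.removeEventListener("loadedmetadata", onMetadata);
    media.currentTime = resumeAt; // seeking is only reliable once metadata has loaded
    if (wasPlaying) media.play();
  }

  media.addEventListener("loadedmetadata", onMetadata);
  media.src = newSrc;
}
```

This only works sensibly if both language versions share the same timeline, which is an assumption worth checking for your own material.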

How did we achieve this? We used popcorn.js to display subtitles, footnotes and other time-related resources. In fact a lot of this was already in place when I picked up the project. I then integrated jPlayer for the audio playback and deeper interaction. Popcorn allows us to associate timings with actions and have these actions triggered by media when they hit said timings. So pretty much perfect for our needs. jPlayer provided a solid abstraction above the native audio API: it allowed me to easily synchronize and switch audio tracks and jump to specific points or sections in the audio, with very few lines of code. Importantly, it also protected us from any cross-browser issues and allowed our designers to effortlessly create a custom skin for the player.
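For anyone wondering what ‘associating timings with actions’ looks like underneath, here is a bare-bones sketch of the idea. It is not Popcorn’s API; `createCueTrack` and the cue shape (`start`, `end`, `onStart`, `onEnd`) are made up for illustration.

```javascript
// Returns an update(time) function that fires onStart when playback enters
// a cue's [start, end) window and onEnd when it leaves.
function createCueTrack(cues) {
  const active = new Set();
  return function update(time) {
    for (const cue of cues) {
      const inside = time >= cue.start && time < cue.end;
      if (inside && !active.has(cue)) {
        active.add(cue);
        cue.onStart();
      } else if (!inside && active.has(cue)) {
        active.delete(cue);
        cue.onEnd();
      }
    }
  };
}
```

In a page you would call `update(audio.currentTime)` from the media element’s `timeupdate` event, so a subtitle or footnote appears and disappears as the audio plays or is scrubbed.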

So this was the control, but what about the media? Well this part was a massive team effort. Henrik managed to provide a very accurately timed transcript. We had hoped to use the subtitles in SRT format but for convenience we parsed them, or rather Scott Downe parsed them, into JSON format.
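Converting SRT to JSON is not much code. The following is a minimal sketch, not Scott’s parser: it assumes well-formed SRT blocks (an index line, a timing line, then one or more text lines) and the names `toSeconds` and `parseSrt` are my own.

```javascript
// "00:01:02,500" -> 62.5 (seconds)
function toSeconds(stamp) {
  const m = /^(\d+):(\d{2}):(\d{2})[,.](\d{3})$/.exec(stamp.trim());
  if (!m) throw new Error("Bad SRT timestamp: " + stamp);
  return Number(m[1]) * 3600 + Number(m[2]) * 60 + Number(m[3]) + Number(m[4]) / 1000;
}

// Turns SRT text into an array of { start, end, text } cues.
function parseSrt(srt) {
  const body = srt.replace(/\r/g, "").trim();
  if (body === "") return [];
  return body.split(/\n{2,}/).map(function (block) {
    const lines = block.split("\n");
    const times = lines[1].split("-->"); // line 0 is the cue index
    return {
      start: toSeconds(times[0]),
      end: toSeconds(times[1]),
      text: lines.slice(2).join(" ")
    };
  });
}
```

`JSON.stringify(parseSrt(srtText))` then gives you the static JSON to ship with the page.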

One of the bigger issues we encountered was that we only had the transcript in English and the timings for the Danish transcript were naturally different. Luckily we had accurately timed Danish subtitles and legendary Bobby Richter on hand to convert the subtitles to individual words complete with their timings, which he did by cunningly interpolating the timing of words (based on word length) and based on their in-subtitle position. All knocked out in about 10 minutes and in 20 lines of code. It worked surprisingly well, of course you need to be able to understand Danish to truly tell. We could have probably parsed the subtitles into the transcript on the fly but due to time limitations we made them static.

Perhaps an aside not directly related to audio, I managed to hack together some code that allowed highlighted transcript text to be placed in the ‘share’ box, and grab the timings of the first and last words, from there it was pretty much straightforward to make this excerpt tweetable.
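Once the first and last highlighted words are known, building the excerpt is simple. In this sketch the word shape (`word`, `start`, `end`), the `buildExcerpt` name and the `#t=start,end` hash are all assumptions for illustration; the demo’s real URL scheme may well differ.

```javascript
// Builds shareable text and a link that plays only the selected passage.
// firstIdx and lastIdx are the indexes of the first and last highlighted words.
function buildExcerpt(words, firstIdx, lastIdx, pageUrl) {
  const picked = words.slice(firstIdx, lastIdx + 1);
  if (picked.length === 0) throw new Error("Nothing selected");
  const text = picked.map(function (w) { return w.word; }).join(" ");
  const start = picked[0].start;
  const end = picked[picked.length - 1].end;
  const link = pageUrl + "#t=" + start + "," + end; // assumed hash format
  return { text: text, link: link, tweet: "\"" + text + "\" " + link };
}
```

On page load the player would read the hash, seek to the start time and stop at the end time.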

This whole endeavor was very much a group effort, a huge thanks to the popcorn.js team, who made joining their IRC feel like walking into a pub full of friends.

Special credit and thanks then should go to Scott Downe, Bobby Richter, Barry Threw, David Humphrey, Brett Gaylor, Ben Moskowitz, Christian Valentiner, Silvia Benvenuti and of course Henrik ‘Tank’ Moltke whose baby all this was. It was great being part of such a talented team. Awesomesauce indeed.

Mark B

P2P Web Apps – Brace yourselves, everything is about to change

The old client-server web model is out of date, it’s on its last legs, it will eventually die. We need to move on, we need to start thinking differently if we are going to create web applications that are robust, independent and fast enough for tomorrow’s generation. We need to decentralise and move to a new distributed architecture. At least that’s the idea that I am exploring in this post.

It seems to me that a distributed architecture is desirable for many reasons. As things stand, many web apps rely on other web apps to do their thing, and this is not necessarily as good a thing as we might first imagine. The main issue being single points of failure (SPOF). Many developers create (and are encouraged by the current web model to create) applications with many points of failure, without fallbacks. Take the Google CDN for jQuery for example – no matter how improbable, you take this down and half the web’s apps stop functioning. Google.com is probably the biggest single point of failure the world of software has ever known. Not good! Many developers think that if they can’t rely on Google, then who can they rely on? The point is that they should not rely on any single service, and the more single points you rely on, the worse it gets! It’s almost like we have forgotten the principles behind (and the redundancy built into) the Internet – a network based on the nuclear-war-tolerant ARPANET.

I believe it’s time to start looking at a new more robust, decentralised and distributed web model, and I think peer-to-peer (P2P) is a big part of that. Many clients, doubling as servers should be able to deliver the robustness we require, specifically SPOFs would be eliminated. Imagine Wikileaks as a P2P app – if we eliminate the single central server URL model and something happens to wikileaks.com, the web app continues to function regardless. It’s no coincidence that Wikileaks chose to distribute documents on BitTorrent. Another benefit of a distributed architecture is that it scales better, we already know this of course – it’s one of the benefits of client-side code. Imagine Twitter as a P2P web app, imagine how much easier it could scale with the bulk of processing distributed amongst its clients. I think we could all benefit hugely from a comprehensive web based P2P standard, essentially this boils down to browser-built-in web servers using an established form of inter-browser communication.

I’m going to go out on a limb here for a moment, bear with me as I explore the possibilities. Let’s start with a what if. What if, in addition to being able to download client-side JavaScript into your cache manifest you could also download server-side JavaScript? After all what is the server-side now, if not little more than a data/comms broker? Considering the growing popularity of NoSQL solutions and server-side JavaScript (SSJS) it seems to me that it wouldn’t take much to be able to run SSJS inside the browser, granted you may want to deliver a slightly different version than runs on the server, and perhaps this is little more than an intermediary measure. We already have Indexed Database API on the client, if we standardise the data storage API part of the equation, we’re almost there, aren’t we?

So let’s go through a quick example – let’s take Twitter again, how would this all work? Say we visit a twitter.com that detects our P2P capabilities and redirects to p2p.twitter.com, after the page has loaded we download the SSJS and start running it on our local server. We may also grab some information about which other peers are running Twitter. Assuming the P2P is done right, almost immediately we are independent of twitter.com, we’ve got rid of our SPOF. \o/ At the same time if we choose to run offline we have enough data and logic to be able to do all the things we would usually do, bar transmit and receive. Significantly, load on twitter.com could be greatly reduced by running both server-side and client-side code locally. To P2P browsers, twitter.com would become little more than a tracker or a source of updates. Also let’s not forget, things run faster locally.

Admittedly the difference between server-side and client-side JS does become a little blurred at this point. But as an intermediary measure at least, it could be useful to maintain this distinction as it would provide a migration path for web apps to take. For older browsers you can still rely on the single server method while providing for P2P browsers. Another interesting beneficial side-effect is that perhaps we can bring the power of ‘view-source’ to server-side code. If you can download it you can surely view it.

The thing that I am most unsure about is what role HTTP plays in all this. It might be best to take an evolutionary approach to such a revolutionary change. We could continue to use HTTP to communicate between clients and servers, allowing the many benefits of a RESTful architecture, restricting the use of a more efficient TCP based comms to required real-time ‘push’ aspects of applications.

It seems to me that with initiatives such as Opera Unite (where P2P and a web server are already being built into the browser) and other browser makers looking to do the same, this is the direction in which web apps are heading. There are too many advantages to ignore: robustness, speed, scalability – these are very desirable things.

I guess what is needed now is some deep and rigorous discussion and if considered feasible, standards to be adopted so all browsers can move forward with a common approach for implementation. Security will obviously feature highly as part of these discussions and so will common library re-use on the client.

If web apps are going to compete with native apps, it seems that they need to be able to do everything native apps do and that includes P2P communication and enhanced offline capability, it just so happens that these two important aspects seem to be linked. Once suitable mechanisms and standards are in place, the fact that you can write web applications that are equivalent or even superior to native apps, using one common approach for all platforms, is likely to be the killer differentiator.

Thanks to @bluetezza, @quinnirill, @getify, @felixge, @trygve_lie, @brucel and @chrisblizzard for their inspiration and feedback.

Further reading : Freedom In the Cloud: Software Freedom, Privacy, and Security for Web 2.0 and Cloud Computing, The Foundation for P2P Alternatives, Social Networking in the cloud – Diaspora to challenge facebook, The P2P Web (part one)

A Simple and Robust jQuery 1.4 CDN Failover in One Line

Google, Yahoo, Microsoft and others host a variety of JavaScript libraries on their Content Delivery Networks (CDN) – the most popular of these libraries being jQuery. But it wasn’t until the release of jQuery 1.4 that we were able to create a robust failover solution should the CDN not be available.

First off, let’s look into why you might want to use a CDN for your JavaScript libraries:

1. Caching – The chances are the person already has the resource cached from another website that linked to it.

2. Reduced Latency – For the vast majority the CDN is very likely to be much faster and closer than your own server.

3. Parallelism – There is a limit to the number of simultaneous connections you can make to the same server. By offloading resources to the CDN you free up a connection.

4. Cleanliness – Serving your static content from another domain can help ensure that you don’t get unnecessary cookies coming back with the request.

All these aspects are likely to add up to better performance for your website – something we should all be striving for.

For more discussion of this issue I highly recommend this article from Billy Hoffman : Should You Use JavaScript Library CDNs? which includes arguments for and against.

The fly in the ointment is that we are introducing another point of failure.

Major CDNs do occasionally experience outages and when that happens this means that potentially all the sites relying on that CDN go down too. So far this has happened fairly infrequently but it is always good practice to keep your points of failure to a minimum or at least provide a failover. A failover being a backup method you use if your primary method fails.

I started looking at a failover solution for loading jQuery 1.3.2 from a CDN some months ago. Unfortunately jQuery 1.3.2 doesn’t recognise that the DOM is ready if it is added to a page AFTER the page has loaded. This made a simple but robust JavaScript failover solution more difficult, as the possibility existed for a race condition to occur with jQuery loading after the page is ready. Alternative methods that relied on blocking the page load and retrying an alternative source if the primary returned a 404 (page not found) error code were complicated by the fact that the Opera browser didn’t fire the "load" event in this situation, so when creating a cross-browser solution it was difficult to move on to the backup resource.

In short, writing a robust failover solution wasn’t easy and would consume significant resource. It was doubtful that the benefit would justify the expense.

The good news is that jQuery 1.4 now checks for the dom-ready state and doesn’t just rely on the dom-ready event to detect when the DOM is ready, meaning that we can now use a simpler solution with more confidence.
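To show why checking the state matters, here is a simplified sketch of the idea. It is not jQuery’s source; `whenDomReady` is a hypothetical helper, and it only treats a `readyState` of "complete" as ready.

```javascript
// Runs callback once the DOM is ready, even if this code is loaded late.
// `doc` is assumed to behave like window.document.
function whenDomReady(doc, callback) {
  if (doc.readyState === "complete") {
    // The page finished loading before we arrived, so the event will
    // never fire again. Check the state instead of waiting forever.
    callback();
    return;
  }
  doc.addEventListener("DOMContentLoaded", function onReady() {
    doc.removeEventListener("DOMContentLoaded", onReady, false);
    callback();
  }, false);
}
```

A library that only listens for the event, as jQuery 1.3.2 did, misses the first branch, which is exactly the late-loading failover case.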

Note that importantly the dom-ready state is now implemented in Firefox 3.6, bringing it in line with Chrome, Safari, Opera and Internet Explorer. This then gives us great browser coverage.

So now in order to provide an acceptably robust failover solution all you need do is include your link to the CDN hosted version of jQuery as usual :

<script type="text/javascript" src="http://ajax.googleapis.com/ajax/libs/jquery/1.4/jquery.min.js"></script>

and add some JS inline, similar to this:

<script type="text/javascript">
  if (typeof jQuery === 'undefined') {
    // The CDN copy did not load, so append a script tag for the local copy.
    var e = document.createElement('script');
    e.src = '/local/jquery-1.4.min.js';
    e.type = 'text/javascript';
    document.getElementsByTagName("head")[0].appendChild(e);
  }
</script>

You are then free to load your own JavaScript as usual:

<script type="text/javascript" src="my.js"></script>

It should be well noted however that the above approach does not "defer" execution of other script loading (such as jQuery plugins) or script-execution (such as inline JavaScript) until after jQuery has fully loaded. Because the fallbacks rely on appending script tags, further script tags after it in the markup would load/execute immediately and not wait for the local jQuery to load, which could cause dependency errors.

One solution that does block is:

<script type="text/javascript">
  if (typeof jQuery === 'undefined') {
    // document.write() blocks scripts lower in the page until this one has loaded.
    document.write('<script type="text/javascript" src="/local/jquery-1.4.min.js"><\/script>');
  }
</script>

Not quite as neat perhaps, but crucially if you use a document.write() you will block other JavaScript included lower in the page from loading and executing.

Alternatively you can use Getify’s excellent JavaScript Loading and Blocking library LABjs to create a more solid solution, something like this:

<script type="text/javascript" src="LAB.js"></script>
<script type="text/javascript">
  function loadDependencies() {
    $LAB.script("jquery-ui.js").script("jquery.myplugin.js").wait(...);
  }

  $LAB
    .script("http://ajax.googleapis.com/ajax/libs/jquery/1.4/jquery.min.js").wait(function () {
      if (typeof window.jQuery === "undefined") {
        // first load failed, load local fallback, then dependencies
        $LAB.script("/local/jquery-1.4.min.js").wait(loadDependencies);
      } else {
        // first load was a success, proceed to loading dependencies.
        loadDependencies();
      }
    });
</script>

Note that since the dom-ready state is only available in Firefox 3.6 and above, <script>-only solutions without something like LABjs are still not guaranteed to work well with Firefox 3.5 and below, and even with LABjs they only work with jQuery 1.4 (and above).

This is because LABjs contains a patch to add the missing "document.readyState" to those older Firefox browsers, so that when jQuery comes in, it sees the property with the correct value and dom-ready works as expected.

If you don’t want to use LABjs you could implement a similar patch like so:

<script type="text/javascript">
  (function (d, r, c, addEvent, domLoaded, handler) {
    // Only patch browsers that lack document.readyState but support addEventListener.
    if (d[r] == null && d[addEvent]) {
      d[r] = "loading";
      d[addEvent](domLoaded, handler = function () {
        d.removeEventListener(domLoaded, handler, false);
        d[r] = c;
      }, false);
    }
  })(window.document, "readyState", "complete", "addEventListener", "DOMContentLoaded");
</script>

(Adapted from a hack suggested by Andrea Giammarchi.)

So far we have talked about CDN failure but what happens if the CDN is simply taking a long time to respond? The page-load will be delayed for that whole time before proceeding. To avoid this situation some sort of time based solution is required.

Using LABjs, you could construct a (somewhat more elaborate) solution that would also deal with the timeout issue:

<script type="text/javascript" src="LAB.js"></script>
<script type="text/javascript">
  // Throws a ReferenceError if jQuery has not been defined.
  function test_jQuery() { jQuery(""); }
  function success_jQuery() { alert("jQuery is loaded!"); }

  var successfully_loaded = false;

  function loadOrFallback(scripts, idx) {
    function testAndFallback() {
      clearTimeout(fallback_timeout);
      if (successfully_loaded) return; // already loaded successfully, so just bail
      try {
        scripts[idx].test();
        successfully_loaded = true; // won't execute if the previous "test" fails
        scripts[idx].success();
      } catch (err) {
        // test failed, so move on to the next source if there is one
        if (idx < scripts.length - 1) loadOrFallback(scripts, idx + 1);
      }
    }

    if (idx == null) idx = 0;
    $LAB.script(scripts[idx].src).wait(testAndFallback);
    var fallback_timeout = setTimeout(testAndFallback, 10 * 1000); // only wait 10 seconds
  }

  loadOrFallback([
    {src: "http://ajax.googleapis.com/ajax/libs/jquery/1.4/jquery.min.js", test: test_jQuery, success: success_jQuery},
    {src: "/local/jquery-1.4.min.js", test: test_jQuery, success: success_jQuery}
  ]);
</script>

To sum up – creating a truly robust failover solution is not simple. We need to consider browser incompatibilities, document states and events, dependencies, deferred loading and time-outs! Thankfully tools like LABjs exist to help us ease the pain by giving us complete control over the loading of our JavaScript files. Having said that, you may find that in many cases the <script>-only solutions are good enough for your needs.

Either way my hope is that these methods and techniques provide you with the means to implement a robust and efficient failover mechanism in very few bytes.

A big thanks to Kyle Simpson, John Resig, Phil Dokas, Aaron Saray and Andrea Giammarchi for the inspiration and information for this post.

Related articles / resources:

3. http://aaronsaray.com/blog/2009/11/30/auto-failover-for-cdn-based-javascript/

4. http://snipt.net/pdokas/load-jquery-even-if-the-google-cdn-is-down/

5. http://stackoverflow.com/questions/1447184/microsoft-cdn-for-jquery-or-google-cdn

6. http://webreflection.blogspot.com/2009/11/195-chars-to-help-lazy-loading.html

7. http://zoompf.com/blog/2010/01/should-you-use-javascript-library-cdns/
