Hyper Audio

The Hyperaudio Pad – Next Steps and Media Literacy

By Mark Boas

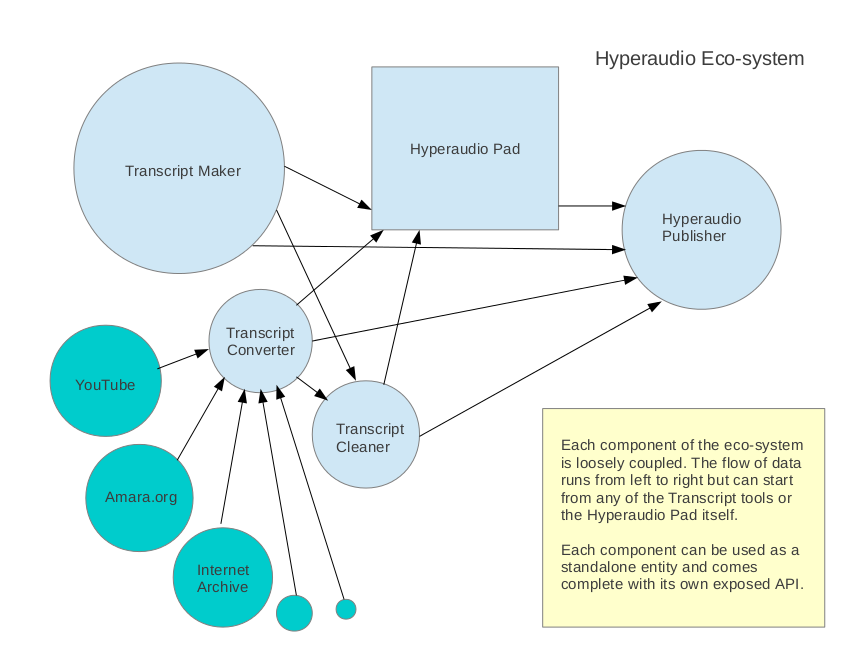

Hyperaudio is a term coined by Henrik Moltke. After discovering we had similar interests we started working on the concept a while back, resulting in a few demos such as the Radiolab demo for WNYC. That was a couple of years ago now. Since then I have been working with my colleague Mark Panaghiston on various Hyperaudio demos and thinking about how we can create a suite of tools I imaginatively call the Hyperaudio Ecosystem. At the center of that ecosystem sits the Hyperaudio Pad.

What is Hyperaudio?

But hey, let’s back up a bit. What is this Hyperaudio of which I speak? Simply put, Hyperaudio aims to do for audio what hypertext did for text – that is to integrate audio fully into the web experience. I wrote more about that here.

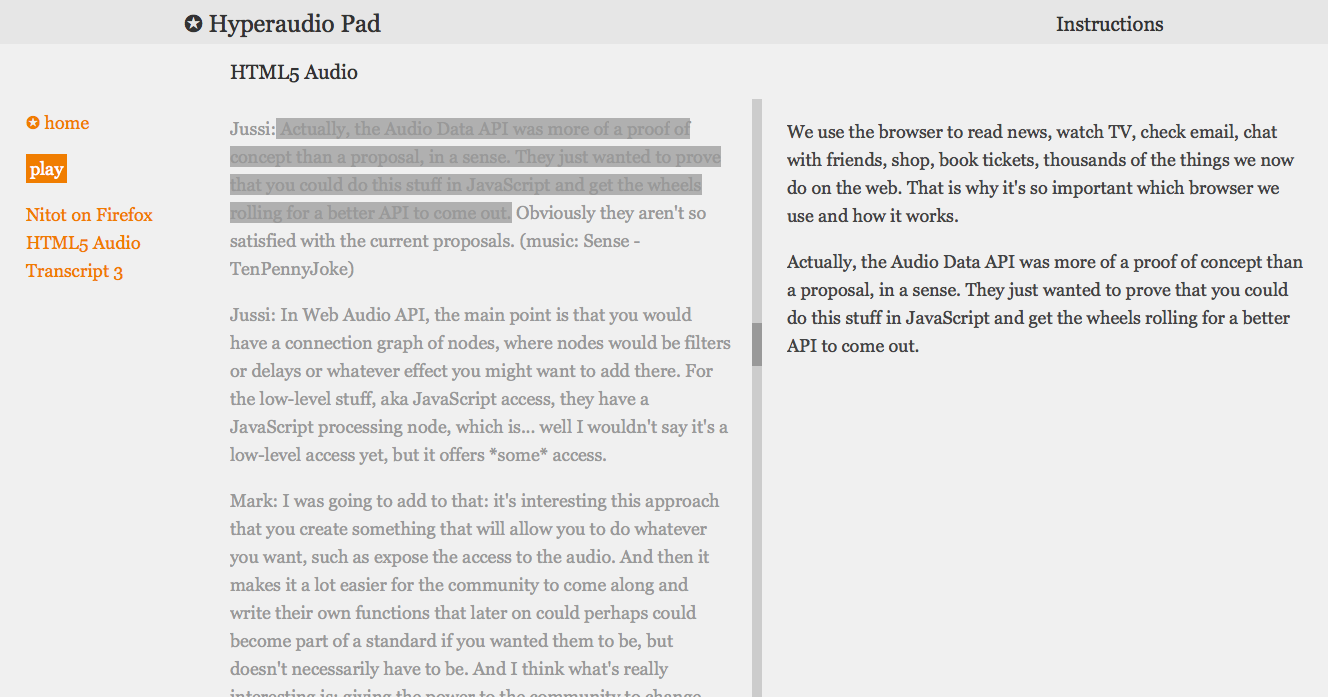

The Hyperaudio Pad is a tool that enables people to build up or remix audio and video using the underlying timed-transcripts. At the moment we’re concentrating on transcribing the spoken word.

Over the last seven days or so the other Mark and I have been working fairly solidly on taking the Hyperaudio Pad to the next level. It’s great to work with Mark P for several reasons. Obviously, as key author of jPlayer, he knows pretty much all there is to know about web-based media. But also, although we are both developers, we are in many ways diametrically opposed. Mark is keen on code quality and doing things properly and I just want to get things out there. Happily the compromise we arrive at when we work together is usually a good one. Mark is coming around to minimal-viable solutions and I have to admit that doing things right can save time in the long run.

In fact there is a world of difference between the earlier versions of the Hyperaudio Pad that I largely developed and the version we have now after a week of collaboration.

Putting our weird Renée and Renato relationship aside for a bit, let’s talk a little about what it is that the Hyperaudio Pad is actually meant to do.

The Power of the Remix

OK, so the key aims here are to encourage the remixing of media in a new and refreshingly easy way and, in doing so, to promote media literacy. On the subject of remixing and its importance in counter-culture I’d encourage you to check out RiP!: A Remix Manifesto and the excellent Everything is a Remix series.

Actually Brett Gaylor of Remix Manifesto fame is doing similar work by leading the effort to build sequencing into Popcorn Maker. We’re approaching the same objective from completely different angles.

Although we use the Popcorn.js library at the heart of the Hyperaudio Pad, we use text to describe and represent media content. This may seem counterintuitive but transcripts can actually be a great way to navigate media content. Transcripts help break media out of its black box, letting us scan and search through it relatively quickly. The Hyperaudio Pad borrows from the word-processor paradigm, allowing people to copy blocks of transcript along with the associated media. It also allows them to describe transitions and effects, and to adjust volumes, using natural language that resembles the editing directions in a script.

Media Literacy

Here is an example of the transitions and effects you can insert between clips. The following direction currently works:

[fade through black over 2 seconds, apply effects nightvision, scanlines, tvglitch]

which is equivalent to:

[fade black 2 apply nightvision scanlines tvglitch]

but we want to encourage the user to be descriptive as these directions are both human and computer readable.

Other effects include: ascii, colorcube, emboss, invert, noise, ripple, scanlines, sepia, sketch, vignette.
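To make the equivalence between the descriptive and the terse form concrete, here is a minimal sketch of how such a direction might be normalised. It is not the Pad's actual parser: the filler-word list, the `parseDirection` name and the shape of the result are all assumptions made for illustration.

```javascript
// Hypothetical sketch: reduce a bracketed direction to its essential tokens.
// The filler-word list and the result shape are assumptions, not the Pad's real grammar.
const FILLER = new Set(["through", "over", "seconds", "second", "effects", "effect"]);

function parseDirection(text) {
  // Strip the surrounding brackets, treat commas as spaces, and drop filler words.
  const tokens = text
    .trim()
    .replace(/^\[|\]$/g, "")
    .replace(/,/g, " ")
    .split(/\s+/)
    .filter((t) => t.length > 0 && !FILLER.has(t.toLowerCase()));

  const result = { transition: null, colour: null, duration: null, effects: [] };
  const applyAt = tokens.indexOf("apply");
  const head = applyAt === -1 ? tokens : tokens.slice(0, applyAt);
  if (applyAt !== -1) result.effects = tokens.slice(applyAt + 1);

  // Assumed order before "apply": <transition> [colour] [duration in seconds]
  for (const token of head) {
    if (/^\d+(\.\d+)?$/.test(token)) result.duration = Number(token);
    else if (result.transition === null) result.transition = token;
    else result.colour = token;
  }
  return result;
}

const verbose = parseDirection(
  "[fade through black over 2 seconds, apply effects nightvision, scanlines, tvglitch]"
);
const terse = parseDirection("[fade black 2 apply nightvision scanlines tvglitch]");
console.log(JSON.stringify(verbose) === JSON.stringify(terse));
```

Because the filler words are simply ignored, both spellings reduce to the same transition, colour, duration and effect list.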

This system will evolve of course, and we hope to allow stuff like:

[change volume to 50% over 3 seconds, change brightness to 20% over 2 seconds, play track 'love me do' from 30 seconds to 35 seconds, change track volume from 0 to 80% over 2 seconds]

(stuff like that)

Transcript snippets, combined with text-described transitions and effects, act as a kind of source code for the media.

Weak References

Cuts are nothing more than weak references to media files, with start and end times, and effects are simply layered over the top in real time. This means we can make additive remixes as easily as subtractive ones. That is to say, somebody could take a remix and enhance it by peeling back a specific snippet to reveal more, thereby adding to the piece.

In fact a key goal here is to allow remixing of remixes.
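As a rough illustration of why additive remixes come cheaply, here is a sketch that treats a remix as a list of cuts. The field names (`src`, `start`, `end`, `effects`), the file names and the `peelBack` helper are hypothetical and chosen only for this example.

```javascript
// Hypothetical data shape: a remix is a list of cuts, each a weak reference to media.
// Nothing is copied from the media files; only names, times and effect labels are stored.
const remix = [
  { src: "interview.webm", start: 12.0, end: 18.5, effects: ["sepia"] },
  { src: "speech.webm", start: 40.0, end: 44.0, effects: [] },
];

// Additive remix: widen one cut to reveal more of the source media.
// Returns a new list and leaves the original remix untouched.
function peelBack(cuts, index, extraBefore, extraAfter) {
  return cuts.map((cut, i) =>
    i === index
      ? { ...cut, start: Math.max(0, cut.start - extraBefore), end: cut.end + extraAfter }
      : cut
  );
}

// Total playing time of a remix in seconds.
function duration(cuts) {
  return cuts.reduce((total, cut) => total + (cut.end - cut.start), 0);
}

const extended = peelBack(remix, 0, 2, 1.5);
console.log(duration(remix), duration(extended));
```

Since a remix of a remix is just another list of the same kind of references, the original media never needs to be re-encoded.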

So, a bit more about the technology powering this. Effects are handled by Brian Chirls’ seriously cool Seriously.js – a comprehensive video compositing library. The great thing about Seriously.js is that it is a modular system. We can add effects (and load their code dynamically) as we go. I also look forward to using some of our experience gained with the Web Audio API to apply realtime audio effects. The wider vision is to make a system that will take raw media, transcribe it and allow you to do all the things you could do with a professional video editing package.

We’ve got plenty more to do, and certainly some bugs to iron out, but we’re steadily approaching beta. A lot has been restructured behind the scenes, giving us a more stable platform that we can build on and enhance more quickly. A question I posed and answered on Twitter: what do you call something between alpha and beta? Alpha.

Going Forward

We still plan to add the following features in the near future:

- alter brightness, contrast, saturation etc

- control and fade volume

- add audio track that can be played simultaneously or sequentially

- add effects/transitions in paragraph etc

- allow editing right down to the word, delete words

- allow fine tuning of start and end points

- allow the playback and sharing of fullscreen video remixes with an option to see the underlying ‘source code’.

If you want to play around yourself you can find the latest demo here.

Source code is on GitHub (I should get around to adding an MIT license or something).

Or you can watch a screen recording of where we were at as of yesterday. Apologies for the choppy quality, oh and the background music, it seemed a good idea at the time.

Breaking Out – Web Audio and Perceptive Media

By Mark Boas

Sometimes when we take on projects we don’t really know what we’re letting ourselves in for. To fully know, we’d spec things out to the nth degree, and who wants to or has time for that? Well, people like NASA do, but we don’t.

When we were given the opportunity to work on something called Perceptive Media all I saw was a colourful but amorphous form ahead. Although it was explained to me (as well as it could be), I still didn’t have a real clue of what it would end up being and, crucially, what was involved in making it a reality.

But hey, it’s the BBC, right? You don’t often get to work with the BBC – you know, that BBC you spent so much time watching and listening to when you were growing up. The same BBC who make Doctor Who? That BBC!

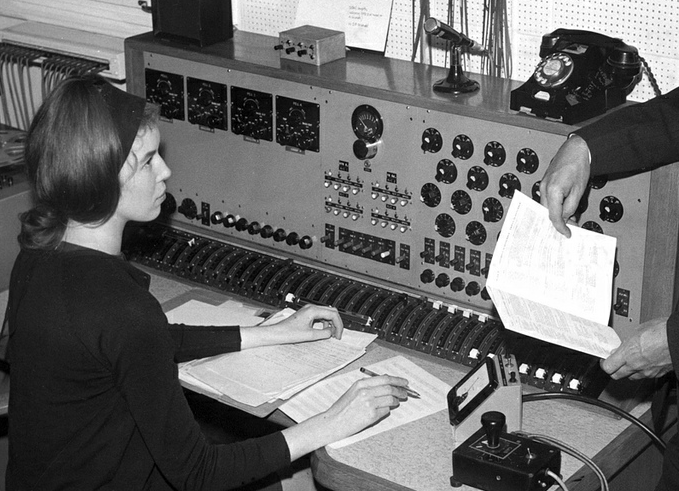

Time-lords aside (or not actually, as the case may be), being a bit of an audio-geek I’ve always been fascinated by the BBC’s audio output, especially their Radiophonic Workshop and, in particular, Delia Derbyshire’s work. And this was an audio gig coming from the R&D department of the same organisation. How could we turn it down?

So I jumped at the chance without really knowing what lay in store, which incidentally is very unfair on my colleague who ended up doing the lion’s share of the work.

The brief went something like this: We want to create a web-audio based demo that will adjust its content to the listener, based on information we can ascertain about them. Oh and we want it to sound as natural as possible so that the listener may not suspect that the content is being tailored. I soon found out that this involved generating audio on-the-fly and applying convolution reverb and other effects so that audio sounded natural in various environments. What this all meant is that we needed to use an advanced audio API. Now I’ve had fun investigating advanced audio APIs before. They differ from the standard audio APIs by being designed to allow you not only to play audio files, but also to generate and alter audio.

This is exciting because we are finally getting around to doing stuff with audio that we have been doing with text for years. It also crosses over with something I’m looking at called Hyperaudio.

Experimentation

So with this cross-over in mind, I felt I could manage to find the time to experiment and create some proof of concept demos and this led me to try out the following libraries:

The Speak.js demo pretty much demonstrates what it can do. You put text in and speech comes out. The library itself is ported from eSpeak using something called Emscripten and actually allows you to generate audio pretty much in real-time by constructing a data URI in WAV format.
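To give a feel for what "constructing a data URI in WAV format" involves, here is a small sketch that wraps raw samples in a standard 44-byte PCM WAV header and base64-encodes the result. It is not Speak.js's code: the `pcmToWavDataUri` name is made up, and 16-bit mono is an assumption chosen to keep the example short.

```javascript
// Sketch: wrap 16-bit mono PCM samples in a WAV header and return a data URI.
// This only illustrates the general technique; it is not taken from Speak.js.
function pcmToWavDataUri(samples, sampleRate) {
  const dataBytes = samples.length * 2; // 2 bytes per 16-bit sample
  const buffer = new ArrayBuffer(44 + dataBytes);
  const view = new DataView(buffer);
  const writeText = (offset, text) => {
    for (let i = 0; i < text.length; i++) view.setUint8(offset + i, text.charCodeAt(i));
  };

  writeText(0, "RIFF");
  view.setUint32(4, 36 + dataBytes, true);  // size of everything after this field
  writeText(8, "WAVE");
  writeText(12, "fmt ");
  view.setUint32(16, 16, true);             // fmt chunk size
  view.setUint16(20, 1, true);              // format 1 = uncompressed PCM
  view.setUint16(22, 1, true);              // one channel (mono)
  view.setUint32(24, sampleRate, true);
  view.setUint32(28, sampleRate * 2, true); // bytes per second
  view.setUint16(32, 2, true);              // bytes per sample frame
  view.setUint16(34, 16, true);             // bits per sample
  writeText(36, "data");
  view.setUint32(40, dataBytes, true);

  // Convert floats in the range -1..1 to signed 16-bit integers.
  samples.forEach((s, i) => {
    const clamped = Math.max(-1, Math.min(1, s));
    view.setInt16(44 + i * 2, Math.round(clamped * 32767), true);
  });

  const bytes = new Uint8Array(buffer);
  let binary = "";
  for (const b of bytes) binary += String.fromCharCode(b);
  const base64 =
    typeof btoa === "function" ? btoa(binary) : Buffer.from(bytes).toString("base64");
  return "data:audio/wav;base64," + base64;
}

// A 440 Hz tone, a tenth of a second long, sampled at 8 kHz.
const tone = Array.from({ length: 800 }, (_, n) => Math.sin((2 * Math.PI * 440 * n) / 8000));
const uri = pcmToWavDataUri(tone, 8000);
```

In a browser the resulting string can be handed to an audio element, for example `new Audio(uri).play()`.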

We wanted it to work well on all browsers that support some advanced audio API. Firefox’s Audio Data API adopts a more low-level approach but, in theory at least, you should be able to do anything that WebKit’s Web Audio API does, using raw JavaScript. AudioLib.js provides the libraries to do this and also abstracts the differences between the two APIs so that you can write one set of code for both.

Unfortunately time and budget restrictions meant that we couldn’t create the version we wanted to make – a version that would work similarly in all browsers that provided an advanced audio API. As it seems that the future W3C standard for advanced audio will be based on the Web Audio API we decided to concentrate on that.

Personalisation

The data we use to personalise the broadcast comes from a number of sources. The main differentiator is the listener’s location, which we obtain via the Geolocation API and use to determine local weather, radio streams and landmarks, which are then subtly inserted into the audio stream. The only restriction is that you must be in the UK to really encounter the differences. This is partly because we use some BBC resources that are only available in the UK, but also so that we can keep the data manageable for what, after all, is just a demo.

The second source of information about the listener came from a slightly more sinister place. For fun I’d been working with a good friend of mine Matteo Spinelli on a project called Underpants and while looking at the issue of browser traceability across websites we figured out how to determine which social networks a browser is logged into. Cross-over struck once more and we used this technique to personalise the part where our outdoor-challenged hero is urged to log out of her favourite social network and leave the apartment.

Try the demo!

Advanced Web Audio

So what changes between the version of the broadcast that uses the Web Audio API and the fallback version that doesn’t? Well there are a number of factors, some of them quite subtle. Speak.js outputs a robot style voice which is fine for our use as the electronic voice of the lift. But we wanted to make sure it would fit in properly to the various environments in which it was set. To do this we created something called a convolution reverb. In short, this reverb allows us to apply the right sort of audio ambiance to a sound. So if a sound is coming from a lift we apply a lift type echo. We also apply the same ‘echo’ to the streamed radio broadcast that is played at a certain point in the broadcast.

The fact that we are using an advanced audio API also enables us to add various other effects to other pieces of audio. However we soon found that we needed to be sensible with our audio design, since convolutions with the Web Audio API do take up a fair amount of CPU. At one point during development a bug caused a unique convolution to be created for each sound, and playback started failing at around the 14th.

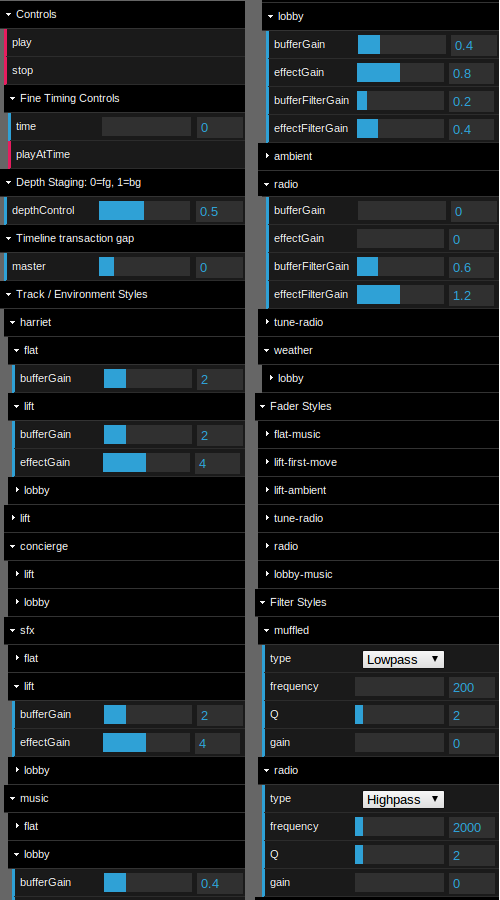

We also made use of audio filters. For example, the radio podcast has a high-pass filter applied to it, which makes it sound ‘tinny’. Another example is when Harriet opens her apartment door at the start, where we apply both filters and faders. The Web Audio API uses a node-based approach, which means that you can feed the results of one effect into the next, so we can apply filters, faders and convolutions to any audio source. To achieve all of this we made heavy use of the Web Audio API’s AudioParam, which allows nearly any attribute to be changed using handy linear transform effects – we used this to fade in and out, or cross-fade between filtered and unfiltered outputs.
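The node-based approach is easier to picture with a little code. The sketch below is not lifted from the demo. It uses the method names from the current Web Audio API specification (the API has been renamed in places since the demo was written), and the function names, the 2000 Hz cutoff and the fade time are illustrative assumptions. One convolver is created per environment and shared, since each convolution is costly.

```javascript
// Sketch of a node graph: source -> high-pass filter -> fader -> shared convolver -> output.
// Function names and numeric values are illustrative, not the demo's settings.

// One convolver per acoustic environment, created once and reused by every sound.
function createEnvironment(ctx, impulseResponse) {
  const convolver = ctx.createConvolver();
  convolver.buffer = impulseResponse; // an AudioBuffer holding the room's impulse response
  convolver.connect(ctx.destination);
  return convolver;
}

// Route a source through a 'tinny' filter and a fade-in, then into the environment.
function addTinnySource(ctx, source, environment, fadeSeconds) {
  const filter = ctx.createBiquadFilter();
  filter.type = "highpass";
  filter.frequency.value = 2000; // cutting the low end gives the small-radio sound

  const fader = ctx.createGain();
  // AudioParam automation: ramp the gain linearly from silence to full volume.
  fader.gain.setValueAtTime(0, ctx.currentTime);
  fader.gain.linearRampToValueAtTime(1, ctx.currentTime + fadeSeconds);

  source.connect(filter);
  filter.connect(fader);
  fader.connect(environment);
  return { filter, fader };
}
```

In a browser, `ctx` would be an `AudioContext`, `source` something like the result of `ctx.createMediaElementSource(audioElement)`, and `impulseResponse` a decoded recording of the space being imitated.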

So the Web Audio API version applies filters, faders and convolutions to the audio whereas the standard HTML5 audio versions do not. That’s not to say that given enough time we couldn’t have achieved the same effect using the Audio Data API included in Firefox. But since the new audio standard is slated to be largely based on the Web Audio API it was decided for the purposes of this demo to concentrate our energy in this area.

Once we’d got the core of the functionality working, we set to work on creating a control panel to allow us to tweak every single one of the volumes, filters, faders and convolutions.

We wanted to be able to demonstrate to editors of audio how we could tweak pace, reverb and sound-effects in real-time. Although this required a complete code refactor, we hope that the fact that the whole thing is pretty much customisable makes this a powerful demo, and one that will be useful to others, as well as ourselves, who are dabbling in this area.

So this is a good time to mention that all the source-code is open source and that you can grab it from the following GitHub location https://github.com/happyworm/PerceptiveMedia.

Conclusions

I think this was definitely an interesting and worthwhile experiment. However as its aim was to be subtle, it purposely does not make immediately clear the potential of the technology. Technically, what we achieved turned out to be a kind of audio framework that allows audio to be created and tweaked as it’s being played. This is useful to producers of audio to see how effects and timings can alter the experience and is especially useful for applications such as games where perhaps you want to give your sound-effects context. I also feel that these techniques could be used for applications such as dynamic story-telling. My daughter, all too often, asks me to tell her a story featuring robots, dinosaurs and goblins and all too often I fall back on the same old principles and formulas of children’s story-telling, the rule of three and so on. Post happy-ever-after she often wants me to add to the story, getting me to fill in or clarify some of the details. It’s not a huge stretch to imagine that we could create dynamic storytelling applications for kids. A pinch of AI here, some personalisation there and a heavy dose of randomness might just be enough to keep them happy for a bit.

So there we have it, one small step closer to the old Star Trek computer (Did Doctor Who’s have a voice interface? I forget). We’ve already seen the application of voice input with software such as Siri. It shouldn’t be too much longer until audio interfaces start to become commonplace. With so much current emphasis on the visual I think this could be quite refreshing.

Epilogue

Question. What’s harder to debug than an intermittent bug? Answer. An intermittent bug that only manifests itself when you deploy to the server. Crazy I know and totally unexpected to us and for this reason you may see issues when running on Firefox (but not Opera). Being supporters of both Mozilla and Firefox we were much dismayed by this bug and spent a significant amount of time trying to get to the bottom of it. Unfortunately due to its nature we were only able to put in a loose bug-report. If anybody wants to help us solve this issue please feel free to take a look, even if it’s just to download the application from GitHub and verify that it works locally for you.

Thanks then to Ian Forrester and Tony Churnside for the opportunity to work with them and their team at BBC R&D, also of course Sarah Glenister for the excellent script and Angie Chan for the great artwork. Thanks also to Jussi Kalliokoski for helping us work with AudioLib.js. But most of all I want to thank my colleague Mark Panaghiston for working tirelessly behind the scenes, not only on the significantly challenging audio aspects of the project but also for going above and beyond in integrating the visual aspects and even sourcing and setting up the hosting.

Related Articles

Writing for Perceptive Media

Illustrations for BBC R&D’s Perceptive Media Demo: Breaking Out

What is Perceptive Media?

BBC demonstrates revolutionary ‘perceptive media’

Perceptive Media Launch at Social Media Cafe Manchester

The BBC unveils its first ‘Perceptive Media’ experiment – and you can try it now

What is Perceptive Media?

Hyperaudio at the Mozilla Festival

This year has run contrary to previous years of my life in the sense that it seems to have lasted much longer than the year before. This may have something to do with a small addition to the family that arrived in March and the consequent ramping up of waking hours, but I prefer to think it’s because things are moving so quickly in that wonderful place we call the worldwide web, that it seems that too much has happened to compress into a year.

It was only a year ago, for example, that I started tinkering with jPlayer to couple text and audio and was asked to show off a very small demo at the first Mozilla Festival in Barcelona.

A few months later I was introduced to and became involved with something known as Hyperaudio and was given the opportunity to create a couple of proof-of-concept demos: Denmark Radio’s Hyperdisken Demo and the Radiolab/Soundcloud collaboration (a shout-out to Henrik Moltke for doing much of the groundwork for these demos and my colleagues at Happyworm, without whom they just wouldn’t have been possible).

Further on through the arc of this epic year, I took part in the Mozilla Knight Journalism challenge where I was encouraged to research and blog some ideas on a tool I called the Hyperaudio Pad. Happily I was flown over to Berlin with other like-minded MoJo peeps, for a week of intense discussion, collaboration and hacking and managed to get the first semblance of an actual product out of the door.

Which brings me in a round-about way to this year’s Mozilla Festival, where I will again be demoing and chatting about the coupling of text and media and the other things that Hyperaudio is (or could be) about. All this will take place at the Science Fair on Friday, 4th of November and at a Hyperaudio Workshop / Design Challenge on Saturday, 5th.

The Science Fair will be all about demos, chatting to people interested in the general Hyperaudio concept and making sure I don’t spill my drink over my laptop. Meanwhile in the workshop we hope to put the Hyperaudio Pad through its paces and actually make something with it, while gathering ideas and maybe hacking on some of them into the bargain. It should be a fun mix of hacks, hackers, designers, audio buffs and basically anybody curious enough to be involved. The session will be fairly free-flowing but the plan so far is to:

- Briefly chat about and demo the Hyperaudio concept, what it can do and with whom we can collaborate.

- Break out into groups to come up with new ideas, applications and designs.

- Reconvene and talk about the groups’ ideas.

- Break out into groups to work on these ideas, whether it is creating a programme, UI mockups, story-boarding functionality or even developing small demos.

- Create something we can show other people – hopefully a program created with the Hyperaudio Pad but also some new ideas.

Hopefully we can cram this all into 3 hours. Folk from the BBC, Universal Subtitles, Sourcefabric and other cool people are planning to be in attendance, which should make for some interesting crossovers and fantastic opportunities to consult and maybe even influence organisations in a position to use the stuff we’re making. Additionally the BBC are providing us with some fantastic media to work with.

All will be revealed over the weekend. Book your place and get your eyes and ears ready to be part of the Hyperaudio experience.

The Hyperaudio Pad – a Software Product Proposal

Imagine

Imagine that making your own video or audio news programs was really easy.

Imagine if you could pull together news stories from various sources, combine them and publish the results in minutes.

Imagine that the story you made came complete with a transcript, was fully accessible and could be picked up by search engines.

Imagine that you could easily embed your story into a web page and that others could incorporate your story into new stories.

Imagine that viewers of your story could share any part of it in seconds.

Imagine all this and you are imagining the functionality of the Hyperaudio Pad.

Listen to a use case.

Watch the screencast.

Hypertranscripts

Currently, putting together a media program will probably involve some fairly complex audio/video editing software. The result is a fairly ‘static’ representation. The whole process is time consuming, wasteful and inflexible.

By changing the way we think about media, we can create a new way of manipulating it. The Hyperaudio Pad allows quick manipulation of media and takes advantage of a form of media representation referred to as a hypertranscript.

When we tell a story we invariably use the spoken word. The spoken word can be transcribed and, once transcribed, can be used as an accurate reference to the media it is associated with.

Hypertranscripts are part of a broader concept dubbed Hyperaudio by Henrik Moltke. They are transcripts hyper-linked to the media they represent, a type of fine-grained subtitle. They are separate entities from the media they describe, existing as word-level aligned references expressed in HTML.

Tools and services exist to create Hypertranscripts, and word-level timing can even be approximated from subtitles. In the past I have used libraries such as Popcorn.js and jPlayer, and data from the Universal Subtitles project.

The good news is that Hypertranscripts already exist. Here are a few examples:
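One simple way to approximate word-level timing from a subtitle is to spread the cue's time span across its words in proportion to their length. The sketch below does just that. Weighting by character count is an assumption made for illustration (real speech is not that regular), and the cue shape and function name are hypothetical.

```javascript
// Sketch: estimate a start time for each word in a subtitle cue.
// The cue's duration is shared out in proportion to each word's character count.
function approximateWordTimings(cue) {
  const words = cue.text.trim().split(/\s+/).filter((w) => w.length > 0);
  const totalChars = words.reduce((sum, w) => sum + w.length, 0);
  if (totalChars === 0) return [];

  const span = cue.end - cue.start;
  let elapsedChars = 0;
  return words.map((word) => {
    const start = cue.start + (span * elapsedChars) / totalChars;
    elapsedChars += word.length;
    return { word, start: Math.round(start * 1000) }; // start time in milliseconds
  });
}

const timings = approximateWordTimings({ start: 10, end: 12, text: "the burgeoning web" });
console.log(timings);
```

Longer words get a larger share of the cue, so "burgeoning" is given more time than "the".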

Screencast [youtube] of the Danish Radio Demo

Screencast [youtube] of the Radiolab Demo.

Quick and simple demo I put together.

Demos by others include: Minnesota Public Radio Demo, Voxallead News and Liris Interactive Transcript

Using Hypertranscripts we can:

- Navigate through the media via the text.

- Select and play parts of the media by highlighting the relevant parts of the text.

- Share parts of the media creating URLs that contain start and end points.

- Make our media more discoverable (via search engines)

- Make our media more accessible.

- More easily visualize the media.

- Combine, embed and easily integrate media (using simple tools)
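Sharing part of a piece of media can be as light as adding a time range to a link. The sketch below uses the temporal syntax from the W3C Media Fragments URI recommendation (`#t=start,end`, in seconds). The function names and the example URL are made up for illustration.

```javascript
// Sketch: build and read a shareable link that carries start and end points,
// using the Media Fragments temporal syntax (#t=start,end in seconds).
function buildShareUrl(mediaUrl, start, end) {
  const url = new URL(mediaUrl);
  url.hash = `t=${start},${end}`;
  return url.toString();
}

// Returns { start, end } in seconds, or null if the link has no usable time range.
function readShareUrl(shareUrl) {
  const match = /^#t=(\d+(?:\.\d+)?),(\d+(?:\.\d+)?)$/.exec(new URL(shareUrl).hash);
  return match ? { start: Number(match[1]), end: Number(match[2]) } : null;
}

const link = buildShareUrl("https://example.com/interview.ogg", 12.5, 20);
console.log(link, readShareUrl(link));
```

A player that understands the fragment can then seek to the start point and stop at the end point.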

The Hyperaudio Pad

The Hyperaudio Pad is a tool to allow users to easily assemble spoken word based audio or video from Hypertranscripts.

It will allow users to create their media by simply copying and pasting text from existing transcripts into a new transcript. These Hypertranscripts link to the actual media that they transcribe, so when parts of the transcripts are copied the references to their associated media are also copied.

Note that the media itself is not copied, just references to parts of it. The result is a mash-up of video or audio and an associated representative transcript.

The tool will be:

- Web based, cross-browser, cross-platform (work on mobile devices) and open source.

- Simply presented, taking cues from minimalist distraction-free tools such as iAWriter.

- Intuitive and extremely easy to use, taking advantage of the text editing paradigm.

- Extensible – written in such a way that it can easily be built upon.

The interface should be clear and minimal. The user can search for and choose from existing hypertranscripts, open them, play them and copy parts of them to their ‘document’, all from within the same page.

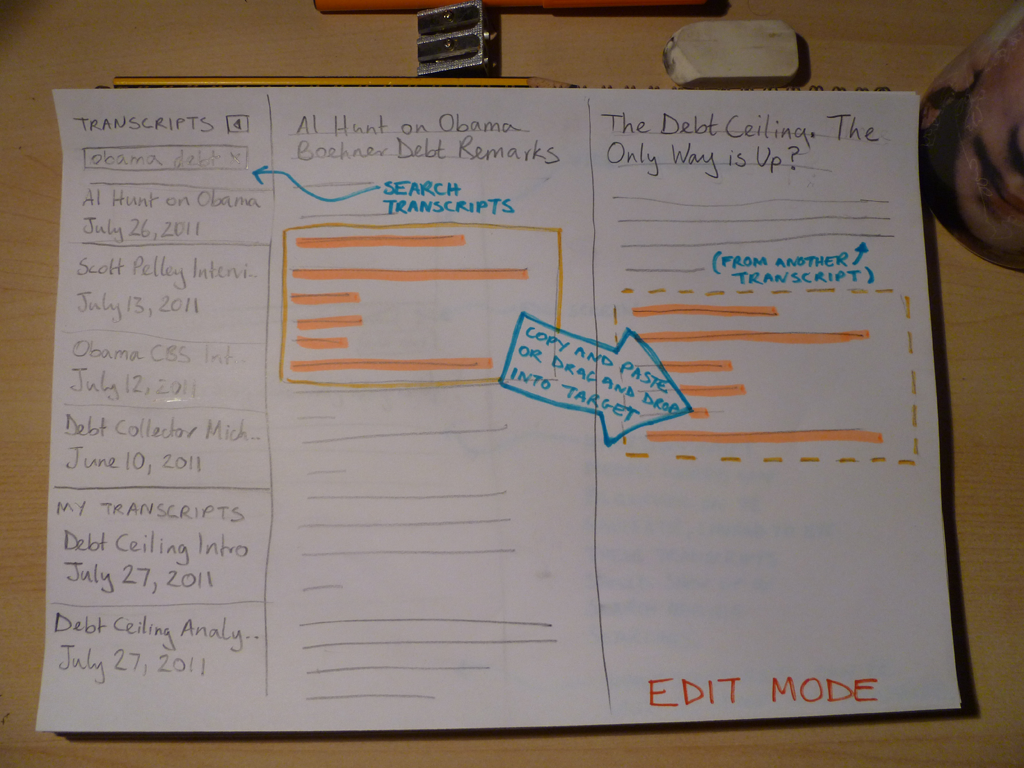

Edit Mode

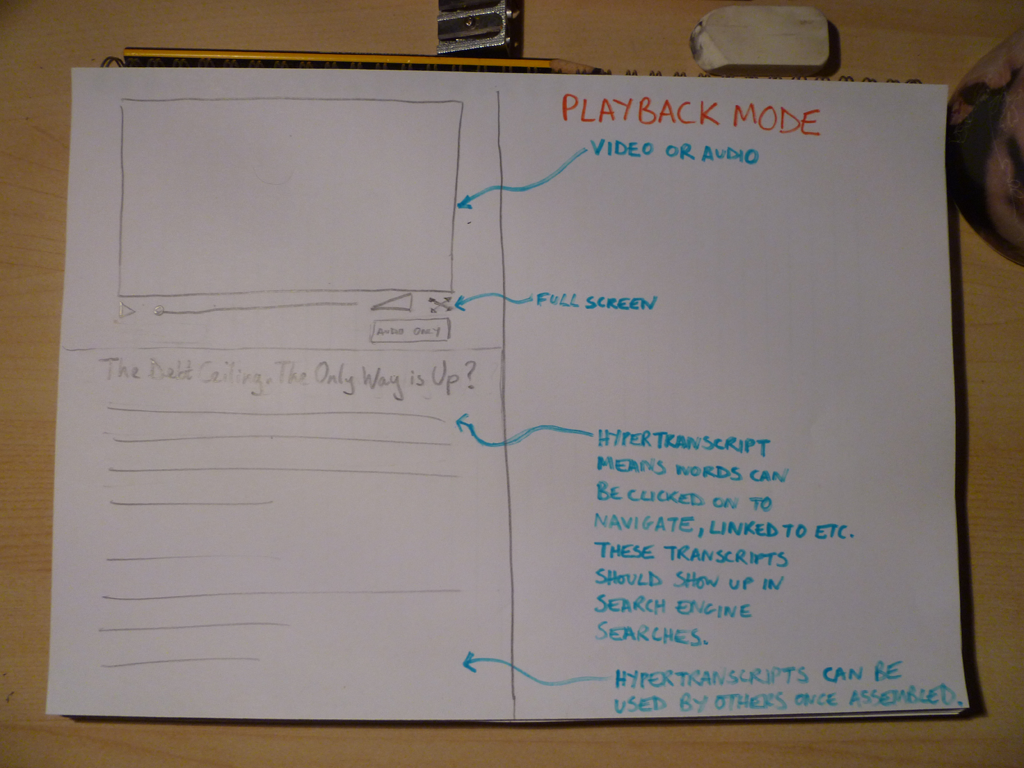

Playback Mode

Who is this tool for?

- Newsroom journalists who need to very quickly assemble media from different existing sources and perhaps adapt the result as news unfurls.

- Podcasters, citizen journalists and mediabloggers who want a free and easy way of putting together media programs or newscasts.

- Anyone who wants their resulting media programs to include a transcript for increased accessibility and visibility.

- Anybody who is happy for their results to be taken apart, re-combined or referenced by others.

How does it work?

Hypertranscripts are essentially defined in HTML. The simplest possible example for use by a tool such as the Hyperaudio Pad could be:

WORD-FROM-THE-TRANSCRIPT

Example:

burgeoning

Note, we can add to this format should we wish to include meta and other data, for example:

WORD-FROM-THE-TRANSCRIPT

(from Julien Dorra’s MetaFragment proposal document)

Transcripts will contain a series of these marked up words, each individual word containing enough data to describe its associated media and at which time in the media it occurs.

Note that copying the Hypertranscript into any text editor will result in valid HTML and so can be used in other tools and applications external to the Hyperaudio Pad.

It is likely that the user’s resulting transcript will reference several different pieces of media and so it may be beneficial for the application to ‘load’ and possibly cache the various media sources in advance for the smoothest possible playback. We can achieve this by parsing the transcript after every save.

Future developments could include a simple scripting language that users can insert between transcripts to make transitions smoother and even the possibility to add background music or sound effects, for example:
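Parsing the transcript on save could be as simple as collecting every distinct media source it mentions. The sketch below assumes, purely for illustration, that each word is a `span` carrying its media URL in a `data-src` attribute. That attribute name, the file names and the function name are hypothetical stand-ins rather than the proposed markup.

```javascript
// Sketch: list every distinct media source a saved transcript refers to,
// so the application can start loading them before playback.
// The data-src attribute is a hypothetical stand-in for the real markup.
function collectMediaSources(transcriptHtml) {
  const sources = new Set();
  const pattern = /data-src="([^"]+)"/g;
  let match;
  while ((match = pattern.exec(transcriptHtml)) !== null) {
    sources.add(match[1].split("#")[0]); // ignore any time fragment on the URL
  }
  return [...sources];
}

const saved =
  '<span data-src="a.ogg" data-start="1200">Imagine</span> ' +
  '<span data-src="a.ogg" data-start="1650">that</span> ' +
  '<span data-src="b.webm" data-start="300">burgeoning</span>';
const toPreload = collectMediaSources(saved);
console.log(toPreload);
```

In a browser each source could then be attached to a media element with `preload="auto"` so that it is ready by the time playback reaches it.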

[fade out over 5 seconds]

or

[background fade in 'threatening music' over 3 seconds]

and then at the bottom

[reference 'threatening music' http://soundcloud.com/some.mp3]

You may say I’m a Dreamer

In order to start using the Hyperaudio Pad to its full potential we first need our media to be transcribed, and to do this we need to make tools that make transcription easier and, if possible, free. However, I believe that hypertranscripts deliver so many benefits that the incentive to transcribe media is high.

The Hyperaudio Pad is just one of many tools that could be built on the underlying Hypertranscript platform. To build this platform we should collaborate with others to:

- establish a standard markup for transcripts

- make the platform easy to build upon and enhance as new technologies become viable

- create a community with a pioneering spirit

- develop an ecosystem to facilitate the creation of a toolkit

During the course of this project it’s been a pleasure to find many other participants interested in the general theme of describing/transcribing media and utilizing the result. Over the last couple of weeks I have been collaborating with Julien Dorra, Samuel Huron, Nicholas Doiron and Shaminder Dulai. The excitement has been palpable and, although we were only communicating virtually, it felt like we were sparking off each other.

So I guess at least as far as this dream is concerned, I’m not the only one.

Introducing the Hyperaudio Pad (working title)

Last week as part of the Mozilla News Lab, I took part in webinars with Shazna Nessa – Director of Interactive at the Associated Press in New York, Mohamed Nanabhay – an internet entrepreneur and Head of Online at Al Jazeera English and Oliver Reichenstein – CEO of iA (Information Architects, Inc.).

I have a few ideas on how we can create tools to help journalists. I mean journalists in the broadest sense – casual bloggers as well as hacks working for large news organizations. In previous weeks I have been deeply absorbed in all the fantastic and varied information coming my way. Last week things started to fall into place. A seed of an idea that I’ve had at the back of my mind for some time pushed its way to the front and started to evolve.

Something that cropped up time and again was that if you are going to create tools for journalists, you should try and make them as easy to use as possible. The idea I hope to run with is a simple tool to allow users to assemble audio or video programs from different sources by using a paradigm that most people are already familiar with. I hope to build on my work on something I’ve called hypertranscripts, which strongly couple text and the spoken word in a way that is easily navigable and shareable.

The Problem

Editing, compiling and assembling audio or video usually requires fairly complex tools. This is compounded by the fact that it’s very difficult to ascertain the content of the media without actually playing through it.

The Solution?

I propose that we step back and consider other ways of representing this media content. In the case of journalistic pieces, this content usually includes the spoken word which we can represent using text by transcribing it. My idea is to use the text to represent the content and allow that text to be copied, pasted, dragged and dropped from document to document with associated media intact. The documents will take the form of hypertranscripts and this assemblage will all work within the context of my proposed application, going under the working title of the Hyperaudio Pad. (Suggestions welcome!) Note that the pasting of any content into a standard editor will result in hypertranscripted content that could exist largely independently of the application itself.

Some examples of hypertranscripts can be found in a couple of demos I worked on earlier this year:

Danish Radio Demo

Radiolab Demo

As the interface is largely text based I’m taking a great deal of inspiration from the elegance and simplicity of Oliver’s iAWriter. Here are a couple of rough sketches:

Edit Mode

Playback Mode

Working Together

Last week I’m happy to say that I found myself collaborating with other members of the News Lab, namely Julien Dorra and Samuel Huron, both of whom are working on related projects. These guys have some excellent ideas that relate to meta-data and mixed media that tie in with my own and I look forward to working with them in the future. Exciting stuff!
