Hyper Audio

Introducing the Hyperaudio Pad (working title)

Last week, as part of the Mozilla News Lab, I took part in webinars with Shazna Nessa (Director of Interactive at the Associated Press in New York), Mohamed Nanabhay (an internet entrepreneur and Head of Online at Al Jazeera English) and Oliver Reichenstein (CEO of iA, Information Architects, Inc.).

I have a few ideas on how we can create tools to help journalists. I mean journalists in the broadest sense – casual bloggers as well as hacks working for large news organizations. In previous weeks I have been absorbing all the fantastic and varied information coming my way. Last week things started to fall into place. A seed of an idea that I’ve had at the back of my mind for some time pushed its way to the front and started to evolve.

Something that cropped up time and again was that if you are going to create tools for journalists, you should try to make them as easy to use as possible. The idea I hope to run with is a simple tool that allows users to assemble audio or video programs from different sources by using a paradigm that most people are already familiar with. I hope to build on my work on something I’ve called hypertranscripts, which strongly couple text and the spoken word in a way that is easily navigable and shareable.

The Problem

Editing, compiling and assembling audio or video usually requires fairly complex tools. This is compounded by the fact that it’s very difficult to ascertain the content of the media without actually playing through it.

The Solution?

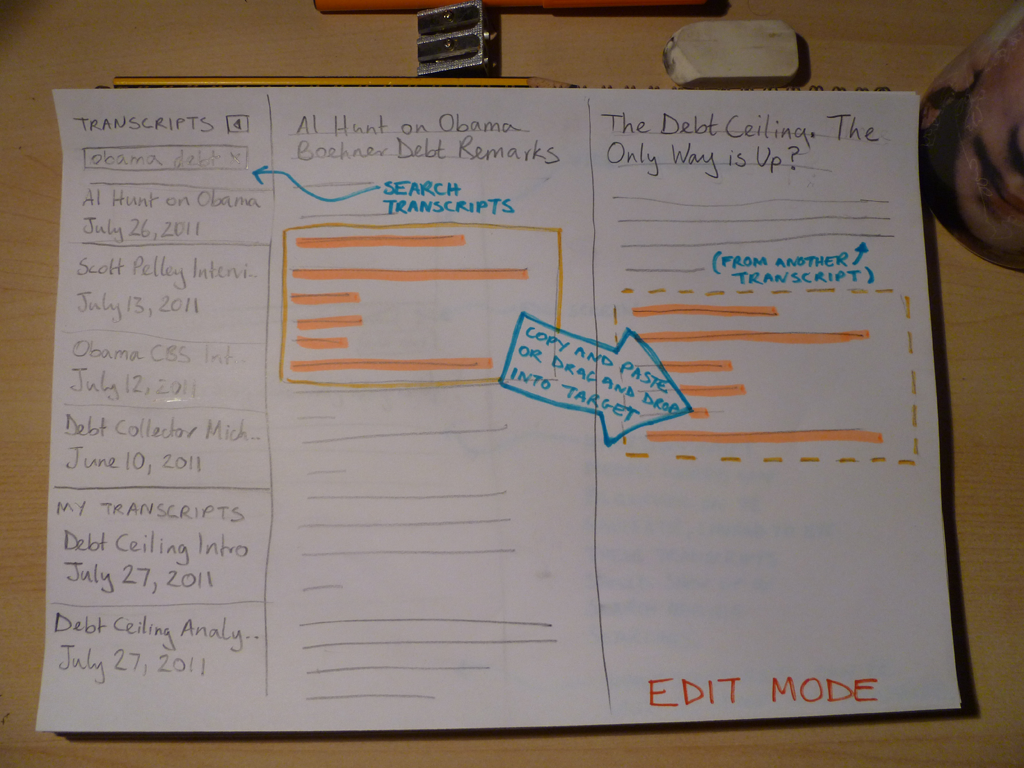

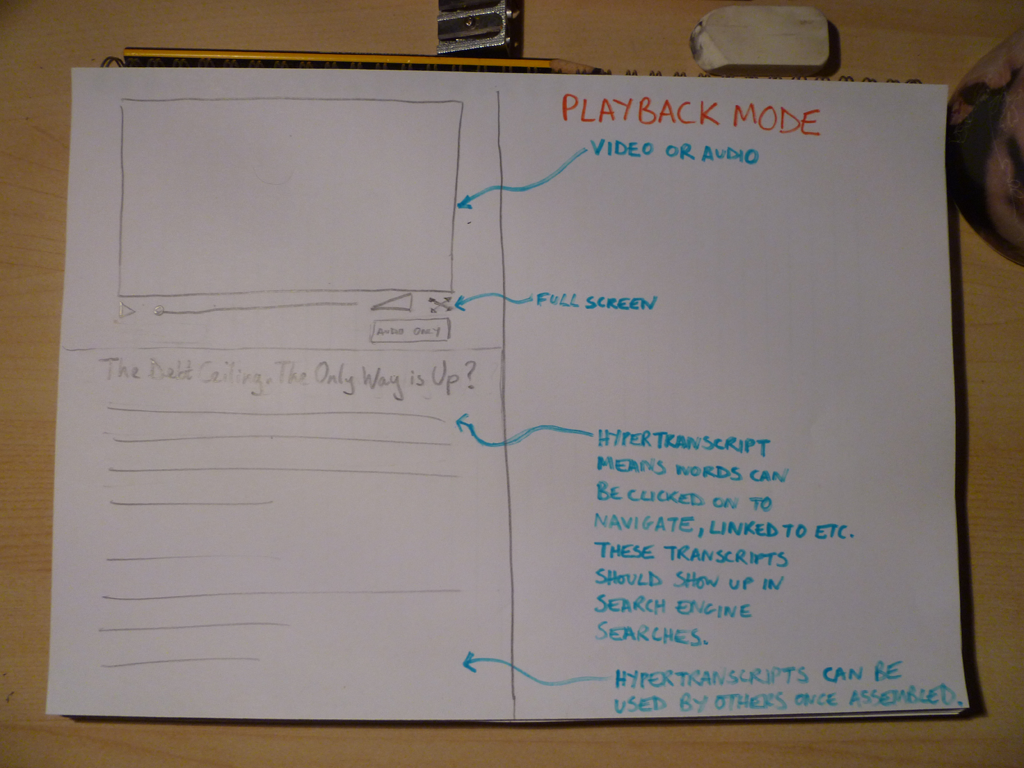

I propose that we step back and consider other ways of representing this media content. In the case of journalistic pieces, this content usually includes the spoken word which we can represent using text by transcribing it. My idea is to use the text to represent the content and allow that text to be copied, pasted, dragged and dropped from document to document with associated media intact. The documents will take the form of hypertranscripts and this assemblage will all work within the context of my proposed application, going under the working title of the Hyperaudio Pad. (Suggestions welcome!) Note that the pasting of any content into a standard editor will result in hypertranscripted content that could exist largely independently of the application itself.
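To make the idea a little more concrete, here is a minimal sketch of how pasted text might carry its media along. It is an illustration only: the word shape (`text`, `src`, `start`, `end`), the `assembleSegments` function and the `maxGap` parameter are hypothetical names, not part of any existing hypertranscript code. Each word remembers which media file it came from and when it is spoken, so a document stitched together from several sources can be turned into an ordered list of clips to play.

```javascript
// Hypothetical word shape: { text, src, start, end }, times in seconds.
// Consecutive words from the same source are merged into one playable clip.
function assembleSegments(words, maxGap = 0.5) {
  const segments = [];
  for (const word of words) {
    const last = segments[segments.length - 1];
    // Extend the current clip only if the word carries straight on
    // from where the clip ends, in the same media file.
    const continues =
      last !== undefined &&
      last.src === word.src &&
      Math.abs(word.start - last.end) <= maxGap;
    if (continues) {
      last.end = word.end;
      last.text += " " + word.text;
    } else {
      segments.push({
        src: word.src,
        start: word.start,
        end: word.end,
        text: word.text,
      });
    }
  }
  return segments;
}

// Two excerpts pasted one after the other from different interviews.
const pasted = [
  { text: "Hello", src: "interview-a.ogg", start: 0, end: 0.5 },
  { text: "world", src: "interview-a.ogg", start: 0.5, end: 1 },
  { text: "Good", src: "interview-b.ogg", start: 12, end: 12.5 },
  { text: "evening", src: "interview-b.ogg", start: 12.5, end: 13 },
];
console.log(assembleSegments(pasted)); // two clips, one per source
```

A player would then step through the clips in order, loading each `src` and playing from `start` to `end`.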

Some examples of hypertranscripts can be found in a couple of demos I worked on earlier this year:

Danish Radio Demo

Radiolab Demo

As the interface is largely text based, I’m taking a great deal of inspiration from the elegance and simplicity of Oliver’s iA Writer. Here are a couple of rough sketches:

Edit Mode

Playback Mode

Working Together

I’m happy to say that last week I found myself collaborating with other members of the News Lab, namely Julien Dorra and Samuel Huron, both of whom are working on related projects. These guys have some excellent ideas relating to metadata and mixed media that tie in with my own, and I look forward to working with them in the future. Exciting stuff!

Hyper Audio – A New Way to Interact

Recently I had the privilege of working on a very interesting project with a few folk from Mozilla – it’s the type of project I love to work on, as it involves web audio and its deep integration into the general web experience.

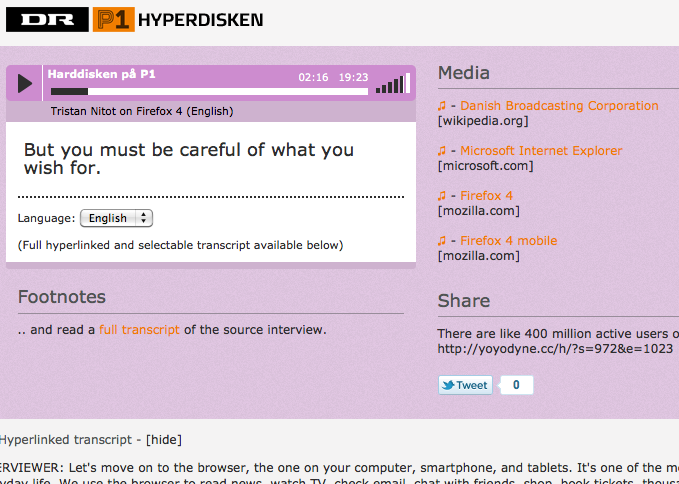

Web audio is no longer consigned to being the passive play and pause experience of yesteryear. It has the potential to be much more: it can be a driver of much richer interactions, something Henrik Moltke explores with what he dubs Hyper Audio. The remit of the project was to take various media elements of a radio interview broadcast by Danish Radio station DR (audio, subtitles, transcripts, footnotes etc.) and link these in an intuitive and useful manner.

To say this project was right up my street would be an understatement – this project was in my flat, raiding my fridge and drinking my beerz. I was already fascinated by the concept. I’d been playing about, creating audio-related demos for a couple of years, and in November last year I decided to attend the Mozilla Drumbeat festival and created a demo for the event. The demo was accepted for exhibition at the science fair on the opening evening and garnered some interesting feedback both on and offline. What it effectively demonstrated was the synchronization and bi-directional control of text and audio.

When Henrik asked me to work on this project, I naturally jumped at the opportunity. Due to time differences, pressing deadlines and the luxury of having a nice quiet office, I stayed up late most nights for a week, happily hacking away, helped and supported by various Mozillians and the popcorn.js community.

So that’s the back-story. Here’s the demo.

Some things to try:

- Switch the audio from English to Danish – it should continue from the same point in Danish, subtitles and the transcript should also change appropriately.

- Try clicking on words in the transcript – the audio should start playing from the corresponding point.

- Highlight a passage of transcript text – this should add a tweetable excerpt to the ‘share’ box. The URL included should just play that part of the audio.

- Clicking the music note icons in the ‘media’ box should take you to the point of the audio where that resource was mentioned.

How did we achieve this? We used popcorn.js to display subtitles, footnotes and other time-related resources. In fact, a lot of this was already in place when I picked up the project. I then integrated jPlayer for the audio playback and deeper interaction. Popcorn allows us to associate timings with actions and have these actions triggered by the media when it reaches those timings. So pretty much perfect for our needs. jPlayer provided a solid abstraction above the native audio API. It allowed me to easily synchronize and switch audio tracks and jump to specific points or sections in the audio, with very few lines of code. Importantly, it also protected us from cross-browser issues and allowed our designers to effortlessly create a custom skin for the player.
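The idea of tying actions to timings can be sketched in a few lines. To be clear, this is not Popcorn's API and not the demo's code: `createCueRunner` and the cue shape (`time`, `action`) are hypothetical names for a stand-in that shows the principle. The returned function is meant to be called with the current playback position, for example from an audio element's `timeupdate` event.

```javascript
// Hypothetical stand-in for timed actions; not Popcorn's actual API.
// Each cue is { time, action }, with time in seconds.
function createCueRunner(cues) {
  const sorted = [...cues].sort((a, b) => a.time - b.time);
  let lastTime = -Infinity;
  return function onTimeUpdate(currentTime) {
    // Only fire when playback moves forward. A backward seek just
    // resets the position so cues can fire again on the way back.
    if (currentTime >= lastTime) {
      for (const cue of sorted) {
        if (cue.time > lastTime && cue.time <= currentTime) {
          cue.action(currentTime);
        }
      }
    }
    lastTime = currentTime;
  };
}

// Simulated playback positions instead of a real audio element.
const log = [];
const runCues = createCueRunner([
  { time: 2, action: () => log.push("show footnote") },
  { time: 0.5, action: () => log.push("show subtitle") },
]);
[0.25, 1, 2.5].forEach(runCues);
console.log(log); // [ 'show subtitle', 'show footnote' ]
```

One simplification worth noting: a forward jump fires every cue it skips over, which a real player would probably want to handle differently.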

So this was the control, but what about the media? Well, this part was a massive team effort. Henrik managed to provide a very accurately timed transcript. We had hoped to use the subtitles in SRT format, but for convenience we parsed them (or rather Scott Downe parsed them) into JSON format.
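For readers unfamiliar with SRT, a conversion of this kind might look roughly like the sketch below. It is not Scott's parser: `parseSrt`, `srtTimeToSeconds` and the output shape (`start`, `end`, `text`) are assumptions made for illustration, and the sample subtitles are invented. It assumes well-formed input in which each block has a counter line, a timing line and one or more text lines.

```javascript
// Convert an SRT timestamp such as "00:01:02,250" into seconds.
function srtTimeToSeconds(stamp) {
  const match = /^(\d+):(\d{2}):(\d{2})[,.](\d{3})$/.exec(stamp.trim());
  if (!match) throw new Error("Bad SRT timestamp: " + stamp);
  const [, h, m, s, ms] = match;
  return Number(h) * 3600 + Number(m) * 60 + Number(s) + Number(ms) / 1000;
}

// Turn SRT text into an array of { start, end, text } objects.
function parseSrt(srt) {
  return srt
    .replace(/\r\n?/g, "\n") // normalise line endings
    .trim()
    .split(/\n{2,}/) // blocks are separated by blank lines
    .map((block) => {
      const lines = block.split("\n");
      // lines[0] is the counter, lines[1] the timing, the rest is text.
      const [start, end] = lines[1].split("-->").map(srtTimeToSeconds);
      return { start, end, text: lines.slice(2).join(" ") };
    });
}

// Invented sample data, for illustration only.
const sample = [
  "1",
  "00:00:01,500 --> 00:00:04,000",
  "Welcome to the programme.",
  "",
  "2",
  "00:01:02,250 --> 00:01:05,000",
  "Today we talk about",
  "audio on the web.",
].join("\n");

console.log(JSON.stringify(parseSrt(sample), null, 2));
```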

One of the bigger issues we encountered was that we only had the transcript in English, and the timings for the Danish transcript were naturally different. Luckily we had accurately timed Danish subtitles and the legendary Bobby Richter on hand to convert the subtitles to individual words complete with their timings, which he did by cunningly interpolating the timing of each word based on its length and its position within the subtitle. All knocked out in about 10 minutes and in 20 lines of code. It worked surprisingly well, although of course you need to be able to understand Danish to truly tell. We could probably have parsed the subtitles into the transcript on the fly, but due to time limitations we made them static.
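One plausible reading of that interpolation is sketched below. This is a guess at the approach rather than Bobby's actual code, and `wordsFromSubtitle` and the cue shape are hypothetical names. Each word's start time is placed within the subtitle's time span in proportion to the number of characters that come before it, so longer words are given more time.

```javascript
// Estimate per-word start times for one subtitle cue { start, end, text }.
// Assumption: speaking time is proportional to word length in characters.
function wordsFromSubtitle(cue) {
  const words = cue.text.split(/\s+/).filter(Boolean);
  const totalChars = words.reduce((sum, w) => sum + w.length, 0);
  const duration = cue.end - cue.start;
  let charsBefore = 0;
  return words.map((text) => {
    // Position in the cue = share of characters already spoken.
    const start = cue.start + duration * (charsBefore / totalChars);
    charsBefore += text.length;
    return { text, start };
  });
}

// Two words of equal length split the cue's time span in half.
console.log(wordsFromSubtitle({ start: 10, end: 14, text: "Hej med" }));
// [ { text: 'Hej', start: 10 }, { text: 'med', start: 12 } ]
```

The estimate ignores pauses and speaking rate, which is why the result can only really be judged by ear.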

As an aside not directly related to audio, I managed to hack together some code that placed highlighted transcript text in the ‘share’ box and grabbed the timings of the first and last words. From there it was pretty straightforward to make this excerpt tweetable.
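In outline, and leaving out the browser's text selection handling, the step from a highlighted range to a shareable excerpt could look like this. It is a sketch under assumptions rather than the demo's code: `buildExcerpt`, the word shape and the `s` and `e` query parameters are all hypothetical, and the demo's real URL format may well differ.

```javascript
// Build a shareable excerpt from the first to the last selected word.
// words: array of { text, start, end }, times in seconds.
function buildExcerpt(words, firstIndex, lastIndex, pageUrl) {
  const selected = words.slice(firstIndex, lastIndex + 1);
  if (selected.length === 0) throw new Error("Nothing selected");
  const text = selected.map((w) => w.text).join(" ");
  const start = selected[0].start;
  const end = selected[selected.length - 1].end;
  // Hypothetical URL scheme: "s" and "e" mark where playback
  // should start and stop when the link is opened.
  const url = `${pageUrl}?s=${start}&e=${end}`;
  return { text, start, end, url };
}

const transcript = [
  { text: "web", start: 1, end: 1.5 },
  { text: "audio", start: 1.5, end: 2 },
  { text: "rocks", start: 2, end: 2.5 },
];
console.log(buildExcerpt(transcript, 1, 2, "https://example.org/demo"));
// text: 'audio rocks', url: 'https://example.org/demo?s=1.5&e=2.5'
```

The page receiving such a link would read the two parameters on load, seek to the start time and pause once the end time is reached.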

This whole endeavor was very much a group effort. A huge thanks to the popcorn.js team, who made joining their IRC feel like walking into a pub full of friends.

Special credit and thanks then should go to Scott Downe, Bobby Richter, Barry Threw, David Humphrey, Brett Gaylor, Ben Moskowitz, Christian Valentiner, Silvia Benvenuti and of course Henrik ‘Tank’ Moltke whose baby all this was. It was great being part of such a talented team. Awesomesauce indeed.

Mark B
