HTML5

Hyper Audio – A New Way to Interact

Recently I had the privilege of working on a very interesting project with a few folk from Mozilla – it’s the type of project I love to work on, as it involves web audio and its deep integration into the general web experience.

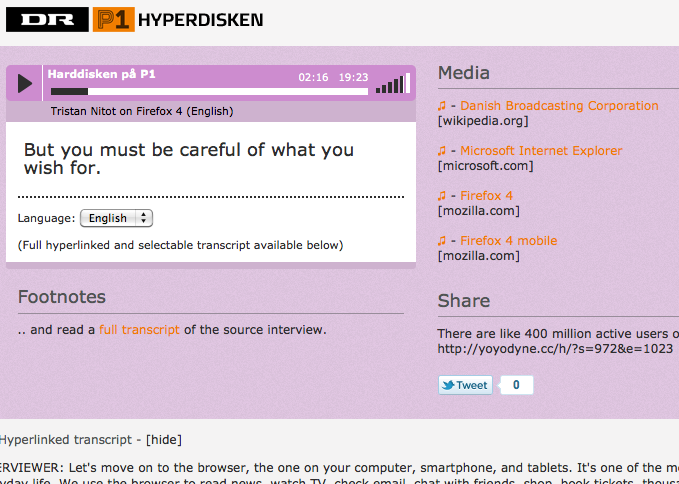

Web audio is no longer consigned to being the passive play-and-pause experience of yesteryear. It has the potential to be much more: a driver of much richer interactions, which Henrik Moltke explores with something he dubs Hyper Audio. The remit of the project was to take the various media elements of a radio interview broadcast by the Danish radio station DR (audio, subtitles, transcripts, footnotes and so on) and link them in an intuitive and useful manner.

To say this project was right up my street would be an understatement – this project was in my flat, raiding my fridge and drinking my beerz. I was already fascinated by the concept. I’d been playing about, creating audio-related demos for a couple of years, and in November last year I decided to attend the Mozilla Drumbeat festival and created a demo for the event. The demo was accepted for exhibition at the science fair on the opening evening and garnered some interesting feedback both on and offline. What it effectively demonstrated was the synchronization and bi-directional control of text and audio.

When Henrik asked me to work on this project, I naturally jumped at the opportunity. Due to time differences, pressing deadlines and the luxury of having a nice quiet office, I stayed up late most nights for a week, happily hacking away, helped and supported by various Mozillians and the popcorn.js community.

So that’s the back-story, here’s the demo.

Some things to try:

- Switch the audio from English to Danish – it should continue from the same point in Danish, subtitles and the transcript should also change appropriately.

- Try clicking on words in the transcript – the audio should start playing from the corresponding point.

- Highlight a passage of transcript text – this should add a tweetable excerpt to the ‘share’ box. The URL included should just play that part of the audio.

- Clicking the music note icons in the ‘media’ box should take you to the point of the audio where that resource was mentioned.

How did we achieve this? We used popcorn.js to display subtitles, footnotes and other time-related resources. In fact, a lot of this was already in place when I picked up the project. I then integrated jPlayer for the audio playback and deeper interaction. Popcorn allows us to associate timings with actions and have those actions triggered when the media reaches said timings, so it was pretty much perfect for our needs. jPlayer provided a solid abstraction above the native audio API: it allowed me to easily synchronize and switch audio tracks and jump to specific points or sections in the audio, with very few lines of code. Importantly, it also protected us from any cross-browser issues and allowed our designers to effortlessly create a custom skin for the player.
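The idea of tying actions to timings can be sketched without either library. What follows is a minimal illustration in plain JavaScript, not the popcorn.js or jPlayer API; `createCueRunner` and the `{ start, action }` cue shape are hypothetical names made up for this example.

```javascript
// Hypothetical sketch: fire each cue's action once playback passes its start time.
function createCueRunner(cues) {
  // Work on a sorted copy so the caller's array is left untouched.
  const pending = cues.slice().sort((a, b) => a.start - b.start);
  let next = 0; // index of the next cue that has not fired yet

  return {
    // Call with the current playback position (in seconds) on every time update.
    update(currentTime) {
      while (next < pending.length && pending[next].start <= currentTime) {
        pending[next].action();
        next += 1;
      }
    },
    // After a seek, re-arm every cue that starts later than the new position.
    seek(time) {
      const index = pending.findIndex((cue) => cue.start > time);
      next = index === -1 ? pending.length : index;
    },
  };
}
```

In a browser this could be driven from a media element's `timeupdate` event, for example `audio.addEventListener("timeupdate", () => runner.update(audio.currentTime))`.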

So this was the control, but what about the media? Well, this part was a massive team effort. Henrik managed to provide a very accurately timed transcript. We had hoped to use the subtitles in SRT format, but for convenience we parsed them (or rather, Scott Downe parsed them) into JSON format.
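For readers unfamiliar with SRT, here is a rough sketch of what such a conversion might look like. It is not Scott's code; `parseSrt`, `srtTimeToSeconds` and the output shape (`start`, `end`, `text`) are assumptions chosen for illustration.

```javascript
// Convert an SRT timestamp such as "00:01:02,250" into seconds.
function srtTimeToSeconds(stamp) {
  const match = /^(\d+):(\d{2}):(\d{2})[,.](\d{1,3})$/.exec(stamp.trim());
  if (!match) throw new Error("Unrecognised SRT timestamp: " + stamp);
  const [, h, m, s, ms] = match;
  return Number(h) * 3600 + Number(m) * 60 + Number(s) + Number(ms.padEnd(3, "0")) / 1000;
}

// Turn SRT text into an array of { start, end, text } objects (assumed shape).
function parseSrt(srt) {
  return srt
    .replace(/\r\n?/g, "\n") // normalise line endings
    .trim()
    .split(/\n{2,}/) // subtitle blocks are separated by blank lines
    .map((block) => {
      const lines = block.split("\n");
      const timeLine = lines.findIndex((line) => line.includes("-->"));
      if (timeLine === -1) return null; // skip blocks without a timing line
      const [start, end] = lines[timeLine].split("-->");
      return {
        start: srtTimeToSeconds(start),
        // Ignore any positioning hints that may follow the end time.
        end: srtTimeToSeconds(end.trim().split(/\s+/)[0]),
        text: lines.slice(timeLine + 1).join(" ").trim(),
      };
    })
    .filter(Boolean);
}
```

`JSON.stringify(parseSrt(srtText))` would then give a static JSON file of the kind described above.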

One of the bigger issues we encountered was that we only had the transcript in English and the timings for the Danish transcript were naturally different. Luckily we had accurately timed Danish subtitles and the legendary Bobby Richter on hand to convert the subtitles to individual words complete with their timings, which he did by cunningly interpolating the timing of each word based on its length and its position within the subtitle. All knocked out in about 10 minutes and in 20 lines of code. It worked surprisingly well, although of course you need to be able to understand Danish to truly tell. We could probably have parsed the subtitles into the transcript on the fly, but due to time limitations we made them static.
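To give a flavour of that interpolation, here is one way it could be done. This is my own reconstruction of the idea, not Bobby's actual code: each word is assumed to start after a share of the subtitle's duration proportional to the number of characters that precede it. The function name and object shapes are hypothetical.

```javascript
// Hypothetical sketch: estimate a start time for each word in a timed subtitle.
// Assumes speech is spread evenly over the characters of the subtitle text.
function interpolateWordTimings(subtitle) {
  const words = subtitle.text.split(/\s+/).filter(Boolean);
  const totalChars = words.reduce((sum, word) => sum + word.length, 0);
  const duration = subtitle.end - subtitle.start;
  let charsSoFar = 0; // characters spoken before the current word

  return words.map((word) => {
    const start = subtitle.start + (duration * charsSoFar) / totalChars;
    charsSoFar += word.length;
    return { word, start };
  });
}
```

For a subtitle running from 10s to 14s with the text "ab cd efgh", the words would be placed at 10s, 11s and 12s respectively.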

As an aside not directly related to audio, I managed to hack together some code that places highlighted transcript text in the ‘share’ box and grabs the timings of the first and last words. From there it was pretty much straightforward to make the excerpt tweetable.
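Roughly speaking, the excerpt logic boils down to something like the sketch below. The `start` and `end` query parameters, the word objects with their own `start` and `end` times, and the function name are all assumptions for illustration; the demo's real URL scheme may well differ.

```javascript
// Hypothetical sketch: build a shareable link for a range of transcript words.
// Each word is assumed to look like { word, start, end } with times in seconds.
function buildExcerptLink(words, firstIndex, lastIndex, pageUrl) {
  const selected = words.slice(firstIndex, lastIndex + 1);
  if (selected.length === 0) throw new Error("Empty selection");

  const url = new URL(pageUrl);
  // Play from the start of the first word to the end of the last one.
  url.searchParams.set("start", String(selected[0].start));
  url.searchParams.set("end", String(selected[selected.length - 1].end));

  return {
    text: selected.map((entry) => entry.word).join(" "),
    url: url.toString(),
  };
}
```

The returned text and URL can then be dropped into the ‘share’ box, and the page reads the two parameters on load to play just that section.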

This whole endeavor was very much a group effort. A huge thanks to the popcorn.js team, who made joining their IRC feel like walking into a pub full of friends.

Special credit and thanks then should go to Scott Downe, Bobby Richter, Barry Threw, David Humphrey, Brett Gaylor, Ben Moskowitz, Christian Valentiner, Silvia Benvenuti and of course Henrik ‘Tank’ Moltke whose baby all this was. It was great being part of such a talented team. Awesomesauce indeed.

Mark B

P2P Web Apps – Brace yourselves, everything is about to change

The old client-server web model is out of date, it’s on its last legs, it will eventually die. We need to move on, we need to start thinking differently if we are going to create web applications that are robust, independent and fast enough for tomorrow’s generation. We need to decentralise and move to a new distributed architecture. At least that’s the idea that I am exploring in this post.

It seems to me that a distributed architecture is desirable for many reasons. As things stand, many web apps rely on other web apps to do their thing, and this is not necessarily as good a thing as we might first imagine. The main issue being single points of failure (SPOF). Many developers create (and are encouraged by the current web model to create) applications with many points of failure, without fallbacks. Take the Google CDN for jQuery for example – no matter how improbable, you take this down and half the web’s apps stop functioning. Google.com is probably the biggest single point of failure the world of software has ever known. Not good! Many developers think that if they can’t rely on Google, then who can they rely on? The point is that they should not rely on any single service, and the more single points you rely on, the worse it gets! It’s almost like we have forgotten the principles behind (and the redundancy built into) the Internet – a network based on the nuclear-war-tolerant ARPANET.
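Even within the current model, a fallback is not hard to add. The sketch below tries a list of sources in order and only gives up when every one of them has failed; `loadWithFallback` and the `load` callback are hypothetical names, and how a single source is actually fetched is left to the caller.

```javascript
// Hypothetical sketch: try each source in turn so that no single host is a SPOF.
// `load` is any function that takes a source and returns a promise.
async function loadWithFallback(sources, load) {
  const errors = [];
  for (const source of sources) {
    try {
      return await load(source); // first source that works wins
    } catch (error) {
      errors.push(source + ": " + error.message);
    }
  }
  throw new Error("All sources failed: " + errors.join("; "));
}
```

Something like `loadWithFallback(["https://cdn.example.com/lib.js", "/js/lib.js"], loadScript)` would fall back to a locally hosted copy when the CDN is unreachable, where `loadScript` is whatever script loader you already use.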

I believe it’s time to start looking at a new, more robust, decentralised and distributed web model, and I think peer-to-peer (P2P) is a big part of that. Many clients doubling as servers should be able to deliver the robustness we require; specifically, SPOFs would be eliminated. Imagine Wikileaks as a P2P app – if we eliminate the single central server URL model and something happens to wikileaks.com, the web app continues to function regardless. It’s no coincidence that Wikileaks chose to distribute documents on bittorrent. Another benefit of a distributed architecture is that it scales better. We already know this, of course – it’s one of the benefits of client-side code. Imagine Twitter as a P2P web app, and imagine how much easier it could scale with the bulk of processing distributed amongst its clients. I think we could all benefit hugely from a comprehensive web-based P2P standard. Essentially this boils down to browsers with built-in web servers using an established form of inter-browser communication.

I’m going to go out on a limb here for a moment, bear with me as I explore the possibilities. Let’s start with a what if. What if, in addition to being able to download client-side JavaScript into your cache manifest you could also download server-side JavaScript? After all what is the server-side now, if not little more than a data/comms broker? Considering the growing popularity of NoSQL solutions and server-side JavaScript (SSJS) it seems to me that it wouldn’t take much to be able to run SSJS inside the browser, granted you may want to deliver a slightly different version than runs on the server, and perhaps this is little more than an intermediary measure. We already have Indexed Database API on the client, if we standardise the data storage API part of the equation, we’re almost there, aren’t we?

So let’s go through a quick example – let’s take Twitter again. How would this all work? Say we visit a twitter.com that detects our P2P capabilities and redirects to p2p.twitter.com. After the page has loaded, we download the SSJS and start running it on our local server. We may also grab some information about which other peers are running Twitter. Assuming the P2P is done right, almost immediately we are independent of twitter.com: we’ve got rid of our SPOF. \o/ At the same time, if we choose to run offline we have enough data and logic to be able to do all the things we would usually do, bar transmit and receive. Significantly, load on twitter.com could be greatly reduced by running both server-side and client-side code locally. To P2P browsers, twitter.com would become little more than a tracker or a source of updates. Also, let’s not forget, things run faster locally.

Admittedly the difference between server-side and client-side JS does become a little blurred at this point. But as an intermediary measure at least, it could be useful to maintain this distinction as it would provide a migration path for web apps to take. For older browsers you can still rely on the single server method while providing for P2P browsers. Another interesting beneficial side-effect is that perhaps we can bring the power of ‘view-source’ to server-side code. If you can download it you can surely view it.

The thing that I am most unsure about is what role HTTP plays in all this. It might be best to take an evolutionary approach to such a revolutionary change. We could continue to use HTTP to communicate between clients and servers, keeping the many benefits of a RESTful architecture, and restrict the use of more efficient TCP-based comms to those aspects of applications that require real-time ‘push’.

It seems to me that with initiatives such as Opera Unite (where P2P and a web server are already being built into the browser) and other browser makers looking to do the same, this is the direction in which web apps are heading. There are too many advantages to ignore: robustness, speed, scalability – these are very desirable things.

I guess what is needed now is some deep and rigorous discussion and if considered feasible, standards to be adopted so all browsers can move forward with a common approach for implementation. Security will obviously feature highly as part of these discussions and so will common library re-use on the client.

If web apps are going to compete with native apps, it seems that they need to be able to do everything native apps do, and that includes P2P communication and enhanced offline capability. It just so happens that these two important aspects seem to be linked. Once suitable mechanisms and standards are in place, the fact that you can write web applications that are equivalent or even superior to native apps, using one common approach for all platforms, is likely to be the killer differentiator.

Thanks to @bluetezza, @quinnirill, @getify, @felixge, @trygve_lie, @brucel and @chrisblizzard for their inspiration and feedback.

Further reading:

- Freedom In the Cloud: Software Freedom, Privacy, and Security for Web 2.0 and Cloud Computing

- The Foundation for P2P Alternatives

- Social Networking in the cloud – Diaspora to challenge facebook

- The P2P Web (part one)

HTML5 Media, Seeking and the Buffered Attribute

It can be very exciting playing with new technologies, HTML5 media being a case in point. The spec is still evolving, and although native audio and video have only been around in any usable form for a little over a year, we are already seeing browser makers pushing the envelope and developers rushing to create new libraries.

We aim to incorporate features into the jPlayer library as they become available. Recently we have been looking at browsers’ ability to jump to a point in a track that has not yet downloaded: a seeking of sorts. All the major HTML5-supporting browsers allow this type of seek (with the exception of Safari for Windows), but at the moment it seems only Chrome and Safari (both mobile and desktop versions) have taken this a step further by implementing the buffered attribute. Firefox 4 also implements it, but is still in beta.

The buffered attribute allows us to determine what parts of a media track have been buffered so that we can seek or skip directly to that part without the need to pause.
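As a minimal sketch of how this can be used: the `buffered` attribute returns a TimeRanges object exposing `length`, `start(index)` and `end(index)`, so checking whether a given time has already been buffered is a short loop. The helper name `isTimeBuffered` is my own, not part of jPlayer or the spec.

```javascript
// Return true if `time` (in seconds) falls inside any buffered range.
// `buffered` is a TimeRanges-like object, e.g. audioElement.buffered.
function isTimeBuffered(buffered, time) {
  for (let i = 0; i < buffered.length; i += 1) {
    if (time >= buffered.start(i) && time <= buffered.end(i)) {
      return true;
    }
  }
  return false;
}
```

In a browser that implements the attribute you might call `isTimeBuffered(audio.buffered, 42)` before deciding whether to seek immediately or show a loading indicator.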

More info on the buffered attribute can be found in this article: HTML5 video ‘buffered’ property available in Firefox 4.

Mark B

Spreading Love and the Load with HTML5

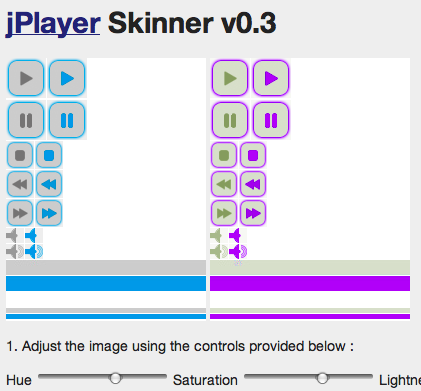

A couple of weeks ago I came across an interesting HTML5-based experiment: Canvas-based image manipulation by Patrick H. Lauke. Thinking this could be quite useful if we could save the resulting image, I cobbled it together with Jacob Seidelin’s Canvas2Image and suddenly I had a working prototype of a jPlayer Skinner.

If we were to try and create something similar without using HTML5 we would have needed either to use Flash or manipulate the image on the server. (Let’s just pretend Flash doesn’t exist for the purposes of this post). Even if you put to one side the added complexity of creating and integrating the serverside code, it makes much more sense to do this kind of manipulation clientside – the load is perfectly distributed, in fact all the server has to think about is serving up plain old text.
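To make the client-side part concrete, here is a small sketch of the general technique: read the canvas pixels, change them in JavaScript, write them back and export the result. Greyscale conversion is used purely as a stand-in effect, and the function names are hypothetical; this is not the Skinner’s actual code.

```javascript
// Convert RGBA pixel data (as found in ImageData.data) to greyscale in place.
function toGreyscale(pixels) {
  for (let i = 0; i < pixels.length; i += 4) {
    // Weighted sum approximating perceived brightness; alpha (i + 3) is untouched.
    const grey = Math.round(0.299 * pixels[i] + 0.587 * pixels[i + 1] + 0.114 * pixels[i + 2]);
    pixels[i] = grey;
    pixels[i + 1] = grey;
    pixels[i + 2] = grey;
  }
  return pixels;
}

// Browser-only usage: manipulate a canvas and export it, all on the client.
function greyscaleCanvas(canvas) {
  const context = canvas.getContext("2d");
  const image = context.getImageData(0, 0, canvas.width, canvas.height);
  toGreyscale(image.data);
  context.putImageData(image, 0, 0);
  return canvas.toDataURL("image/png"); // data URL the user can save
}
```

The server never sees the image at any point; it only has to deliver the page and the script.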

This is a huge win for HTML5 and one that not many people are talking about. Increasingly we are using clients’ CPUs as the workhorse for complex activities that previously could only be achieved on the server. This means more efficient web apps, less traffic between client and server and fewer cycles being consumed on the server.

In short HTML5 helps web apps scale.

HTML5 – The Revolution will not be Televised

The Seeds of Change

There’s a lot of buzz being generated about HTML5 just now and I for one welcome the discussion that it has provoked. I’d always kept half an eye on the ongoing controversy over whether it was better to use the XHTML or the HTML 4.01 standard. To add fuel to this fiery debate, as HTML5 gained traction it was announced that work on the XHTML 2 spec would be discontinued. Many people felt that this vindicated the HTML 4 camp’s arguments and that XHTML was dead. The truth, of course, was slightly more complicated, as HTML5 can reasonably be presented as XHTML. Either way, we now seem to have one standard to unite behind, which brings us closer to the designer’s HTML utopia, where markup can be written once and work across all browsers. I believe a critical point has been reached.

So what advantages does HTML5 offer? Well, it basically provides an open framework for a richer user experience. To name a few features without creating a huge list: it supports audio, video, vector-based graphics and animation, geolocation and drag and drop. Check out the spec for more info.

Browser vendors are only now starting to implement some of the HTML5 features within their latest and greatest releases. Safari 4, Firefox 3.5, Chrome 3 and even Opera 10 now support HTML5 to a greater or lesser extent, with Internet Explorer being the obvious ‘elephant in the room’. It’s true that the HTML5 spec has yet to be finalised and, depending on who you believe, will not be finalised until 2022, but it seems this is less important than it sounds. What we are starting to see is something relatively new: the web development community getting behind a standard and actively pushing it forward. Control lies, now more than ever, in the hands of web developers, the end-users, if you like, of the standards. It might seem futile to adopt a standard before it is finished, especially given that probably less than 10% of the installed browser base is currently taking advantage of it in any meaningful manner. It’s worth considering, though, that Google have adopted HTML5 as the markup of choice for their upcoming Wave product, and that WebKit, the rendering engine used by Chrome, Android, Safari, the Palm Pre’s OS and presumably the recently announced Google OS, is now starting to support HTML5.

Corporation and Community

It seems that it’s not so much about the corporations anymore, more about the community. Not all browsers support HTML5, so what does the community do about it? It creates ‘patches’ for these browsers. These patches are usually written in JavaScript and aim to introduce HTML5 compliance to browsers that don’t support it. HTML5 introduces many new features and behaviours, and various authors are working on different parts of the spec. Dean Edwards is making fantastic progress on making Web Forms 2.0 work across all browsers. Erik Arvidsson has done some great work creating a library for emulating the Canvas tag on Internet Explorer, as have others. Jacob Rask is working on HTML5 CSS Reset, and then there are people like us who hope to make contributions to the smaller aspects of the HTML5 spec such as audio. This isn’t a unified effort, at least not yet. But a common force that unites them is that they are all Open Source solutions, so anyone could come along, bundle them together and create a comprehensive patch for any browser. Of course, the more comprehensive the support, the more complex things become, and I imagine Internet Explorer 6 support is the worst-case scenario.
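Most of these patches start from the same step: detect whether the browser supports a feature natively and only step in when it doesn’t. As a minimal sketch for audio, assuming nothing beyond the standard `canPlayType` method on media elements (the function name and return values are my own):

```javascript
// Decide whether native HTML5 audio can be used for a given MIME type.
// `doc` is a document-like object; pass `document` in a browser.
function detectAudioSupport(doc, mimeType) {
  const audio = doc.createElement("audio");
  // Browsers without HTML5 audio create an unknown element with no canPlayType.
  if (typeof audio.canPlayType !== "function") return "none";
  // canPlayType returns "", "maybe" or "probably".
  return audio.canPlayType(mimeType) === "" ? "unsupported-format" : "native";
}
```

A patch would then fall back to another playback mechanism whenever the answer is anything other than `"native"`.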

The Fly in the Ointment

To diverge for a moment, let’s talk about Internet Explorer 6. IE6, to put it kindly, does things ‘in its own unique way’, and for this reason is the bane of many web designers’ lives. Some time ago I was experimenting with creating custom tags for IE6 and found out that although it is possible to implement them, it goes about this completely differently to any other browser. I’m guessing that being able to deal with custom tags is essential if you have to deal with tags that aren’t implemented in a particular browser. I’m not sure whether the current crop of ‘patches’ supports IE6, but I can certainly imagine that if they do, they’ve had to go around the houses to do so. IE6 unfortunately has a large install base inside many large corporations. Many companies rolled out intranet applications when IE6 was standard on the corporate desktop, and so these were targeted specifically at that one browser. It’s hard for a company to justify re-writing these applications to work on any other browser. The “if it ain’t broke – don’t fix it” mentality is a strong one, especially where spending money is concerned. While web sites and internet-based applications continue to work, corporations will have no incentive to upgrade or provide another browser for surfing the web with. There are signs, though, that this will change in the near future. Whole movements have sprung up against IE6, and large and established sites have discontinued support or are thinking seriously about dropping it. The IE6 legacy cannot go on for ever.

There is, however, a price to pay for using these ‘patches’. Undoubtedly, browsers that don’t support HTML5 natively will run more slowly using the patches to support it. They do anyway, you might counter – people use them just the same. JavaScript is light, it can be compressed, it can be cached, it can be hosted on CDNs. I personally don’t think this is a real issue, and if users find that it is, they can always upgrade. Accessibility is the other key issue that needs to be addressed, but I will leave that for another discussion.

May we Live in Interesting Times

If you think about it for a minute, what is going on now, happening right under our very noses, is nothing short of a revolution: a seismic shift in power towards the community and away from the browser vendors, the consequences of which should not be underestimated. The W3C has loosened its grip on the HTML specs and we now have the WHATWG community, and it appears that most browser makers are listening attentively to the new combined web design and standards community. It’s all about the community. It doesn’t even seem to matter when the spec will be finalised; significant chunks of it are being implemented now, people are using them and the community is developing for them. Slowly but very surely we are approaching the web designer’s nirvana, where not only does a modern markup language incorporating many new and needed features finally exist, but importantly an environment is being created where designers have the possibility to implement these features once, knowing that in some way or another, they can make them work on all target browsers. All of this is powered by the web development community, who are finally taking control of their own destiny.

¡Viva La Revolución!
