BBC

Breaking Out – Web Audio and Perceptive Media

By Mark Boas

Sometimes when we take on projects we don’t really know what we’re letting ourselves in for. To know fully, we’d have to spec things out to the nth degree, and who wants to do that, or has the time? Well, people like NASA do, but we don’t.

When we were given the opportunity to work on something called Perceptive Media, all I saw was a colourful but amorphous form ahead. Although it was explained to me (as well as it could be), I still didn’t have a real clue what it would end up being or, crucially, what would be involved in making it a reality.

But hey, it’s the BBC, right? You don’t often get to work with the BBC. You know, that BBC you spent so much time watching and listening to when you were growing up. The same BBC who make Doctor Who? That BBC!

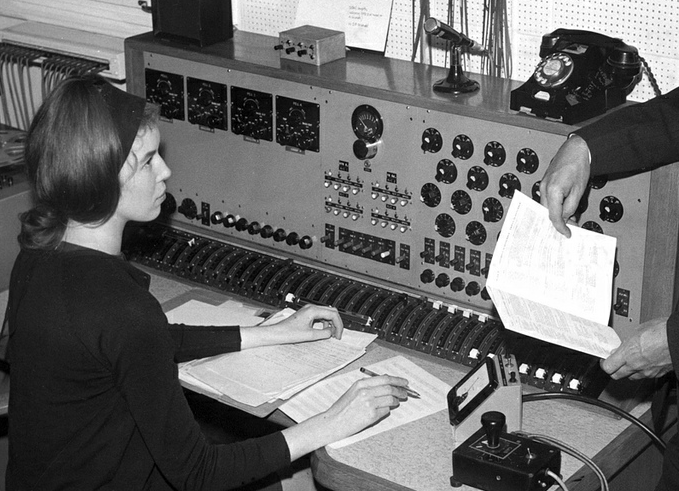

Time-lords aside (or not, as the case may be), being a bit of an audio-geek I’ve always been fascinated by the BBC’s audio output, especially the Radiophonic Workshop and Delia Derbyshire’s work in particular, and this was an audio gig coming from the R&D department of the same organisation. How could we turn it down?

So I jumped at the chance without really knowing what lay in store, which incidentally was very unfair on my colleague, who ended up doing the lion’s share of the work.

The brief went something like this: we want to create a web-audio based demo that will adjust its content to the listener, based on information we can ascertain about them. Oh, and we want it to sound as natural as possible, so that the listener may not suspect that the content is being tailored.

I soon found out that this involved generating audio on-the-fly and applying convolution reverb and other effects so that the audio sounded natural in various environments. What this all meant was that we needed to use an advanced audio API. I’ve had fun investigating advanced audio APIs before. They differ from the standard audio APIs in that they are designed to let you not only play audio files, but also generate and alter audio.

This is exciting because we are finally getting around to doing stuff with audio that we have been doing with text for years. It also crosses over with something I’m looking at called Hyperaudio.

Experimentation

So with this cross-over in mind, I felt I could manage to find the time to experiment and create some proof of concept demos, and this led me to try out two libraries: Speak.js and AudioLib.js.

The Speak.js demo pretty much demonstrates what it can do. You put text in and speech comes out. The library itself is ported from eSpeak using something called Emscripten and actually allows you to generate audio pretty much in real-time by constructing a data URI in WAV format.
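To give a feel for the data URI trick, here is a minimal sketch of wrapping raw samples in a WAV header and turning the result into a data URI. It is not Speak.js’s own code: the function name `wavDataUri` and the test tone are made up for illustration, and it assumes mono 16-bit PCM.

```javascript
// Build a mono 16-bit PCM WAV file in memory and return it as a data URI.
// `samples` is an array of floats in the range [-1, 1].
// (Hypothetical helper for illustration, not part of Speak.js.)
function wavDataUri(samples, sampleRate) {
  const bytesPerSample = 2;
  const dataSize = samples.length * bytesPerSample;
  const buffer = new ArrayBuffer(44 + dataSize);
  const view = new DataView(buffer);

  const writeText = (offset, text) => {
    for (let i = 0; i < text.length; i++) {
      view.setUint8(offset + i, text.charCodeAt(i));
    }
  };

  writeText(0, "RIFF");
  view.setUint32(4, 36 + dataSize, true);                 // file size minus 8 bytes
  writeText(8, "WAVE");
  writeText(12, "fmt ");
  view.setUint32(16, 16, true);                           // size of the fmt chunk
  view.setUint16(20, 1, true);                            // format 1 = PCM
  view.setUint16(22, 1, true);                            // one channel
  view.setUint32(24, sampleRate, true);
  view.setUint32(28, sampleRate * bytesPerSample, true);  // byte rate
  view.setUint16(32, bytesPerSample, true);               // block align
  view.setUint16(34, 16, true);                           // bits per sample
  writeText(36, "data");
  view.setUint32(40, dataSize, true);

  samples.forEach((sample, i) => {
    const clamped = Math.max(-1, Math.min(1, sample));
    view.setInt16(44 + i * bytesPerSample, Math.round(clamped * 32767), true);
  });

  // btoa expects a binary string with one character per byte.
  let binary = "";
  new Uint8Array(buffer).forEach((byte) => {
    binary += String.fromCharCode(byte);
  });
  return "data:audio/wav;base64," + btoa(binary);
}

// A tenth of a second of a 440 Hz tone at 8 kHz.
const tone = Array.from({ length: 800 }, (_, i) =>
  Math.sin((2 * Math.PI * 440 * i) / 8000)
);
const uri = wavDataUri(tone, 8000);
// In a browser you could then play it with: new Audio(uri).play();
```

Because the whole file lives in a string, nothing needs to be fetched from a server, which is what makes this approach workable for speech generated on the spot.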

We wanted it to work well on all browsers that support some advanced audio API. Firefox’s Audio Data API adopts a more low-level approach, but in theory at least you should be able to do anything that WebKit’s Web Audio API does, only with raw JavaScript. AudioLib.js provides the means to do this and also abstracts the differences between the two APIs so that you can write one set of code for both.

Unfortunately, time and budget restrictions meant that we couldn’t create the version we wanted to make: one that would work similarly in all browsers that provide an advanced audio API. As it seems that the future W3C standard for advanced audio will be based on the Web Audio API, we decided to concentrate on that.

Personalisation

The data we use to personalise the broadcast comes from a number of sources. The main differentiator is the listener’s location, which we obtain via the geolocation API and use to determine local weather, radio streams and landmarks, which are then subtly inserted into the audio stream. The only restriction is that you must be in the UK to really encounter the differences. This is partly because we use some BBC resources that are only available in the UK, but also so that we can keep the data manageable for what, after all, is just a demo.
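As a rough sketch of the location step, the callback-based geolocation API can be wrapped in a Promise and combined with a simple UK check. The bounding box, the function names and the `personalise` flag are all assumptions made for illustration; the demo’s actual lookup of weather, radio streams and landmarks is not shown.

```javascript
// Hypothetical bounding box: a deliberately crude approximation of the UK.
const UK_BOUNDS = { south: 49.8, north: 60.9, west: -8.7, east: 1.8 };

// True when the coordinates fall inside the rough UK box above.
function isRoughlyInUK(latitude, longitude) {
  return (
    latitude >= UK_BOUNDS.south &&
    latitude <= UK_BOUNDS.north &&
    longitude >= UK_BOUNDS.west &&
    longitude <= UK_BOUNDS.east
  );
}

// Wrap getCurrentPosition in a Promise. `geolocation` is normally
// navigator.geolocation; it is passed in so the function can be tried
// outside a browser with a stand-in object.
function locateListener(geolocation) {
  return new Promise((resolve, reject) => {
    geolocation.getCurrentPosition((position) => {
      const { latitude, longitude } = position.coords;
      resolve({
        latitude,
        longitude,
        personalise: isRoughlyInUK(latitude, longitude),
      });
    }, reject);
  });
}

// In a browser:
// locateListener(navigator.geolocation).then((where) => console.log(where));
```

If the listener declines to share their location the Promise rejects, and a sensible fallback would be to play the untailored version.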

The second source of information about the listener comes from a slightly more sinister place. For fun I’d been working with a good friend of mine, Matteo Spinelli, on a project called Underpants, and while looking at the issue of browser traceability across websites we figured out how to determine which social networks a browser is logged into. Cross-over struck once more, and we used this technique to personalise the part where our outdoor-challenged hero is urged to log out of her favourite social network and leave the apartment.

Try the demo!

Advanced Web Audio

So what changes between the version of the broadcast that uses the Web Audio API and the fallback version that doesn’t? Well, there are a number of factors, some of them quite subtle. Speak.js outputs a robot-style voice, which is fine for our use as the electronic voice of the lift. But we wanted to make sure it would fit properly into the various environments in which it was set. To do this we used something called convolution reverb. In short, this reverb allows us to apply the right sort of audio ambience to a sound. So if a sound is coming from a lift, we apply a lift-type echo. We also apply the same ‘echo’ to the streamed radio broadcast that is played at a certain point in the broadcast.
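For anyone who hasn’t met convolution reverb in the Web Audio API, the wiring looks roughly like the sketch below. It is a simplified illustration rather than the code from the demo: `applyReverb` and the wet/dry mix are assumptions, and `impulseBuffer` stands for an already decoded recording of the space’s impulse response (a lift, say).

```javascript
// Route `source` through a convolution reverb built from `impulseBuffer`,
// mixing the reverberated ("wet") signal with the untreated ("dry") one.
// `wetLevel` is between 0 (no reverb) and 1 (reverb only).
// (Hypothetical helper for illustration.)
function applyReverb(context, source, impulseBuffer, wetLevel) {
  const convolver = context.createConvolver();
  convolver.buffer = impulseBuffer;

  const wet = context.createGain();
  const dry = context.createGain();
  wet.gain.value = wetLevel;
  dry.gain.value = 1 - wetLevel;

  // Wet path: source -> convolver -> wet gain -> speakers.
  source.connect(convolver);
  convolver.connect(wet);
  wet.connect(context.destination);

  // Dry path: source -> dry gain -> speakers.
  source.connect(dry);
  dry.connect(context.destination);

  return { convolver, wet, dry };
}
```

In a browser the impulse response would typically be loaded as an audio file and decoded with the context’s `decodeAudioData` before being handed to this function. Note that this uses the current method name `createGain`; WebKit builds from the time of this project exposed it as `createGainNode`.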

The fact that we are using an advanced audio API also enables us to add various other effects to other pieces of audio. However, we soon found that we needed to be sensible with our audio design, since convolution with the Web Audio API takes up a fair amount of CPU. At one point a development error meant that a unique convolution was used for each sound, and this was found to start failing at around the 14th.
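One way to keep the number of convolutions down is to share a single convolver per environment rather than creating one per sound. The sketch below shows that idea only; `createReverbPool` and the environment names are hypothetical and this is not necessarily how the demo is structured.

```javascript
// Keep one ConvolverNode per environment so that every sound set in, say,
// the lift shares the same (CPU-hungry) convolution.
// `impulseBuffers` maps an environment name to its decoded impulse response.
// (Hypothetical helper for illustration.)
function createReverbPool(context, impulseBuffers) {
  const convolvers = new Map();

  return {
    // Return the shared convolver for `environment`, creating it on first use.
    get(environment) {
      if (!convolvers.has(environment)) {
        const impulse = impulseBuffers[environment];
        if (!impulse) {
          throw new Error("No impulse response for " + environment);
        }
        const convolver = context.createConvolver();
        convolver.buffer = impulse;
        convolver.connect(context.destination);
        convolvers.set(environment, convolver);
      }
      return convolvers.get(environment);
    },

    // Number of convolvers created so far.
    size() {
      return convolvers.size;
    },
  };
}

// Usage: every lift sound connects to the same node.
// liftVoice.connect(pool.get("lift"));
// liftDoors.connect(pool.get("lift"));
```

With this arrangement the number of convolutions grows with the number of environments, not with the number of sounds.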

We also made use of audio filters. For example, the radio podcast has a high-pass filter applied to it, which makes it sound ‘tinny’. Another example is when Harriet opens her apartment door at the start, where we apply both filters and faders. The Web Audio API uses a node-based approach, which means that you can feed the results of one effect into the next, so we can apply filters, faders and convolutions to any audio source. To achieve all of this we made heavy use of the Web Audio API’s AudioParam, which allows nearly any attribute to be changed over time using handy linear ramps. We used this to fade in and out, or to cross-fade between filtered and unfiltered outputs.
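The node chaining and AudioParam ramps described above can be sketched as follows. This is an illustration under assumptions, not the demo’s code: `createTinnyCrossfader`, `fadeTo` and the cutoff value are invented names and numbers, while the node and AudioParam methods used are the standard Web Audio ones.

```javascript
// Split `source` into an unfiltered path and a high-pass filtered ("tinny")
// path, each with its own gain node, so the two can be cross-faded.
// (Hypothetical helper for illustration.)
function createTinnyCrossfader(context, source, cutoffHz) {
  const filter = context.createBiquadFilter();
  filter.type = "highpass";
  filter.frequency.value = cutoffHz;

  const filteredGain = context.createGain();
  const plainGain = context.createGain();
  filteredGain.gain.value = 0; // start fully unfiltered
  plainGain.gain.value = 1;

  source.connect(filter);
  filter.connect(filteredGain);
  filteredGain.connect(context.destination);

  source.connect(plainGain);
  plainGain.connect(context.destination);

  // Ramp both gains linearly over `seconds`, starting now.
  // filteredAmount = 1 means only the tinny path is audible.
  function fadeTo(filteredAmount, seconds) {
    const now = context.currentTime;
    const targets = [
      [filteredGain.gain, filteredAmount],
      [plainGain.gain, 1 - filteredAmount],
    ];
    for (const [param, target] of targets) {
      param.cancelScheduledValues(now);
      param.setValueAtTime(param.value, now); // anchor the start of the ramp
      param.linearRampToValueAtTime(target, now + seconds);
    }
  }

  return { filter, filteredGain, plainGain, fadeTo };
}

// Usage: move to the tinny radio sound over three seconds.
// const fader = createTinnyCrossfader(context, radioSource, 2000);
// fader.fadeTo(1, 3);
```

A plain fade in or out is the same idea with a single gain node ramped between 0 and 1.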

So the Web Audio API version applies filters, faders and convolutions to the audio, whereas the standard HTML5 audio version does not. That’s not to say that, given enough time, we couldn’t have achieved the same effect using the Audio Data API included in Firefox. But since the new audio standard is slated to be largely based on the Web Audio API, we decided for the purposes of this demo to concentrate our energy in this area.
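Choosing between the two versions comes down to feature detection. A minimal sketch, assuming made-up mode names and a helper called `chooseAudioMode` (the demo may well decide this differently):

```javascript
// Decide which version of the broadcast a browser can run.
// `globalObject` is normally `window`; it is a parameter so the logic can be
// tried outside a browser. The returned mode names are hypothetical.
function chooseAudioMode(globalObject) {
  // WebKit browsers of the time exposed the constructor with a prefix.
  const ContextClass =
    globalObject.AudioContext || globalObject.webkitAudioContext;

  if (typeof ContextClass === "function") {
    return "web-audio"; // filters, faders and convolutions available
  }
  if (typeof globalObject.Audio === "function") {
    return "html5-audio"; // plain playback only
  }
  return "none";
}

// In a browser: const mode = chooseAudioMode(window);
```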

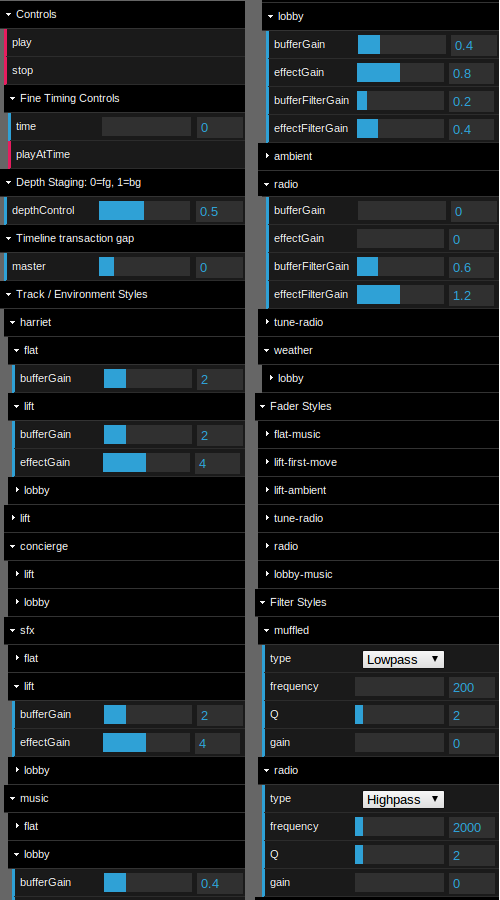

Once we’d got the core of the functionality working, we set to work on creating a control panel to allow us to tweak every single one of the volumes, filters, faders and convolutions.

We wanted to be able to demonstrate to editors of audio how we could tweak pace, reverb and sound-effects in real-time. Although this required a complete code re-factor, we hope that the fact that the whole thing is pretty much customisable makes this a powerful demo that will be useful to others dabbling in this area, as well as to ourselves.

So this is a good time to mention that all the source-code is open source and that you can grab it from the following GitHub location https://github.com/happyworm/PerceptiveMedia.

Conclusions

I think this was definitely an interesting and worthwhile experiment. However, as its aim was to be subtle, it purposely does not make the potential of the technology immediately clear. Technically, what we achieved turned out to be a kind of audio framework that lets you create and tweak audio as it’s being played. This is useful for producers of audio who want to see how effects and timings can alter the experience, and is especially useful for applications such as games, where perhaps you want to give your sound-effects context.

I also feel that these techniques could be used for applications such as dynamic story-telling. My daughter, all too often, asks me to tell her a story featuring robots, dinosaurs and goblins, and all too often I fall back on the same old principles and formulas of children’s story-telling: the rule of three and so on. Post happy-ever-after she often wants me to add to the story, getting me to fill in or clarify some of the details. It’s not a huge stretch to imagine that we could create dynamic story-telling applications for kids. A pinch of AI here, some personalisation there and a heavy dose of randomness might just be enough to keep them happy for a bit.

So there we have it, one small step closer to the old Star Trek computer. (Did Doctor Who’s have a voice interface? I forget.) We’ve already seen the application of voice input with software such as Siri. It shouldn’t be too much longer until audio interfaces start to become commonplace and, with so much current emphasis on the visual, I think this could be quite refreshing.

Epilogue

Question: what’s harder to debug than an intermittent bug? Answer: an intermittent bug that only manifests itself when you deploy to the server. Crazy, I know, and totally unexpected to us, and for this reason you may see issues when running on Firefox (but not Opera). Being supporters of Mozilla and Firefox, we were much dismayed by this bug and spent a significant amount of time trying to get to the bottom of it. Unfortunately, due to its nature, we were only able to put in a loose bug report. If anybody wants to help us solve this issue, please feel free to take a look, even if it’s just to download the application from GitHub and verify that it works locally for you.

Thanks then to Ian Forrester and Tony Churnside for the opportunity to work with them and their team at BBC R&D, and of course to Sarah Glenister for the excellent script and Angie Chan for the great artwork. Thanks also to Jussi Kalliokoski for helping us work with AudioLib.js. But most of all I want to thank my colleague Mark Panaghiston for working tirelessly behind the scenes, not only on the significantly challenging audio aspects of the project but also in going above and beyond to integrate the visual aspects and even source and set up the hosting.

Related Articles

Writing for Perceptive Media

Illustrations for BBC R&D’s Perceptive Media Demo: Breaking Out

What is Perceptive Media?

BBC demonstrates revolutionary ‘perceptive media’

Perceptive Media Launch at Social Media Cafe Manchester

The BBC unveils its first ‘Perceptive Media’ experiment – and you can try it now

