Hyperaudio at the Mozilla Festival

This year has run contrary to previous years of my life in that it seems to have lasted much longer than the year before. This may have something to do with a small addition to the family who arrived in March and the consequent increase in waking hours, but I prefer to think it’s because things are moving so quickly in that wonderful place we call the World Wide Web that too much seems to have happened to compress into a single year.

It was only a year ago, for example, that I started tinkering with jPlayer to couple text and audio, and was asked to show off a very small demo at the first Mozilla Festival in Barcelona.

A few months later I was introduced to, and became involved with, something known as Hyperaudio and was given the opportunity to create a couple of proof-of-concept demos: Denmark Radio’s Hyperdisken Demo and the Radiolab/Soundcloud collaboration (a shout-out to Henrik Moltke for doing much of the groundwork for these demos, and to my colleagues at Happyworm, without whom they just wouldn’t have been possible).

Further on through the arc of this epic year, I took part in the Mozilla Knight Journalism challenge, where I was encouraged to research and blog about some ideas for a tool I called the Hyperaudio Pad. Happily, I was flown over to Berlin with other like-minded MoJo peeps for a week of intense discussion, collaboration and hacking, and managed to get the first semblance of an actual product out of the door.

Which brings me, in a round-about way, to this year’s Mozilla Festival, where I will again be demoing and chatting about the coupling of text and media and the other things that Hyperaudio is (or could be) about. This will all happen at the Science Fair on Friday, 4th of November, and at a Hyperaudio Workshop / Design Challenge on Saturday, 5th of November.

The Science Fair will be all about demos, chatting to people interested in the general Hyperaudio concept and making sure I don’t spill my drink over my laptop. Meanwhile, in the workshop we hope to put the Hyperaudio Pad through its paces and actually make something with it, while gathering ideas and maybe hacking on some of them into the bargain. It should be a fun mix of hacks, hackers, designers, audio buffs and basically anybody curious enough to get involved. The session will be fairly free-flowing, but the plan so far is to:

- Briefly chat about and demo the Hyperaudio concept, what it can do and with whom we can collaborate.

- Break out into groups to come up with new ideas, applications and designs.

- Reconvene and talk about the groups’ ideas.

- Break out into groups to work on these ideas, whether that means creating a programme, UI mockups, story-boarding functionality or even developing small demos.

- Create something we can show other people: hopefully a program created with the Hyperaudio Pad, but also some new ideas.

Hopefully we can cram this all into 3 hours. Folk from the BBC, Universal Subtitles, Sourcefabric and other cool people are planning to attend, which should make for some interesting crossovers and great opportunities to consult and maybe even influence organisations in a position to use the stuff we’re making. Additionally, the BBC are providing us with some fantastic media to work with.

All will be revealed over the weekend, so book your place and get your eyes and ears ready to be part of the Hyperaudio experience.

The Hyperaudio Pad – a Software Product Proposal

Imagine

Imagine that making your own video or audio news programs was really easy.

Imagine if you could pull together news stories from various sources, combine them and publish the results in minutes.

Imagine that the story you made came complete with a transcript, was fully accessible and could be picked up by search engines.

Imagine that you could easily embed your story into a web page and that others could incorporate your story into new stories.

Imagine that viewers of your story could share any part of it in seconds.

Imagine all this and you are imagining the functionality of the Hyperaudio Pad.

Listen to a use case.

Watch the screencast.

Hypertranscripts

Currently, putting together a media program will probably involve some fairly complex audio/video editing software, and the result is a fairly ‘static’ representation. The whole process is time-consuming, wasteful and inflexible.

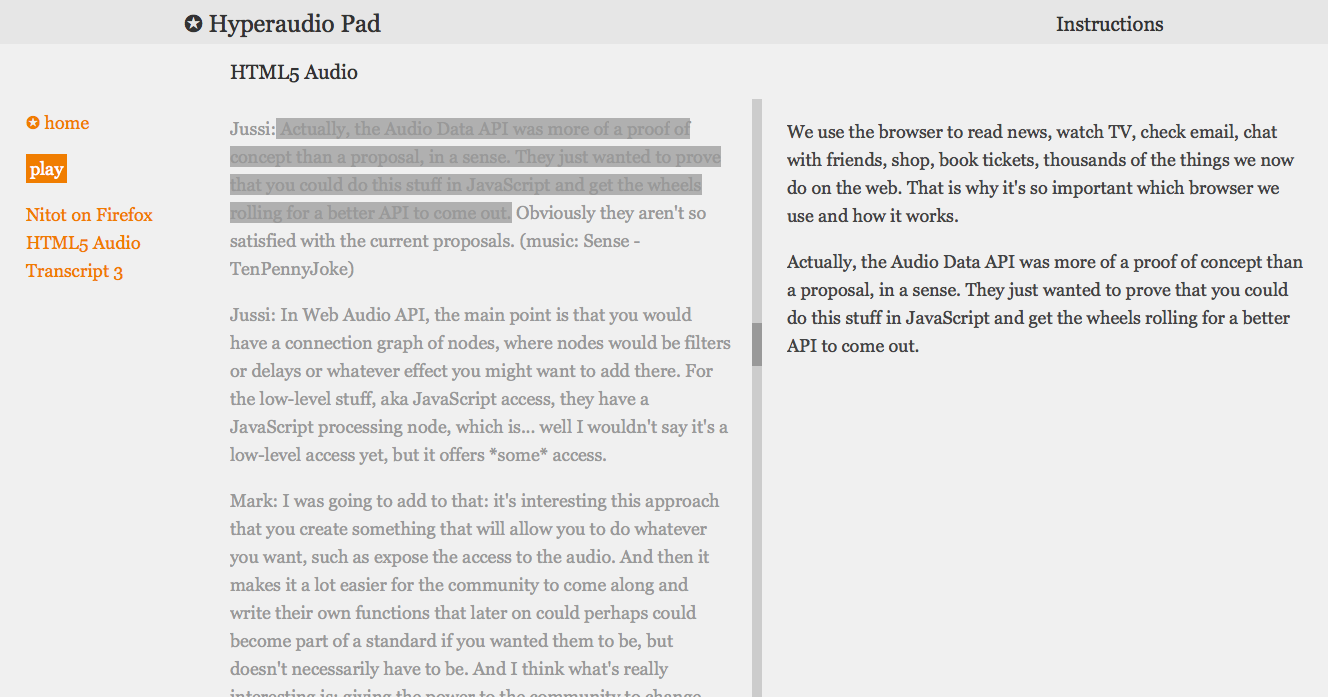

By changing the way we think about media, we can create a new way of manipulating it. The Hyperaudio Pad allows quick manipulation of media and takes advantage of a form of media representation referred to as a hypertranscript.

When we tell a story we invariably use the spoken word. The spoken word can be transcribed, and once transcribed it can be used as an accurate reference to the media it is associated with.

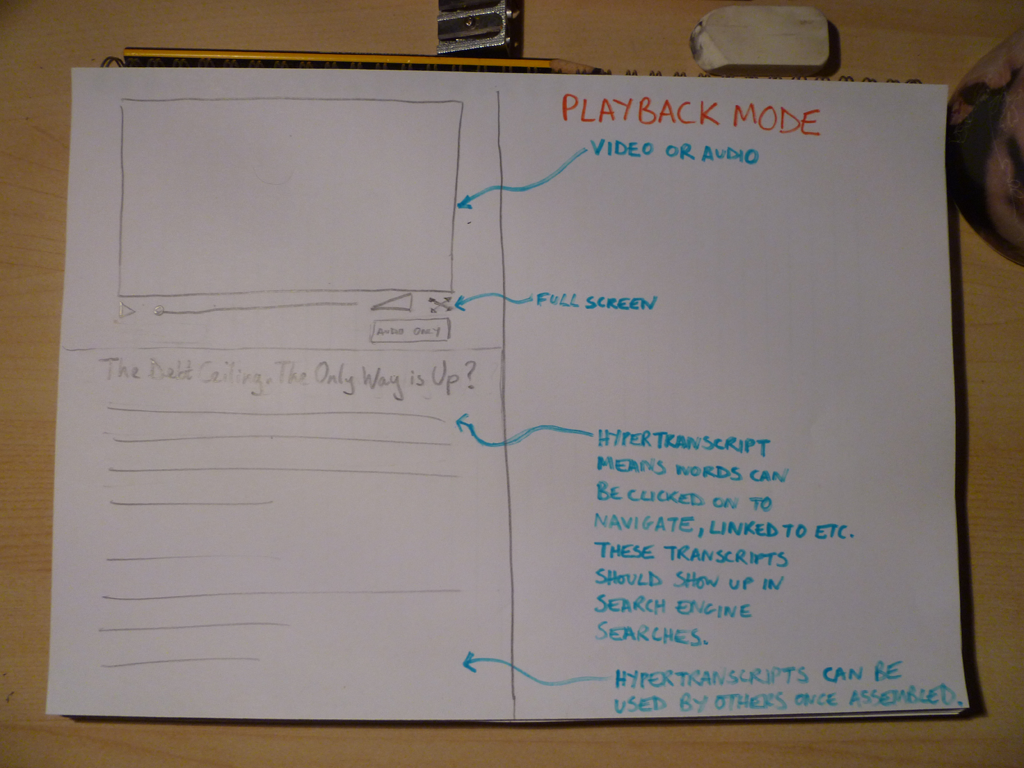

Hypertranscripts are part of a broader concept dubbed Hyperaudio by Henrik Moltke. They are transcripts hyperlinked to the media they represent, a type of fine-grained subtitle. They are separate entities from the media they describe, existing as word-level aligned references expressed in HTML.
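To make word-level alignment concrete: if each word carries a start time, the word to highlight during playback can be found with a binary search over those times, and clicking a word simply seeks the player to that word’s start. The sketch below is a minimal Python illustration under that assumption; `word_index_at` is a hypothetical helper, not part of jPlayer, Popcorn.js or any Hyperaudio code.

```python
from __future__ import annotations

from bisect import bisect_right


def word_index_at(starts: list[float], current_time: float) -> int:
    """Return the index of the word being spoken at current_time (seconds).

    Assumes `starts` holds each word's start time, sorted in ascending order.
    Returns -1 if playback has not yet reached the first word.
    """
    # bisect_right counts how many words have started at or before current_time.
    return bisect_right(starts, current_time) - 1


# Hypothetical timings for a four-word transcript.
starts = [0.0, 0.4, 0.9, 1.5]
print(word_index_at(starts, 1.0))  # 2: the third word is being spoken
```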

Tools and services exist to create Hypertranscripts, and word-level timing can even be approximated from subtitles. In the past I have used libraries such as Popcorn.js and jPlayer, along with data from the Universal Subtitles project.
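One simple way to approximate word timings from a subtitle cue is to spread the cue’s duration across its words in proportion to their length in characters. That proportionality is an assumption made for illustration (real speech does not divide so neatly), and the sketch does not model the Universal Subtitles data format; it only takes a cue’s start time, end time and text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TimedWord:
    text: str
    start: float  # seconds
    end: float    # seconds


def approximate_word_timings(cue_start: float, cue_end: float, cue_text: str) -> list[TimedWord]:
    """Spread one subtitle cue's duration across its words.

    Assumption: each word takes time in proportion to its character count.
    """
    words = cue_text.split()
    if not words:
        return []
    total_chars = sum(len(w) for w in words)
    duration = cue_end - cue_start
    timed = []
    t = cue_start
    for w in words:
        share = duration * len(w) / total_chars
        timed.append(TimedWord(w, round(t, 3), round(t + share, 3)))
        t += share
    timed[-1].end = cue_end  # avoid floating-point drift at the end of the cue
    return timed
```

For example, `approximate_word_timings(10.0, 12.0, "the burgeoning web")` gives `burgeoning` the span 10.375 to 11.625 seconds, because it accounts for 10 of the cue’s 16 characters.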

The good news is that Hypertranscripts already exist. Here are a few examples:

Screencast [youtube] of the Danish Radio Demo

Screencast [youtube] of the Radiolab Demo.

Quick and simple demo I put together.

Demos by others include: Minnesota Public Radio Demo, Voxallead News and Liris Interactive Transcript

Using Hypertranscripts we can:

- Navigate through the media via the text.

- Select and play parts of the media by highlighting the relevant parts of the text.

- Share parts of the media creating URLs that contain start and end points.

- Make our media more discoverable (via search engines).

- Make our media more accessible.

- More easily visualize the media.

- Combine, embed and easily integrate media (using simple tools).
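Sharing part of the media can be sketched with the temporal syntax from the W3C Media Fragments URI specification, where `#t=start,end` (in seconds) is appended to a media URL. A run of highlighted words maps to the start of its first word and the end of its last. The URL below is a placeholder, the helper names are hypothetical, and the sketch handles only the plain `t=start,end` form rather than the whole specification.

```python
from __future__ import annotations

from urllib.parse import urldefrag


def _seconds(x: float) -> str:
    # Millisecond precision without trailing zeros ("20.000" -> "20").
    return f"{x:.3f}".rstrip("0").rstrip(".")


def fragment_url(media_url: str, start: float, end: float) -> str:
    """Build a Media Fragments temporal URI: media_url#t=start,end."""
    if end <= start:
        raise ValueError("end must be after start")
    base, _ = urldefrag(media_url)  # drop any existing fragment
    return f"{base}#t={_seconds(start)},{_seconds(end)}"


def selection_url(media_url: str, words: list[tuple[float, float]]) -> str:
    """Share a run of highlighted words given as (start, end) pairs."""
    return fragment_url(media_url, words[0][0], words[-1][1])


def parse_fragment(url: str) -> tuple[str, float, float]:
    """Recover (media_url, start, end) from a plain #t=start,end URI."""
    base, frag = urldefrag(url)
    if not frag.startswith("t="):
        raise ValueError("no temporal fragment")
    start, end = frag[2:].split(",")
    return base, float(start), float(end)
```

For instance, selecting three words spanning 12.5 to 20 seconds of `http://example.com/interview.mp3` yields `http://example.com/interview.mp3#t=12.5,20`, which browsers that support media fragments can start playing at the right point.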

The Hyperaudio Pad

The Hyperaudio Pad is a tool that allows users to easily assemble spoken-word audio or video from Hypertranscripts.

It will allow users to create their media by simply copying and pasting text from existing transcripts into a new transcript. These Hypertranscripts link to the actual media that they transcribe, so when parts of the transcripts are copied the references to their associated media are also copied.

Note that the media itself is not copied, just references to parts of it. The result is a mash-up of video or audio and an associated representative transcript.
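One way to picture that mash-up: each pasted word carries a reference to its source media and time range, and runs of words that sit next to each other in the same source can be merged into a single playback segment, giving a simple edit list for a player. The sketch below assumes that word-reference shape, placeholder file names and a 0.5 second merge threshold; all are illustrative choices rather than part of the Hyperaudio Pad design.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class WordRef:
    src: str      # URL of the media the word was copied from (hypothetical)
    start: float  # seconds
    end: float    # seconds


@dataclass
class Segment:
    src: str
    start: float
    end: float


def to_segments(words: list[WordRef], max_gap: float = 0.5) -> list[Segment]:
    """Merge runs of pasted words into contiguous playback segments.

    A word joins the previous segment when it comes from the same source and
    starts between 0 and max_gap seconds after that segment ends.
    """
    segments: list[Segment] = []
    for w in words:
        last = segments[-1] if segments else None
        if last is not None and last.src == w.src and 0 <= w.start - last.end <= max_gap:
            last.end = w.end  # extend the current segment
        else:
            segments.append(Segment(w.src, w.start, w.end))  # start a new one
    return segments
```

Playing the resulting segments in order reproduces the new story, while the original media files stay untouched.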

The tool will be:

- Web-based, cross-browser, cross-platform (working on mobile devices) and open source.

- Simply presented, taking cues from minimalist distraction-free tools such as iAWriter.

- Intuitive and extremely easy to use, taking advantage of the text editing paradigm.

- Extensible – written in such a way that it can easily be built upon.

The interface should be clear and minimal. The user can search for and choose from existing hypertranscripts, open them, play them and copy parts of them to their ‘document’, all from within the same page.

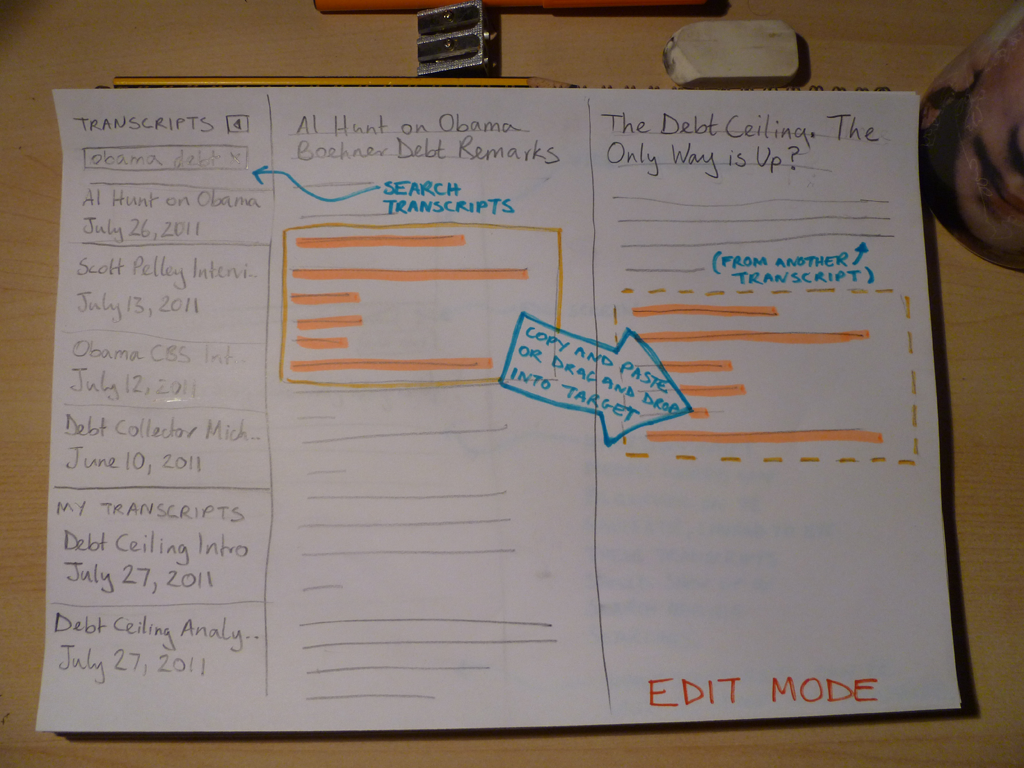

Edit Mode

Playback Mode

Who is this tool for?

- Newsroom journalists who need to very quickly assemble media from different existing sources and perhaps adapt the result as news unfurls.

- Podcasters, citizen journalists and mediabloggers who want a free and easy way of putting together media programs or newscasts.

- Anyone who wants their resulting media programs to include a transcript for increased accessibility and visibility.

- Anybody who is happy for their results to be taken apart, re-combined or referenced by others.

How does it work?

Hypertranscripts are essentially defined in HTML. The simplest possible example for use by a tool such as the Hyperaudio Pad could be:

WORD-FROM-THE-TRANSCRIPT

Example:

burgeoning

Note that we can extend this format should we wish to include metadata and other data, for example:

WORD-FROM-THE-TRANSCRIPT

(from Julien Dorra’s MetaFragment proposal document)

Transcripts will contain a series of these marked-up words, each individual word containing enough data to describe its associated media and the time at which it occurs in that media.

Note that copying the Hypertranscript into any text editor will result in valid HTML and so can be used in other tools and applications external to the Hyperaudio Pad.
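Since a Hypertranscript is ordinary HTML, pasted content can be read back into word references with a standard HTML parser. The sketch below assumes a hypothetical markup, `<span data-src="URL" data-start="SECONDS" data-end="SECONDS">word</span>`, with attribute names invented for illustration rather than taken from the format described above, and uses Python’s standard `html.parser` module.

```python
from __future__ import annotations

from html.parser import HTMLParser


class HypertranscriptParser(HTMLParser):
    """Collect (word, src, start, end) tuples from hypertranscript HTML.

    Assumed (hypothetical) markup for each word, with no nested spans:
        <span data-src="URL" data-start="SECONDS" data-end="SECONDS">word</span>
    """

    def __init__(self) -> None:
        super().__init__()
        self.words: list[tuple[str, str, float, float]] = []
        self._attrs: dict[str, str | None] | None = None  # attrs of the open word span
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "span" and "data-src" in attrs:
            self._attrs, self._text = attrs, []

    def handle_data(self, data):
        if self._attrs is not None:  # only keep text inside a word span
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "span" and self._attrs is not None:
            a = self._attrs
            self.words.append(("".join(self._text).strip(), a["data-src"],
                               float(a["data-start"]), float(a["data-end"])))
            self._attrs = None


def parse_hypertranscript(html: str) -> list[tuple[str, str, float, float]]:
    parser = HypertranscriptParser()
    parser.feed(html)
    parser.close()
    return parser.words
```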

It is likely that the user’s resulting transcript will reference several different pieces of media, so it may be beneficial for the application to ‘load’ and possibly cache the various media sources in advance for the smoothest possible playback. We can achieve this by parsing the transcript after every save.
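A minimal sketch of that save-time pass, assuming the transcript has already been reduced to one (source URL, start, end) triple per word: it records, for each source in order of first appearance, the overall time span the transcript needs, which an application could use to decide what to fetch or cache first. How the Pad would actually load or cache media is left open here, and the file names are placeholders.

```python
from __future__ import annotations


def media_to_preload(
    words: list[tuple[str, float, float]],
) -> dict[str, tuple[float, float]]:
    """Map each media URL to the overall time span the transcript uses from it.

    `words` holds one (src, start, end) triple per word, in document order.
    Dicts keep insertion order, so sources appear in order of first use and
    the earliest-needed media can be requested first.
    """
    spans: dict[str, tuple[float, float]] = {}
    for src, start, end in words:
        if src in spans:
            lo, hi = spans[src]
            spans[src] = (min(lo, start), max(hi, end))
        else:
            spans[src] = (start, end)
    return spans
```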

Future developments could include a simple scripting language that users can insert between transcripts to make transitions smoother, and possibly even to add background music or sound effects, for example:

[fade out over 5 seconds]

or

[background fade in 'threatening music' over 3 seconds]

and then at the bottom:

[reference 'threatening music' http://soundcloud.com/some.mp3]
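These bracketed commands are only a proposal, so any grammar for them is an assumption. The sketch below accepts exactly the three shapes shown (a fade, a background fade of a named sound, and a reference mapping a name to a URL), resolves names against the references and computes a gain for a point within a fade. The regular expressions and the linear gain curve are illustrative choices; equal-power curves are a common alternative.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Grammar assumed from the examples above; not a defined Hyperaudio syntax.
FADE = re.compile(
    r"\[(?:(background) )?fade (in|out)(?: '([^']+)')? over (\d+(?:\.\d+)?) seconds?\]"
)
REFERENCE = re.compile(r"\[reference '([^']+)' (\S+)\]")


@dataclass
class Fade:
    direction: str             # "in" or "out"
    seconds: float             # fade duration
    background: bool = False   # True for [background fade ...]
    sound: str | None = None   # optional named sound, e.g. 'threatening music'
    url: str | None = None     # resolved from a matching [reference ...] line


def parse_script(text: str) -> list[Fade]:
    """Extract fade commands, resolving named sounds via [reference ...] lines."""
    refs = dict(REFERENCE.findall(text))  # name -> URL
    fades = []
    for m in FADE.finditer(text):
        background, direction, sound, seconds = m.groups()
        fades.append(Fade(direction, float(seconds), background is not None,
                          sound, refs.get(sound) if sound else None))
    return fades


def fade_gain(fade: Fade, t: float) -> float:
    """Linear gain between 0.0 and 1.0, t seconds after the fade starts."""
    if fade.seconds <= 0:
        return 1.0 if fade.direction == "in" else 0.0
    progress = min(max(t / fade.seconds, 0.0), 1.0)
    return progress if fade.direction == "in" else 1.0 - progress
```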

You may say I’m a Dreamer

To use the Hyperaudio Pad to its full potential, we first need our media to be transcribed, and to do this we need to make tools that make transcription easier and, if possible, free. However, I believe that hypertranscripts deliver so many benefits that the incentive to transcribe media is high.

The Hyperaudio Pad is just one of many tools that could be built on the underlying Hypertranscript platform. To build this platform we should collaborate with others to:

- establish a standard markup for transcripts

- make the platform easy to build upon and enhance as new technologies become viable

- create a community with a pioneering spirit

- develop an ecosystem to facilitate the creation of a toolkit

During the course of this project it’s been a pleasure to find many other participants interested in the general theme of describing/transcribing media and utilizing the result. Over the last couple of weeks I have been collaborating with Julien Dorra, Samuel Huron, Nicholas Doiron and Shaminder Dulai. The excitement has been palpable, and although we were only communicating virtually, it felt like we were sparking off each other.

So I guess, at least as far as this dream is concerned, I’m not the only one.

Introducing the Hyperaudio Pad (working title)

Last week as part of the Mozilla News Lab, I took part in webinars with Shazna Nessa – Director of Interactive at the Associated Press in New York, Mohamed Nanabhay – an internet entrepreneur and Head of Online at Al Jazeera English and Oliver Reichenstein – CEO of iA (Information Architects, Inc.).

I have a few ideas on how we can create tools to help journalists, and I mean journalists in the broadest sense: casual bloggers as well as hacks working for large news organizations. In previous weeks I have been absorbing all the fantastic and varied information coming my way. Last week things started to fall into place. A seed of an idea that I’ve had at the back of my mind for some time pushed its way to the front and started to evolve.

Something that cropped up time and again was that if you are going to create tools for journalists, you should try to make them as easy to use as possible. The idea I hope to run with is a simple tool that allows users to assemble audio or video programs from different sources using a paradigm that most people are already familiar with: copying and pasting text. I hope to build on my work on something I’ve called hypertranscripts, which strongly couple text and the spoken word in a way that is easily navigable and shareable.

The Problem

Editing, compiling and assembling audio or video usually requires fairly complex tools. This is compounded by the fact that it’s very difficult to ascertain the content of the media without actually playing through it.

The Solution?

I propose that we step back and consider other ways of representing this media content. In the case of journalistic pieces, this content usually includes the spoken word, which we can represent as text by transcribing it. My idea is to use the text to represent the content and allow that text to be copied, pasted, dragged and dropped from document to document with its associated media intact. The documents will take the form of hypertranscripts, and this assemblage will all work within the context of my proposed application, going under the working title of the Hyperaudio Pad. (Suggestions welcome!) Note that pasting any of this content into a standard editor will result in hypertranscripted content that could exist largely independently of the application itself.

Some examples of hypertranscripts can be found in a couple of demos I worked on earlier this year:

Danish Radio Demo

Radiolab Demo

As the interface is largely text based, I’m taking a great deal of inspiration from the elegance and simplicity of Oliver’s iAWriter. Here are a couple of rough sketches:

Edit Mode

Playback Mode

Working Together

Last week I’m happy to say that I found myself collaborating with other members of the News Lab, namely Julien Dorra and Samuel Huron, both of whom are working on related projects. These guys have some excellent ideas that relate to meta-data and mixed media that tie in with my own and I look forward to working with them in the future. Exciting stuff!

Accessibility, Community and Simplicity

In last week’s Mozilla News Lab we were treated to webinars from Chris Heilmann, John Resig and Jesse James Garrett.

Chris (a Technical Evangelist for Mozilla) took us on a useful whistle-stop tour of HTML5 and friends, technologies that I certainly wish to take advantage of in my final project. I feel that there’s so much potential yet to be tapped that it’s difficult to know where to start, but I’ll definitely be using the new audio and video APIs and looking into offline storage. Chris also touched on accessibility, something that I want to bake into any tool I come up with. To me accessibility is interesting, not just from the perspective of users with disabilities, but also because what I hope to do is make news (and the tools to create news) more accessible to all people.

John’s talk was fascinating to me as a project coordinator of a JavaScript library. Relating it directly to the News Lab, I found it interesting that many successful projects are a product of both good tools and strong community. The community aspect should not be underestimated and is perhaps more important than the quality of the tool or service (which can always be improved by the community). I spoke about ‘Community Driven Development’ this year at FOSSCOMM, so the subject is close to my heart, but to hear this confirmed by someone who has experienced success at the very highest level was great. I am now convinced that whatever form my final project takes, it will not only be community driven but will have community built in, which to me means:

- Providing forums and methods of communication and feedback with the initial release

- Building extensibility into the tool so people can add functionality via plugins

- Encouragement of collaboration – make it easy for people to get involved

- Making sure that the documentation is comprehensive and clear (and can be contributed to)

- Fanatical support and encouragement

I also attended the ‘extra’ webinar given by Jesse James Garrett. In some ways this was the most useful to me, as it focussed on designing the user experience (UX), something I’m keen to learn more about, and who better to tell me than the author of The Elements of User Experience? It was fascinating to take a peek at how UX experts like Jesse approach the subject of design. I sat there transfixed as he broke down the process and discussed ideas such as psychology, emotion, how the user feels and their relationship with a given product or tool. Jesse spoke about the personality of a product and how users relate to tools as they would to another person, using similar parts of the brain as they do when relating to people.

I really want the tool I create to be as simple as possible to use, so my question to Jesse was:

“Is it possible to make a product so simple that it doesn’t need a personality, or needs less? Or is the simplicity its personality?”

The answer: “Simplicity is a personality.”

Great. Hopefully I’m on the right track then.

Build First, Ask Questions Later

As part of the Knight Mozilla News Challenge I’m taking part in, I attended two webinars. The first, given by Aza Raskin, concerned prototyping and communication; the second was the story of Storify and the Hacks/Hackers community, told by founder and journalist Burt Herman.

I found both these webinars extremely valuable. I had actually seen Aza’s talk in November in Barcelona when he spoke at the Drumbeat Festival, and it was as inspiring then as it is now. Its main thrust was that prototypes are important as a way to get your idea out there, put it in front of people and iterate upon it! The Storify story gave a different perspective, and I found myself agreeing with its thoughts on following your passion, building community and teams, and again getting your product out there!

However, the point in Burt’s talk that really stood out for me was his stress on keeping things simple. The Minimum Viable Product approach is something which resonates strongly with me (I have to say I’m a big fan), but what he said next really struck home. In response to a question on how Burt and his team went from early prototypes to the current incarnation of Storify, he re-emphasised the iterative approach of gathering feedback from early minimal releases … and then BAM, out it came, mentioned almost as an aside:

“We built this thing that made it easy to embed a tweet in a blogpost”

“so you could just type in the ID of a tweet and it would give you a nice formatted HTML thing”

Wow!

So beautifully simple, yet hugely powerful. And it was built by co-founder Xavier Damman in a day! Unsurprisingly, TechCrunch picked it up almost immediately and many people started using it soon after. Once they realised what they had, they built upon it and worked it into their main product. This for me was such a great example of what the ‘just build it’ mentality can achieve. Prior to that they had been experimenting with slightly more complex systems, but they tried a few variations on a theme and, with the help of good feedback, hit upon a fantastic solution.

I have a few ideas for applications for the ‘Unlocking Video’ challenge. I’m not entirely sure of any of them, but one thing’s for certain: I’m going to get the very core of an idea out there early, then iterate wildly in response to as much feedback as I can gather and hope, as Burt and his team did, to strike gold.

Related resources:

Storify storified – SXSW Winners [webmission.be]

Storify Gets Funding from Khosla Ventures to Reinvent Media Online [gigaom.com]

Storify Raises $2M From Khosla Ventures To Blend Social Media With Storytelling [techcrunch.com]

Tweet embedding tool: http://media.twitter.com/blackbird-pie

The Storify Co-founders: Burt Herman (@burtherman), Xavier Damman (@xdamman)

