Introducing the Hyperaudio Pad (working title)

Last week as part of the Mozilla News Lab, I took part in webinars with Shazna Nessa – Director of Interactive at the Associated Press in New York, Mohamed Nanabhay – an internet entrepreneur and Head of Online at Al Jazeera English and Oliver Reichenstein – CEO of iA (Information Architects, Inc.).

I have a few ideas on how we can create tools to help journalists. I mean journalists in the broadest sense – casual bloggers as well as hacks working for large news organizations. In previous weeks I have been absorbing all the fantastic and varied information coming my way. Last week things started to fall into place. The seed of an idea that I’ve had at the back of my mind for some time pushed its way to the front and started to evolve.

Something that cropped up time and again was that if you are going to create tools for journalists, you should try to make them as easy to use as possible. The idea I hope to run with is a simple tool that allows users to assemble audio or video programs from different sources by using a paradigm that most people are already familiar with. I hope to build on my work on something I’ve called hypertranscripts, which strongly couple text and the spoken word in a way that is easily navigable and shareable.

The Problem

Editing, compiling and assembling audio or video usually requires fairly complex tools. This is compounded by the fact that it’s very difficult to ascertain the content of the media without actually playing through it.

The Solution?

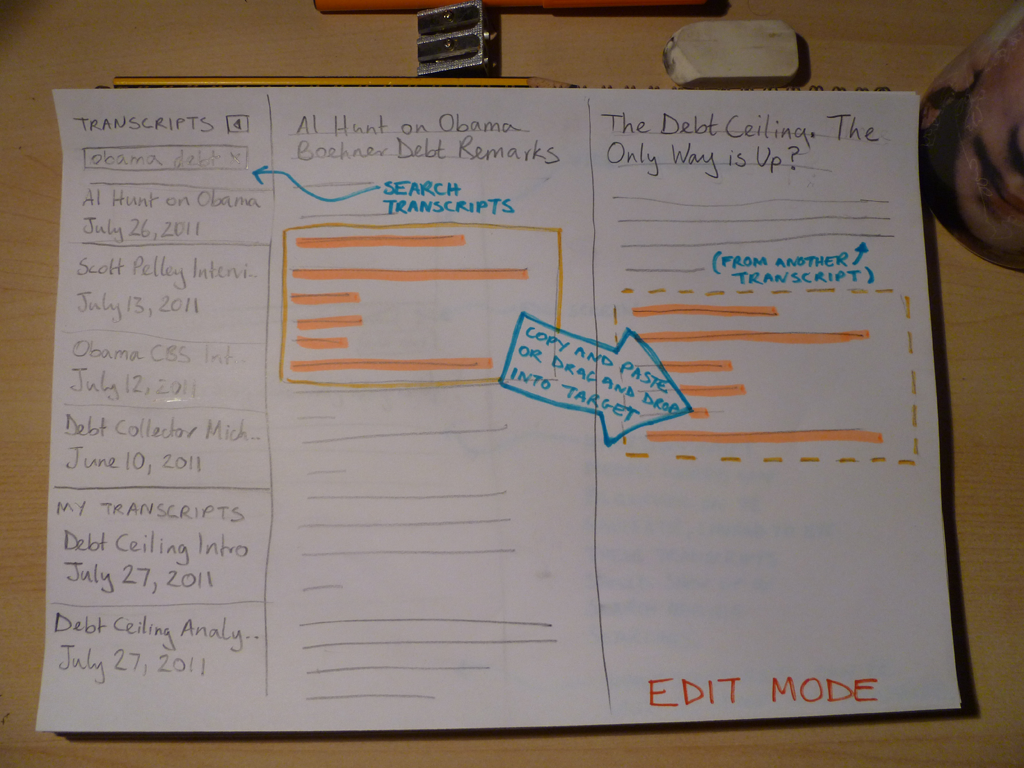

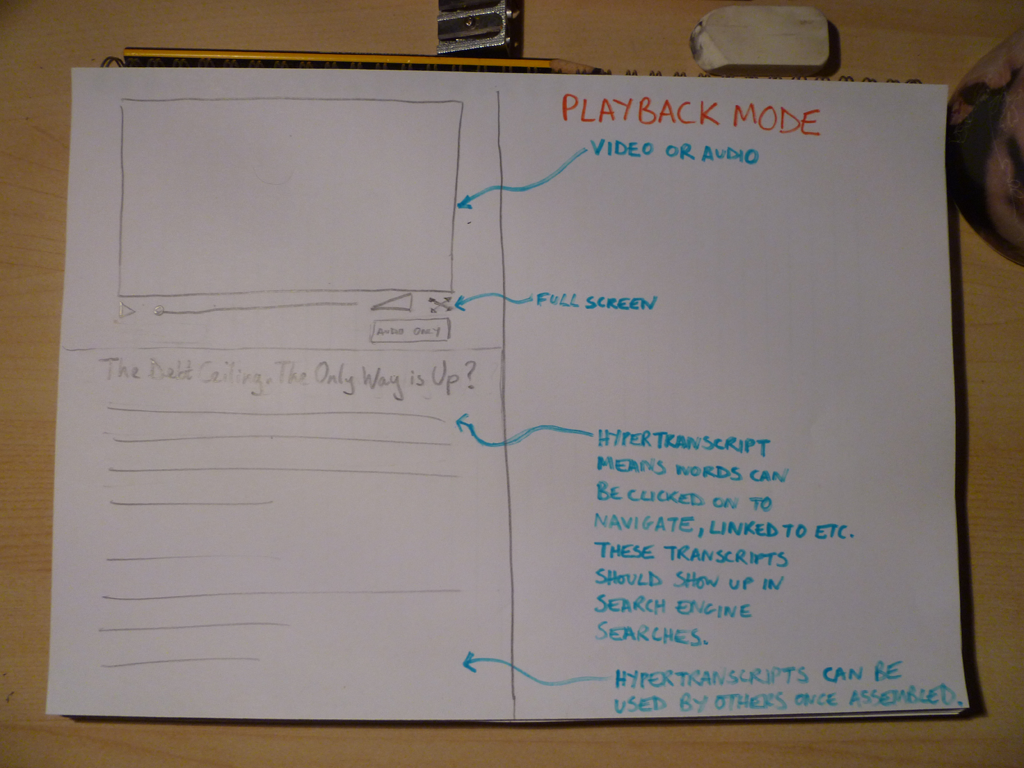

I propose that we step back and consider other ways of representing this media content. In the case of journalistic pieces, this content usually includes the spoken word, which we can represent using text by transcribing it. My idea is to use the text to represent the content and allow that text to be copied, pasted, dragged and dropped from document to document with the associated media intact. The documents will take the form of hypertranscripts, and this assemblage will all work within the context of my proposed application, going under the working title of the Hyperaudio Pad. (Suggestions welcome!) Note that pasting any content into a standard editor will result in hypertranscripted content that could exist largely independently of the application itself.
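To make the idea a little more concrete, here is a minimal sketch of how text could carry its media along with it. This is only an illustration under my own assumptions, not how the Hyperaudio Pad is or will be built: the names `Word`, `Clip` and `to_clips`, the file names and the timings are all hypothetical. Each word remembers which media file it came from and when it is spoken, so a pasted run of words can be turned back into a list of clips to play.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Word:
    """One transcribed word, tied to the moment it is spoken (hypothetical model)."""
    text: str
    start: float  # seconds from the start of the media file
    end: float
    source: str   # path or URL of the audio/video file
    index: int    # position of the word in its original transcript


@dataclass(frozen=True)
class Clip:
    """A contiguous stretch of one media file."""
    source: str
    start: float
    end: float


def to_clips(words: List[Word]) -> List[Clip]:
    """Collapse an edited run of words into the clips needed to play it back."""
    clips: List[Clip] = []
    prev = None
    for w in words:
        follows_on = (
            prev is not None
            and w.source == prev.source
            and w.index == prev.index + 1
        )
        if follows_on:
            # Same file and the very next word: extend the current clip.
            last = clips[-1]
            clips[-1] = Clip(last.source, last.start, w.end)
        else:
            # New file, or a jump within the same file: start a new clip.
            clips.append(Clip(w.source, w.start, w.end))
        prev = w
    return clips


# Two made-up transcripts with made-up timings.
interview = [
    Word("We", 0.0, 0.2, "interview.ogg", 0),
    Word("need", 0.2, 0.5, "interview.ogg", 1),
    Word("simple", 0.5, 1.0, "interview.ogg", 2),
    Word("tools", 1.0, 1.4, "interview.ogg", 3),
]
speech = [
    Word("for", 12.0, 12.2, "speech.ogg", 0),
    Word("every", 12.2, 12.5, "speech.ogg", 1),
    Word("journalist", 12.5, 13.1, "speech.ogg", 2),
]

# "Copy" the last two words of the interview and "paste" the speech after them.
document = interview[2:] + speech
clips = to_clips(document)
text = " ".join(w.text for w in document)
```

Here `text` reads "simple tools for every journalist" and `clips` holds two entries, one per source file, which a player could step through in order. Editing the text is editing the media.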

Some examples of hypertranscripts can be found in a couple of demos I worked on earlier this year:

Danish Radio Demo

Radiolab Demo

As the interface is largely text-based, I’m taking a great deal of inspiration from the elegance and simplicity of Oliver’s iA Writer. Here are a couple of rough sketches:

Edit Mode

Playback Mode

Working Together

I’m happy to say that last week I found myself collaborating with other members of the News Lab, namely Julien Dorra and Samuel Huron, both of whom are working on related projects. These guys have some excellent ideas relating to metadata and mixed media that tie in with my own, and I look forward to working with them in the future. Exciting stuff!

5 Comments to Introducing the Hyperaudio Pad (working title)


I love your idea of editing video through its transcript!

I think it would be useful to find a design layout where the video is visible at the same time as the text (for the editing). I’m worried about the complexity of editing the text transcript at the bottom and editing it at the top. It’s the same problem I tried to point out in the direct participation post.

The idea is awesome!

With your solution you solve two other problems: video indexing, and keeping the metadata attached during editing…

We can imagine an Etherpad hypertranscript.

It makes me think of these experiments, although they are not oriented around editing:

http://voxaleadnews.labs.exalead.com/play.php?flv=France24_FR%2FWB_FR_NW_PKG_UPGATE_DETTE_US_SORTIE_DE_CRISE_NW419909-A-01-20110801.mp4&q=&language=all

http://liris.cnrs.fr/advene/examples/tbl_linked_data_html5/transcript.html

cheers

sam

http://dev.w3.org/html5/spec/video.html#timed-text-tracks

Thanks Samuel, those are useful links.

Some interesting examples of hypertranscripts there. It’s good to see others working on the same ideas.

Timed Text Tracks are interesting, but I think I will go for the simplest possible approach for now, so that it will work cross-browser. There are many ways to advance in future, though. Another useful emerging technology is Media Fragments: http://www.w3.org/TR/media-frags/

[...] part in the Mozilla Knight Journalism challenge where I was encouraged to research and blog some ideas on a tool I called the Hyperaudio Pad. Happily I was flown over to Berlin for a week of intense [...]

[...] if there was an application, Boas asked in a blog post earlier this year, that would help compile new videos or audio recordings based on the remix of this kind of [...]