The Hyperaudio Pad – Next Steps and Media Literacy

By Mark Boas

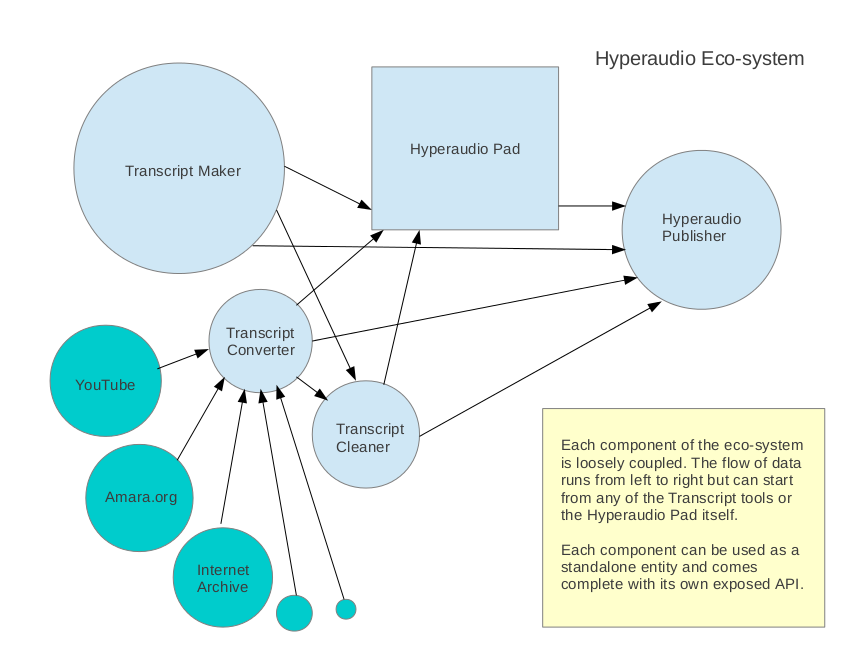

Hyperaudio is a term coined by Henrik Moltke. After discovering we had similar interests we started working on the concept a while back, resulting in a few demos such as the Radiolab demo for WNYC. That was a couple of years ago now. Since then I have been working with my colleague Mark Panaghiston on various Hyperaudio demos and thinking about how we can create a suite of tools I imaginatively call the Hyperaudio Ecosystem. At the center of that ecosystem sits the Hyperaudio Pad.

What is Hyperaudio?

But hey, let’s back up a bit. What is this Hyperaudio of which I speak? Simply put, Hyperaudio aims to do for audio what hypertext did for text – that is to integrate audio fully into the web experience. I wrote more about that here.

The Hyperaudio Pad is a tool that enables people to build up or remix audio and video using the underlying timed-transcripts. At the moment we’re concentrating on transcribing the spoken word.

Over the last seven days or so the other Mark and I have been working fairly solidly on taking the Hyperaudio Pad to the next level. It’s great to work with Mark P for several reasons. Obviously, as key author of jPlayer, he knows pretty much all there is to know about web-based media. But also, although we are both developers, we are in many ways diametrically opposed. Mark is keen on code quality and doing things properly and I just want to get things out there. Happily the compromise we arrive at when we work together is usually a good one. Mark is coming around to minimal-viable solutions and I have to admit that doing things right can save time in the long run.

In fact there is a world of difference between the earlier versions of the Hyperaudio Pad that I largely developed and the version we have now after a week of collaboration.

Putting our weird Renée and Renato relationship aside for a bit, let’s talk a little about what it is that the Hyperaudio Pad is actually meant to do.

The Power of the Remix

OK, so the key aims here are really to encourage the remixing of media in a new and refreshingly easy way and, in doing so, promote media literacy. On the subject of remixing and its importance in counter-culture, I’d encourage you to check out RiP!: A Remix Manifesto and the excellent Everything is a Remix series.

Actually Brett Gaylor of Remix Manifesto fame is doing similar work by leading the effort to build sequencing into Popcorn Maker. We’re approaching the same objective from completely different angles.

Although we use the Popcorn.js library at the heart of the Hyperaudio Pad, we use text to describe and represent media content. This may seem counter-intuitive, but transcripts can actually be a great way to navigate media content. Transcripts help break media out of its black box, so we can scan and search through it relatively quickly. The Hyperaudio Pad borrows from the word-processor paradigm, allowing people to copy blocks of transcript along with the associated media. Further, it allows them to describe transitions and effects and to adjust volumes using natural language that resembles editing directions in a script.

Media Literacy

As an example of some transitions and effects you can currently insert between clips:

[fade through black over 2 seconds, apply effects nightvision, scanlines, tvglitch] currently works.

which is equivalent to:

[fade black 2 apply nightvision scanlines tvglitch]

but we want to encourage the user to be descriptive as these directions are both human and computer readable.
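To show how the descriptive and terse forms can boil down to the same thing, here is a minimal sketch of a direction parser. It is not the Hyperaudio Pad's actual parser: the function name, the list of filler words and the shape of the returned object are all assumptions made for illustration. The idea is simply to throw away the words that are only there for human readers and keep the keywords.

```javascript
// Hypothetical sketch, not the Hyperaudio Pad's real parser.
// Words that make a direction readable but carry no instruction (assumed list).
const FILLER_WORDS = new Set([
  "through", "over", "second", "seconds", "effect", "effects",
]);

function parseDirection(text) {
  // Strip brackets and commas, then drop the filler words.
  const tokens = text
    .toLowerCase()
    .replace(/[\[\],]/g, " ")
    .split(/\s+/)
    .filter((word) => word !== "" && !FILLER_WORDS.has(word));

  const direction = { transition: null, effects: [] };
  let i = 0;
  while (i < tokens.length) {
    if (tokens[i] === "fade") {
      // Assumed grammar: fade <colour> <duration in seconds>
      direction.transition = {
        type: "fade",
        color: tokens[i + 1],
        seconds: Number(tokens[i + 2]),
      };
      i += 3;
    } else if (tokens[i] === "apply") {
      // Everything up to the next keyword is treated as an effect name.
      i += 1;
      while (i < tokens.length && tokens[i] !== "fade") {
        direction.effects.push(tokens[i]);
        i += 1;
      }
    } else {
      i += 1; // Ignore anything we do not recognise.
    }
  }
  return direction;
}

const descriptive = parseDirection(
  "[fade through black over 2 seconds, apply effects nightvision, scanlines, tvglitch]"
);
const terse = parseDirection("[fade black 2 apply nightvision scanlines tvglitch]");
console.log(JSON.stringify(descriptive) === JSON.stringify(terse)); // prints true
```

Both forms come out as a two second fade through black followed by the same three effects, which is why the longer wording costs nothing.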

Other effects include: ascii, colorcube, emboss, invert, noise, ripple, scanlines, sepia, sketch, vignette

This system will evolve of course, and we hope to allow stuff like:

[change volume to 50% over 3 seconds, change brightness to 20% over 2 seconds, play track 'love me do' from 30 seconds to 35 seconds, change track volume from 0 to 80% over 2 seconds]

(stuff like that)

Transcript snippets, combined with text-described transitions and effects, act as a kind of source code for the media.

Weak References

Cuts are nothing more than weak references to media files, with start and end times, plus effects that are simply layered over the top in real time. This means we can make additive remixes as easily as we can subtractive ones. That is to say, somebody could take a remix and enhance it by peeling back a specific snippet to reveal more, thereby adding to the piece.

In fact a key goal here is to allow remixing of remixes.
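As a rough illustration of why this works, here is a sketch of what a cut might look like as data. The field names, file names and helper functions are all hypothetical and are not taken from the Hyperaudio Pad source. The point is that a cut only names a media file and a time range, so a remix of a remix is just a new list of references and the underlying media is never touched.

```javascript
// Hypothetical data shape: a cut points at media, it does not contain it.
const originalRemix = [
  { media: "interview.mp4", start: 12, end: 18, effects: ["sepia"] },
  { media: "speech.mp4", start: 40, end: 47, effects: [] },
];

// Additive edit: widen a cut to reveal more of the source media.
function peelBack(cut, secondsBefore, secondsAfter) {
  return {
    ...cut,
    effects: [...cut.effects],
    start: Math.max(0, cut.start - secondsBefore), // never before the start of the file
    end: cut.end + secondsAfter,
  };
}

// A remix of a remix: copy the list of cuts, changing only the one at `index`.
function remixOf(cuts, index, change) {
  return cuts.map((cut, i) =>
    i === index ? change(cut) : { ...cut, effects: [...cut.effects] }
  );
}

// Reveal four more seconds before and two more after the first snippet.
const extendedRemix = remixOf(originalRemix, 0, (cut) => peelBack(cut, 4, 2));
console.log(extendedRemix[0].start, extendedRemix[0].end); // prints 8 20
console.log(originalRemix[0].start, originalRemix[0].end); // prints 12 18
```

The original remix is left as it was, so both versions can exist side by side and either can be remixed again.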

So a bit more about the technology powering this. Effects are handled by Brian Chirls’ seriously cool Seriously.js, a comprehensive video compositing library. The great thing about Seriously.js is that it is a modular system. We can add effects (and load their code dynamically) as we go. I also look forward to using some of our experience gained with the Web Audio API to apply realtime audio effects. The wider vision is to make a system that will take raw media, transcribe it and allow you to do all the things you could do with a professional video editing package.

We’ve got plenty more to do, certainly some bugs to iron out, but we’re steadily approaching beta. A lot has been restructured behind the scenes, giving us a more stable platform to build on and more quickly enhance. As for the question I posed and answered on Twitter: what do you call something between alpha and beta? Alpha.

Going Forward

We still plan to add the following features in the near future:

- alter brightness, contrast, saturation etc

- control and fade volume

- add an audio track that can be played simultaneously or sequentially

- add effects/transitions in paragraph etc

- allow editing right down to the word, delete words

- allow fine tuning of start and end points

- allow the playback and sharing of fullscreen video remixes with an option to see the underlying ‘source code’.

If you want to play around yourself you can find the latest demo here.

Source code is on GitHub (I should get around to adding an MIT license or something).

Or you can watch a screen recording of where we were at as of yesterday. Apologies for the choppy quality, oh and the background music, it seemed a good idea at the time.
