Breaking Out – The Making Of

By Mark Panaghiston.

Back in the spring of 2012 we started working with the BBC on a project called Breaking Out, which was a short story that demonstrated the concept of Perceptive Media.

“Perceptive Media, takes narrative back to something more aligned to a storyteller and an audience around a campfire using internet technologies and sensibility to create something closer to a personal theatre experience in your living room.”

With this article we want to look at the technical aspects in greater detail than our previous blog post on the subject.

This Time It’s Personal

Making the content unique for each viewer was no easy task. First we needed to get some information about the viewer and then integrate it into the story.

We had these sources of information available to us:

- geo-location - with authorization

- social networks - if they were logged in

- today’s date

From the geo-location information, we were able to determine the local:

- city

- attractions

- restaurant

- bar

- weather

The social network information checked to see if the user was logged into the following:

- gmail

- digg

We also gathered information for a recent:

- news report podcast

- comedy movie title

- horror movie title

Where appropriate, the information was inserted into the storyline.
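As a rough illustration of that insertion step, the sketch below fills placeholders in a line of dialogue from a bag of viewer data. The `fillLine` helper, the `{city}` placeholder syntax and the default values are all assumptions made for this example; they are not taken from the Breaking Out source.

```javascript
// Hypothetical helper: replace {key} placeholders with viewer data,
// falling back to a neutral default when a value is unavailable
// (for example, when geo-location was not authorized).
function fillLine(template, viewer, defaults) {
  return template.replace(/\{(\w+)\}/g, function (match, key) {
    if (viewer[key] !== undefined && viewer[key] !== null) {
      return String(viewer[key]);
    }
    if (defaults[key] !== undefined) {
      return String(defaults[key]);
    }
    return match; // leave unknown placeholders untouched
  });
}

var defaults = { city: 'town', weather: 'fine' };
var line = 'It is {weather} in {city} today.';

var personalised = fillLine(line, { city: 'Manchester', weather: 'raining' }, defaults);
var generic = fillLine(line, {}, defaults);
```

Having a default for every placeholder keeps the line usable when a source of information is missing, which fits a linear story that has to work in every situation.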

The storyline was written by Sarah Glenister, who explained that the process was difficult because a story arc usually depends on the content of the story. If it is raining, the story may remark on the damp conditions or wet hair, but with Perceptive Media the story arcs had to be rather benign to accommodate a variety of situations. This was in part due to the story remaining linear, rather than becoming a tree structure as in an adventure game.

There’s not much Choice of Technology

Since this project had a modest budget, the dynamic voice generation was limited to one character, the Lift. This allowed us to use the speak.js library, where the artificial voice worked well with the non-human Lift character. The static lines spoken by the Lift were generated and stored in audio files so that they matched with the dynamic parts.

The production required effects to be applied to the audio so that, for example, it sounded like it was happening in a hallway or in a lift. Some sounds required timing alignment relative to other sounds, such as music getting louder when a door opens. To complicate matters, the timing of the whole story also had to be adjustable, and the volume of the various tracks had to be controllable through a control panel.

To manipulate the audio, we saw three choices:

The Audio Data API is a low level API that gives access to the raw audio data as it plays and as such it allows you to do pretty much anything you want, but does not give you any of the tools to do it with. You are also limited by how much your CPU can crunch in the time between sample blocks.

The audiolib.js library by Jussi Kalliokoski appeared to be a contender since it offered a cross-browser solution covering Firefox and Chrome, but it fell foul of the size of the data processing requirement and of the need for WAV files as input. Initial investigations into the convolution effect indicated that it would take approximately 20 minutes of processing to apply, which was unreasonable. There were other issues: changing the gains would require reprocessing the whole track, and changing the timings would require similar reprocessing of the faders.

The Web Audio API was the most straightforward solution. It had a large number of tools built into it, and the input could take mp3 data or be connected to an HTML5 Audio Element. The node based system allowed complicated audio networks to be implemented in a relatively simple manner. And finally, the API was real-time, in that the audio sent to a node had the effects applied to it on the fly.

We decided to go ahead with the Web Audio API implementation, but since this would only satisfy Chrome (at that time) we implemented a fallback solution that played the original audio through jPlayer. This decision was backed up shortly afterwards when it was decided that the future W3C standard for advanced audio would be based on the Web Audio API.
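The choice between the two paths can be made with a simple feature check. The sketch below is an assumption about how such a check might look, not the project's actual code; `chooseBackend` is a hypothetical name, and the global object is passed in so the function can be exercised outside a browser.

```javascript
// Hypothetical feature check: pick the Web Audio path when the browser
// exposes an AudioContext constructor (prefixed as webkitAudioContext in
// Chrome at the time), otherwise fall back to plain playback.
function chooseBackend(globalObject) {
  var Ctx = globalObject.AudioContext || globalObject.webkitAudioContext;
  return typeof Ctx === 'function' ? 'webaudio' : 'fallback';
}

// In a browser this would be called as chooseBackend(window).
var chromeLike = chooseBackend({ webkitAudioContext: function () {} });
var otherBrowser = chooseBackend({});
```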

We want more Control

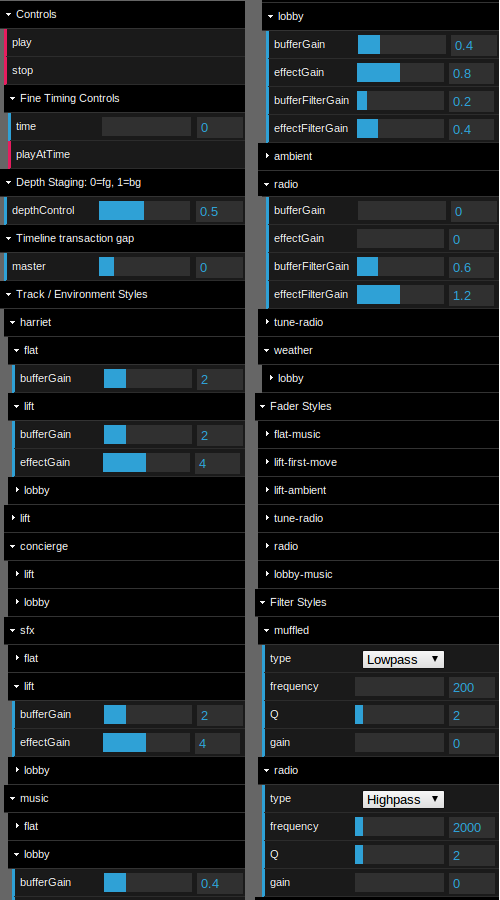

To complicate matters the system would have a hidden Easter Egg that enabled control over the vast majority of the variables. The Easter Egg would enable the control of:

- timing

- depth staging

- track volume

- faders

- filters

To open the Easter Egg, wait until Breaking Out has finished loading and then click under the last 2 of the copyright 2012 at the bottom right.

You’ll then have access to the Control Panel.

The control panel uses dat.GUI by Chrome Experiments.

Let’s give the Audio some Style

First we needed to develop a system to define the styles for each of the audio characteristics. With the following four style types we were able to define every situation required for the story:

- track / environment

- faders

- filters

- convolution effects

The track/environment styles defined how a specific track should sound in a given environment. The tracks were not normalized against each other, so we needed to be able to control the gain of each track independently. We also needed to define the audio properties to apply, for example, when Harriet is speaking in the lift environment.

    myPM.setTrackEnv('harriet', 'lift', {
      effect: 1,
      bufferGain: 2,
      effectGain: 4,
      bufferFilterGain: 0,
      effectFilterGain: 0,
      depth: 'foreground'
    });

The other styles were defined in a similarly simple manner to enable their use throughout the code while having a common point of control. For example, the filter style:

    myPM.setFilter('muffled', {
      type: 0, // Lowpass
      frequency: 200,
      Q: 2,
      gain: 0
    });
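A filter style like this eventually has to be copied onto a filter node. The sketch below shows one way that could be done; `applyFilterStyle` is a hypothetical helper, not part of perceptive.media.js. It also assumes the numeric type constants follow the order used in early drafts of the Web Audio API (0 being lowpass, as in the style above), and maps them to the string names that current browsers expect. A plain object stands in for the node returned by `createBiquadFilter()` so the example runs anywhere.

```javascript
// Legacy numeric filter types (assumed order from early Web Audio drafts)
// mapped to the string names used by current browsers.
var FILTER_TYPES = ['lowpass', 'highpass', 'bandpass', 'lowshelf',
                    'highshelf', 'peaking', 'notch', 'allpass'];

// Hypothetical helper: copy a style object onto a BiquadFilterNode-like object.
function applyFilterStyle(filterNode, style) {
  filterNode.type = typeof style.type === 'number' ? FILTER_TYPES[style.type] : style.type;
  filterNode.frequency.value = style.frequency;
  filterNode.Q.value = style.Q;
  filterNode.gain.value = style.gain;
  return filterNode;
}

// Stand-in for audioContext.createBiquadFilter().
var fakeFilter = { type: '', frequency: { value: 0 }, Q: { value: 0 }, gain: { value: 0 } };
applyFilterStyle(fakeFilter, { type: 0, frequency: 200, Q: 2, gain: 0 });
```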

The Audio’s got Assets

Breaking Out has around 200 individual audio assets that are put together on the client to complete the production. Each asset needed to define its audio file, its text, its relationship to the rest of the assets and so on.

An example asset:

    {
      track: 'harriet',
      env: 'flat',
      text: "Ok, I can do this...",
      url: media_root + 'harriet/#FMT#/h1.#FMT#'
    }
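The `#FMT#` token in the URL looks like a placeholder for the audio format, which would let the same asset definition serve different browsers. That reading is an assumption, as are the `resolveUrl` helper and the `media_root` value in this sketch.

```javascript
// Hypothetical helper: swap every #FMT# token for the chosen audio format.
function resolveUrl(urlTemplate, format) {
  return urlTemplate.split('#FMT#').join(format);
}

var media_root = 'media/'; // assumed value for this example
var asset = {
  track: 'harriet',
  env: 'flat',
  text: 'Ok, I can do this...',
  url: media_root + 'harriet/#FMT#/h1.#FMT#'
};

var mp3Url = resolveUrl(asset.url, 'mp3');
var oggUrl = resolveUrl(asset.url, 'ogg');
```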

The Asset class is responsible for setting up each audio asset ready to be played. This involves preparing the asset for use with either the Web Audio API or the jPlayer fallback, loading in the audio data when prompted and populating the asset information, such as the duration. The asset can then be played when requested, bearing in mind that each asset expects to be played in the future. For example, if an asset sits at 120 seconds in the timeline, telling it to play(0) makes it play in 2 minutes, and telling it to play(120) makes it play now. Additionally, using the granular noteGrainOn Web Audio API command, the asset can start part way through.
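The play(0) and play(120) behaviour boils down to a small calculation. The sketch below is an illustration under assumed names (`planPlayback`, `start`, `duration`), not the Asset class itself: it works out how long to wait and how far into the audio to begin, given the point on the timeline that playback starts from.

```javascript
// Hypothetical planner: given where the asset sits on the absolute timeline
// and the point playback starts from, work out how to schedule it.
function planPlayback(asset, fromTime) {
  var end = asset.start + asset.duration;
  if (fromTime >= end) {
    return { play: false }; // already finished, skip it
  }
  if (fromTime <= asset.start) {
    // Whole asset lies in the future: wait, then play from its beginning.
    return { play: true, delay: asset.start - fromTime, offset: 0 };
  }
  // Playback starts inside the asset: play now, part way through.
  return { play: true, delay: 0, offset: fromTime - asset.start };
}

var asset = { start: 120, duration: 10 };
var fromStart = planPlayback(asset, 0);   // wait 120 s, play from the beginning
var exact = planPlayback(asset, 120);     // play now, from the beginning
var midway = planPlayback(asset, 125);    // play now, 5 s into the audio
var tooLate = planPlayback(asset, 130);   // nothing left to play
```

The `delay` would be added to the audio context's current time and passed to noteOn, with a non-zero `offset` handled by noteGrainOn. In current browsers both cases are covered by the `start(when, offset)` method of the buffer source node.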

The Asset class starts at line 737 in perceptive.media.js

Arranging it all into a Timeline

The majority of assets were collected together in timelines, where the code would position them relative to each other on the absolute timeline. Branches were used to add assets that occurred in parallel to other assets, where the branch asset timings were linked to the assets in the main timeline. For example, a piece of music playing between two points may start relative to one asset and stop relative to another asset.

The master timeline assets are defined in an array starting at line 143 in breaking.out.js

Branches were associated with master timeline assets by giving the master timeline asset an ID. The branch then used this ID as a reference point for its timings.

The branch assets are defined in a (much smaller) array starting at line 1542 in breaking.out.js
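To make the ID mechanism concrete, here is a minimal sketch of resolving one branch against the master timeline. The field names (`startRef`, `stopRef`, `offset`), the ids and the times are invented for this example and do not reflect the actual structure in breaking.out.js.

```javascript
// Master timeline assets, with absolute start times already worked out.
var master = [
  { id: 'doorOpens', start: 30, duration: 2 },
  { id: 'doorCloses', start: 55, duration: 2 }
];

// Hypothetical branch asset: starts relative to one master asset and
// stops relative to another.
var musicBranch = {
  track: 'music',
  startRef: { id: 'doorOpens', offset: 0.5 }, // 0.5 s after the door starts opening
  stopRef: { id: 'doorCloses', offset: 1 }    // 1 s after the door starts closing
};

function findById(assets, id) {
  for (var i = 0; i < assets.length; i++) {
    if (assets[i].id === id) { return assets[i]; }
  }
  throw new Error('Unknown asset id: ' + id);
}

// Turn the branch's relative references into absolute start and stop times.
function resolveBranch(branch, assets) {
  var start = findById(assets, branch.startRef.id).start + branch.startRef.offset;
  var stop = findById(assets, branch.stopRef.id).start + branch.stopRef.offset;
  return { track: branch.track, start: start, stop: stop };
}

var resolved = resolveBranch(musicBranch, master);
```

Because the branch only stores references, moving a master asset automatically moves anything attached to it the next time the branch is resolved.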

Relative Timings Please. Absolutely!

The Web Audio API has many strengths and, in our case, a weakness. If we were making a game, we could easily trigger a sound to play when you click a button to launch a rocket. In our case, we wanted to line up hundreds of audio pieces to play at the right time. This means that when you start the audio, everything must be in place and ready to queue up on the Web Audio API.

This explains the load bar at the start. All the assets must load in so that they are ready to be played and their duration is known, and in turn their relative relationships calculated into an absolute time. This absolute time is then used to queue the asset to play in the future.

The control panel complicated this part, due to two of its functions. First, the user may start playback from any point in the timeline. Second, the user may change the time gap between each relative asset, which causes the absolute timings to be recalculated.
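The conversion from relative to absolute timings can be pictured as a single pass over the assets once their durations are known. The sketch below is a simplified assumption (a strictly sequential timeline with one global gap, and a hypothetical `layoutTimeline` function); the real code also has to handle branches.

```javascript
// Hypothetical layout pass: play each asset after the previous one,
// separated by a configurable gap, and record its absolute start time.
function layoutTimeline(assets, gap) {
  var cursor = 0;
  return assets.map(function (asset) {
    var placed = { name: asset.name, duration: asset.duration, start: cursor };
    cursor += asset.duration + gap;
    return placed;
  });
}

var assets = [
  { name: 'A', duration: 6 },
  { name: 'B', duration: 4 },
  { name: 'C', duration: 3 }
];

var tight = layoutTimeline(assets, 0.5); // starts: 0, 6.5, 11
var loose = layoutTimeline(assets, 2);   // starts: 0, 8, 14
```

Changing the gap in the control panel then amounts to running the pass again and re-queuing everything from the chosen start point.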

Lost in the Audio Map

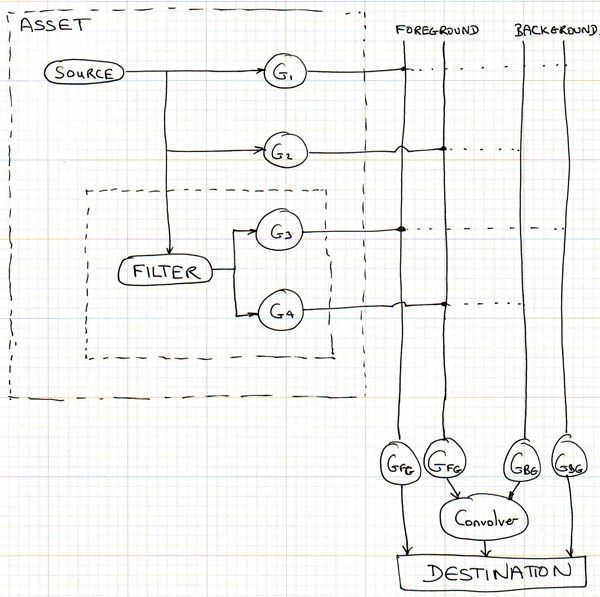

The audio node map for each asset was not as complicated as you might think.

Every asset has two gain nodes connected to its audio source. One gain node is connected to the output and the other gain node is connected to the convolver. If the asset used a filter, the filter was created inside the asset and two more gain nodes were used to pass the filtered audio to the output and to the convolver.

The output path had two depth layers, the foreground and the background. Each asset was connected to one of these output paths, which allowed a depth gain to be applied to the audio.

The convolution effects were also created as assets, but were treated differently from the rest of the assets. These effect assets created 2 gain nodes internally to accommodate the foreground and background layers.

A few points to note here.

- There were only two convolution assets used for the whole project, one each for the lift and lobby environments. The BBC provided the Impulse Responses required for the convolver node.

- During development, we found that using more than 14 convolver nodes started to use all the CPU. We hit this problem because we initially put a convolver inside each asset, rather than having one convolver per environment and routing each asset's audio to it.

- The output depth layer gain nodes have hundreds of assets connected to them.

- The filters inside the assets are only created if required, and excessive use would require a different audio map solution to be implemented, where assets could share filters. As only 2 assets required filters, we implemented them inside the assets.
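The basic wiring for an asset without a filter can be sketched as follows. This is an illustration rather than the project's code: `wireAsset` is a hypothetical function, treating `bufferGain` as the direct path and `effectGain` as the path to the convolver is an assumption about the style fields, and a small fake node type stands in for real Web Audio nodes so the example runs outside a browser. With a real audio context, `createGain()` is the current name for what was `createGainNode()` at the time.

```javascript
// Minimal stand-in for Web Audio nodes so the wiring can run outside a browser.
// Only connect() and a gain value are imitated.
function FakeNode(label) {
  this.label = label;
  this.targets = [];
  this.gain = { value: 1 };
}
FakeNode.prototype.connect = function (target) {
  this.targets.push(target.label);
};
var fakeContext = {
  createGain: function () { return new FakeNode('gain'); }
};

// Hypothetical wiring for one asset: a dry gain to its depth layer and a
// wet gain to the shared convolver for its environment.
function wireAsset(context, source, style, layers, convolver) {
  var dry = context.createGain();
  var wet = context.createGain();
  dry.gain.value = style.bufferGain;
  wet.gain.value = style.effectGain;
  source.connect(dry);
  source.connect(wet);
  dry.connect(layers[style.depth]);
  wet.connect(convolver);
  return { dry: dry, wet: wet };
}

var layers = {
  foreground: new FakeNode('foreground'),
  background: new FakeNode('background')
};
var liftConvolver = new FakeNode('liftConvolver'); // one shared convolver per environment
var source = new FakeNode('source');

var wired = wireAsset(fakeContext, source,
  { bufferGain: 2, effectGain: 4, depth: 'foreground' }, layers, liftConvolver);
```

Keeping the convolver outside the asset means each extra asset costs only two gain nodes, which is what keeps the CPU load manageable.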

Conclusions

The Web Audio API satisfied the goals of the project very well, allowing the entire production to be assembled in the client browser. This enabled control over the track timing, volume and environment acoustics by the client. From an editing point of view, this allowed the default values to be chosen easily by the editor and have them apply seamlessly to the entire production, similar to when working in the studio.

One of the most complicated parts of the project was arranging the asset timelines into their absolute timings. We wanted the input system to be relative since that is a natural way to do things, “Play B after A”, rather than, “Play A at 15.2 seconds and B at 21.4 seconds.” However, once the numbers were crunched, the noteOn method would easily queue up the sounds in the future.

The main deficiency we found with the Web Audio API was that there were no events that we could use to know when, for example, a sound started playing. We believe this is in part due to it being known when that event would occur, since we did tell it to noteOn in 180 seconds time, but it would be nice to have an event occur when it started and maybe when its buffer emptied too. Since we wanted some artwork to display relative to the storyline, we had to use timeouts to generate these events. They did seem to work fine for the most part, but having hundreds of timeouts waiting to happen is generally not a good thing.
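The timeout approach can be sketched like this. The `scheduleCues` function and the cue objects are hypothetical, and the timer function is passed in as a parameter so the example can be run without actually waiting; in a browser it would simply be `window.setTimeout`.

```javascript
// Hypothetical cue scheduler: one timer per cue that is still in the future.
function scheduleCues(cues, fromTime, setTimer) {
  var handles = [];
  cues.forEach(function (cue) {
    if (cue.time < fromTime) { return; } // already passed
    var delayMs = (cue.time - fromTime) * 1000;
    handles.push(setTimer(cue.action, delayMs));
  });
  return handles; // keep these so the timers can be cleared on stop or seek
}

var shown = [];
var recorded = [];
// Fake timer: records the delay and fires the callback immediately.
var fakeTimer = function (fn, ms) { recorded.push(ms); fn(); return recorded.length; };

scheduleCues([
  { time: 10, action: function () { shown.push('lift.jpg'); } },
  { time: 180, action: function () { shown.push('lobby.jpg'); } }
], 60, fakeTimer);
```

Returning the timer handles matters because every seek or timing change in the control panel invalidates the pending timeouts, which then need to be cleared and rescheduled.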

And finally, the geo-location information was somewhat limited. We had to make it specific to the UK simply because online services were either expensive or heavily biased towards sponsored companies. For example, ask for the local attractions and you get back a bunch of fast food restaurants. In practice, you’d need to pay for a service such as this, and this project did not have the budget.

As Harriet said, “OK, I can do this.” And we did!

Download Perceptive Media at GitHub.
