SEO

A few HTML5 questions that need answering

Challenge Accepted

Earlier this week Christian Heilmann posted a talk and some interesting questions on his blog. Perhaps foolishly I decided to accept the challenge of answering them and what follows are my answers to his questions. My hope is to stimulate a bit of civilised debate and so I hope that people challenge what I have written. I’ve tried not to be too opinionated and qualify things with ‘I think’, ‘probably’ etc where possible, but these are inevitably my opinions (and nobody else’s) and should be interpreted as just that.

I think Christian has done a great job putting these questions together – if they make other people think as much as they made me think they’ve certainly been worthwhile. Like many of life’s great questions the answers don’t always boil down to yes and no. In fact usually there is a grey area in which a judgment call is required, and so I apologise in advance if some of the answers are simply ‘it depends’.

Can innovation be based on “people never did this correctly anyways”?

Yes, I’d say that innovation can be based on almost anything. It’s probably better if innovation is based on solid foundations, however I believe the web is more of a bazaar than a cathedral and so solid foundations aren’t always feasible.

If we look at some older browsers where let’s say the spec was interpreted in an unexpected way, take specifically IE5.5 and the CSS box model – hacks are required to get around its ‘creative’ implementation and due to the huge win of being able to write cross-browser web-pages with the same markup, hacks are almost always found. Often hacks by their very nature will be ugly, but this can help serve to remind us of their existence.* Once the hack is found innovation continues again at its usual pace. I’d argue that innovation on the web is built on a series of (often ugly) hacks.

To answer the question in the context of HTML5′s tolerance for sloppy markup, it’s interesting to note that HTML5 does allow you to markup as XHTML if you so choose, personally and maybe because I’m over fastidious, this is what I try to do. Whether the browser picks up my mistakes or just silently corrects them is another issue. Modern editors’ text highlighting helps a lot but if you are taking your markup seriously you may want to validate it. At the end of the day innovation will take place regardless.

*http://tantek.com/log/2005/11.html

Is it HTML or BML? (HyperText Markup Language or Browser Markup Language)

I think HTML should be accessible to more than what we traditionally view as the browser, yes. From an accessibility standpoint it should be a responsibility to make sure that pages are reasonably accessible from a range of devices such as screenreaders. What ‘reasonably accessible’ means could be cause for considerable debate. Additionally it is in most people’s interest to make their pages readable by a search-engine bot. Some developers may find that they want their pages to be parseable in which case they should probably mark them up in XHTML.

I think though, that the most effective way to encourage people to mark up their pages for devices other than browsers is to make clear the benefits of doing so. Search bots and screenreaders will eventually catch up and I think at that point we will be in a better place.

On a side note hypertext is what the web is founded on – it’s the essence of the web if you like. If you stripped down the web to just hypertext you’d still have a web, albeit a limited version of what we have today. Hypertext is really just text or documents endowed with the ability to link to other documents. This ability to link is of course extremely powerful and enables anyone to create a web page that is instantly ‘part’ of the web. Some companies are starting to rely less on the humble hyperlink and invent markup for themselves that relies on JavaScript to actually work, Facebook’s FBML is an example of this and I would perhaps put this ‘extension’ of HTML in the BML camp.

But while so much has been added to HTML, the hyperlink still remains and developers are encouraged to use it, even if in some cases it is just a fallback for more complex interactions. Only the other day we experienced the fallout from Google’s hashbang method of ajaxifying a link, I feel that a large part of this stemmed from the fact that developers felt that they no longer had to populate their hrefs, but in reality it is still highly recommended they do. So no matter how complex a web application becomes I’d argue that it should always be based fundamentally on the good old hyperlink in which case, strictly speaking it would be HTML.

Should HTML be there only for browsers? What about conversion Services? Search bots? Content scrapers?

Some would argue that information published publicly on the web is there to be consumed by however or whatever chooses to consume it. However a semantic web not only makes content more accessible to humans but also to algorithm running computers that seek to diseminate and categorise the wealth of information out there, this new information could then be presented back to a human and so the wheel turns and the machine cranks on. Again I feel developers should be shown the benefits.

Touching again on accessibility, laws exist and I think rightly so, to mandate that certain websites should be accessible to all, just as they do for certain buildings especially public ones. Whether your house or say a merry-go-round should be accessible is less clear. During the development of jPlayer we decided to make elements of it accessible because we felt it was the right thing to do, but the very first versions weren’t. The ‘right-thing-to-do’ can often be more a powerful motivator than any rule, especially if it is difficult to enforce.

Should we shoe-horn new technology into legacy browsers?

I think we should aim to support as many legacy browsers as reasonably possible – yes. What is ‘reasonably possible’ has always been a contentious issue but I think most developers broadly agree on what this means.

A key decision is how consistent you want each browser’s interpretation of your web page or application to be. Note that the experience will never be identical across all browsers, even the modern ones, so a certain degree of comprimise is always required. I really just view the shoe-horning of new technology into legacy browsers as just another hack, although instead of correcting a misimplemented feature its aim is to augment the legacy browser’s feature set to more closely match newer browsers.

Do patches add complexity as we need to test their performance? (there is no point in giving an old browser functionality that simply looks bad or grinds it down to a halt)

If we take patches to mean any code styling or markup that can adequately add missing functionality to a browser, (are also referred to as shims, shivs or more recently polyfills), yes patches do add complexity, but well written patches that can easily be dropped into a project should mask the complexities from the web developer. You could argue that patches are essential if we are to start using new technologies like HTML5 in the wild. Again a decision needs to be made as to whether a patch slows down a browser unacceptedly, whether to fallback to a less complete solution for legacy browsers or whether not to use the new technology at all yet.

How about moving IE fixes to the server side? Padding with DIVs with classes in PHP/Ruby/Python after checking the browser and no JS for IE?

The trouble with moving fixes to the server-side is that, in my experience at least, front-end developers and especially designers (whose lives these fixes affect) like to be able to develop applications with as little contact with the server as possible. Some web pages don’t even require any real server-side interaction and so we would be creating an added burden to the designer or developer’s development cycle. That said if the web developer could continue to work as usual on a web application that relied on the server-side anyway, safe in the knowledge that the server would take care of any differences then I suppose that would be one possible solution.

Another issue is server-side solutions tend to be less transparent and this could cause unneccessary resistance to those who might seek to change and evolve these fixes. You would also have to deal with the situation where several server-side fixes existed (PHP/Ruby/Python etc), the exception perhaps being mods to the actual webserver (although webservers do vary).

It is true that developers or designers are unlikey to work without a webserver these days but on balance I think there are a lot of issues to be resolved before all but the simplest fixes get moved to the server-side. So I’d say some IE fixes on the server-side could well make sense, anything more complex probably isn’t warranted.

Can we expect content creators to create video in many formats to support an open technology?

I don’t think we can expect content creators to create a video in many formats to support a certain technology just because it is open. It’s more likely to depend on the content creators and the market that they are appealing to – whether they are creating a true native cross-platform solution or whether they are willing to fallback to say Flash to deliver content that a browser doesn’t natively support. I think this is an area where some sort of server-side video format conversion module could be useful in encouraging developers to support native video in every browser. Ironically any tool that converts to or from certain types of encoded video could be subject to royalties (I’d love to be proved wrong here). A content creator could decide to support only open video formats but for example if H.264 is assumed not to be open, the big question is how do you create a video fallback for iOS whose browser doesn’t support Flash or similar.

Can a service like vid.ly be trusted for content creation and storage?

The question that immediately springs to mind is what happens if vid.ly goes down? You are essentially introducing another point of failure and it’s probably a good idea to keep these to a minimum, depending of course on how crucial it is that that content is always available. You could also argue that depending on CDNs to host your favourite JavaScript library is a bad idea so I guess it’s a judgment call on how likely it is that a particular service is to go down. Leaving aside the issue of reliability, what happens if a service like vid.ly gets bought out or hacked or decide to change their policy? All these are important questions that we’d probably do well to ask ourselves before relying on such a service.

Content creation could be a winner though, in the sense that it would be nice to upload one format and several other formats be made available to you. It’s a bit unfashionable but I sometimes wonder whether the WordPress model of being able to download and drop in a useful module on to your LAMP stack makes more sense. I’d love to be able to host my own personal version of vid.ly for use on my website only – it also makes sense from a distributed computing point of view.

Is HTML5 not applicable for premium content?

If we take premium content to mean content available for a fee, it is certainly more difficult to protect that content from being downloaded with HTML5. Being able to view source is arguably one of the most important aspects of open technology and HTML5 is a group of open technologies. However there is almost always a way to grab premium content whether it is based upon open technology or not, so at the end of the day we’re really talking about how difficult you make this.

Mark B

The Future of Web Apps – Single Page Applications

The world wide web is constantly evolving and so is the way we write the applications that run upon it. The web was never really designed as a platform for today’s applications, nevertheless we continue to bend it to our will.

Due to differing paradigms we are forced to design our web apps in a completely different way to native apps. Some of the most obvious constraints are those imposed by using the traditional multiple page model, when employed this model clearly illustrates the difference in performance between native and web apps. Many developers will probably feel that the multi-page approach is the price we pay for having search engine indexable, back-button supporting, bookmarkable and accessible applications.

The price is high.

In this article I propose that we can have our cake and eat it. We can have all the benefits of a multi-page web application in a single page. The objective then, is to outline the advantages of this approach and to describe its implementation. To do this I’ve taken an application that is dear to my heart, the humble audio application, and have tried to resolve one of the main issues – the uninterrupted playback of audio throughout.

But first let’s take a look at the advantages of a single-page approach. What it can do.

1. Make your app as ‘snappy’ as a native app.

What we are essentially talking about here is having an application where ‘virtual pages’ are loaded into one single web-page, which means switching between pages need not involve a trip to the server and so the switch occurs almost instantly.

2. Reduce load on your server.

Using the ‘traditional’ approach we load a lot of duplicated content for each page we visit. A single page approach avoids this repetition and so makes your application more efficient to code and run.

3. Give yourself more freedom.

When all ‘pages’ are accessible from one page you give yourself more freedom to manipulate the content of these ‘pages’ client-side. You can easily take content from one page and insert into another. You also have the option of keeping certain aspects of your application ‘fixed’, that is to say that state can be easily preserved as you don’t need to store/retrieve state between page reloads anymore.

We can do all this and still have a site that allows full SEO (Search Engine Optimization) – one of the biggest concerns when developing this type of app.

Making it Work

OK so hopefully I’ve convinced you of the benefits – lets talk a little about how we can achieve them.

I’m assuming that for a web application we are using some kind of server-side language. In this example I’m using PHP. We could also do with some client-side goodness and for that I’m using jQuery.

Note that the approach I use in this example falls back to a traditional multi-page approach when JavaScript is not available. You can call this progressive enhancement or graceful degradation depending upon your perspective. Either way this means that there is no reason that you cannot make your application fully accessible.

Next let’s take a look at the example application. I’ve kept it fairly simple while implementing some functionality which lends itself to the single page approach. We have three ‘virtual pages’ that can be accessed via tabs. Each of these pages displays a tracklisting of an album. You can switch between these albums quickly and easily as the content is already loaded, you can also sample the tracks or add them to a playlist. On the right hand side we have a music player with an integrated playlist. You can keep this player playing throughout as state is easily maintained.

Try the Demo

Click on a few tabs and try out the back buttons, reload the page at any given time and that ‘tab’ state should be maintained, which means that each of the containing pages are bookmarkable. If you like, you can disable your browser’s JavaScript and revisit the link http://happyworm.com/jPlayerLab/single-page-app/ – you should notice that the site falls back to the traditional multi-page model. This incidentally is what a search engine crawler will see, which explains why the content is ‘indexable’. Obviously our jPlayer plugin for jQuery (the bit that handles the audio) will not work with JS disabled however you could take the non JS version as far as you wanted, perhaps using HTML5 audio to allow the user to play tracks in-page.

OK so now you know what it can do, let’s take a look at how it works. I’ve tried to create this example in the most efficient manner possible but I’m sure there is room for improvement. One of the most important aims for me was the non-repetition of code or content. If you change the content it is changed for both non-JS and JS version alike. I’ve taken this quite far but for the sake of simplicity a small amount of repetition remains.

Application Outline

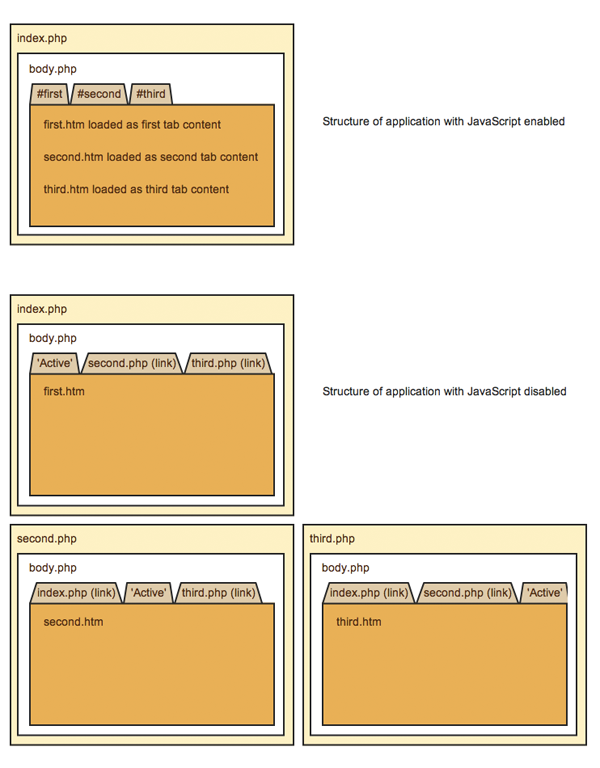

1. index.php is called and body.php loaded into it.

2. If JS disabled body.php links to first.php, second.php and third.php which in turn contain body.php. So we have 3 separate pages. body.php also loads in first.htm, second.htm and third.htm depending on the tab parameter passed.

3. If JS enabled body.php loads first.htm, second.htm and third.htm into tabs (via the JS at the top of index.php).

So effectively, when JS is enabled we are loading all the tab’s contents into the same page. The tabs link to separate pages which load that same tab content, but of course these links are ignored when JS is enabled (because we return false from their click handlers).

With me? If not you may want to take a look at the source.

But what about back-button functionality, page state and bookmarkability? Fortunately this is all taken care of by Ben Alman’s excellent BBQ plugin We add the page name to a hash in the URL and BBQ pretty much takes care of the rest.

Incidentally this approach will work in all major browsers including IE6 and upwards. It even works on the iPad. I may be missing something but I don’t see why every web application shouldn’t be created in this way. Sure, there is a small amount of added complexity, and perhaps the initial page load takes slightly longer, but that seems like a reasonable price to pay.

Thanks to Ben Alman and thanks to Remy Sharp for providing the inspiration for the tab functionality.

Grab the Source

[Edit : 2010-9-1 Changed the source code to include fixes.]

Previous Posts

- The Hyperaudio Pad – Next Steps and Media Literacy

- Breaking Out – The Making Of

- Breaking Out – Web Audio and Perceptive Media

- Altrepreneurial vs Entrepreneurial and Why I am going to Work with Al Jazeera

- HTML5 Audio APIs – How Low can we Go?

- Hyperaudio at the Mozilla Festival

- The Hyperaudio Pad – a Software Product Proposal

- Introducing the Hyperaudio Pad (working title)

- Accessibility, Community and Simplicity

- Build First, Ask Questions Later

- Further Experimentation with Hyper Audio

- Hyper Audio – A New Way to Interact

- P2P Web Apps – Brace yourselves, everything is about to change

- A few HTML5 questions that need answering

- Drumbeat Demo – HTML5 Audio Text Sync

Tag Cloud

-

Add new tag

AJAX

apache

Audio

band

buffered

Canvas

CDN

chrome

community

custom tags

firefox

gig

HTC

HTML5

Hyper Audio

internet explorer

java

javascript

journalism

jPlayer

jQuery

jscript

LABjs

leopard

media

Mozilla

MVP

opera

opera mini

osx

P2P

Popcorn.js

poster

prototyping

rewrite

safari

Scaling

simplicity

SoundCloud

timers

tomcat

video

Web Apps

web design